As software testing evolves, teams are increasingly comparing LLM-based testing with manual software testing to improve QA outcomes. Traditional manual testing relies on human-designed test cases, while large language models (LLMs) introduce more scalable approaches through AI testing tools and AI automation testing techniques.

These AI-driven testing methods can shorten test cycles and increase test coverage by automating work that QA teams would otherwise do by hand. With AI automation tools, teams can streamline workflows and improve accuracy with less manual effort.

Whether a team uses Selenium for automated testing, manual testing tools, or advanced AI testing systems, understanding the strengths of each approach is key. This comparison is meant to support better decisions when selecting software testing tools for a modern QA strategy.

✨ LLM vs Traditional Testing Overview

📌 Purpose: Explore how AI-powered LLM testing improves software quality faster than traditional methods

📌 What to Expect: Understand key differences in accuracy, cost, learning curve, and tool integration

📌 Tools and Techniques: Discover top AI testing tools and automation frameworks used in real-world QA

📌 Outcome: Learn which approach fits best for better ROI and modern development needs

What Is Traditional Software Testing?

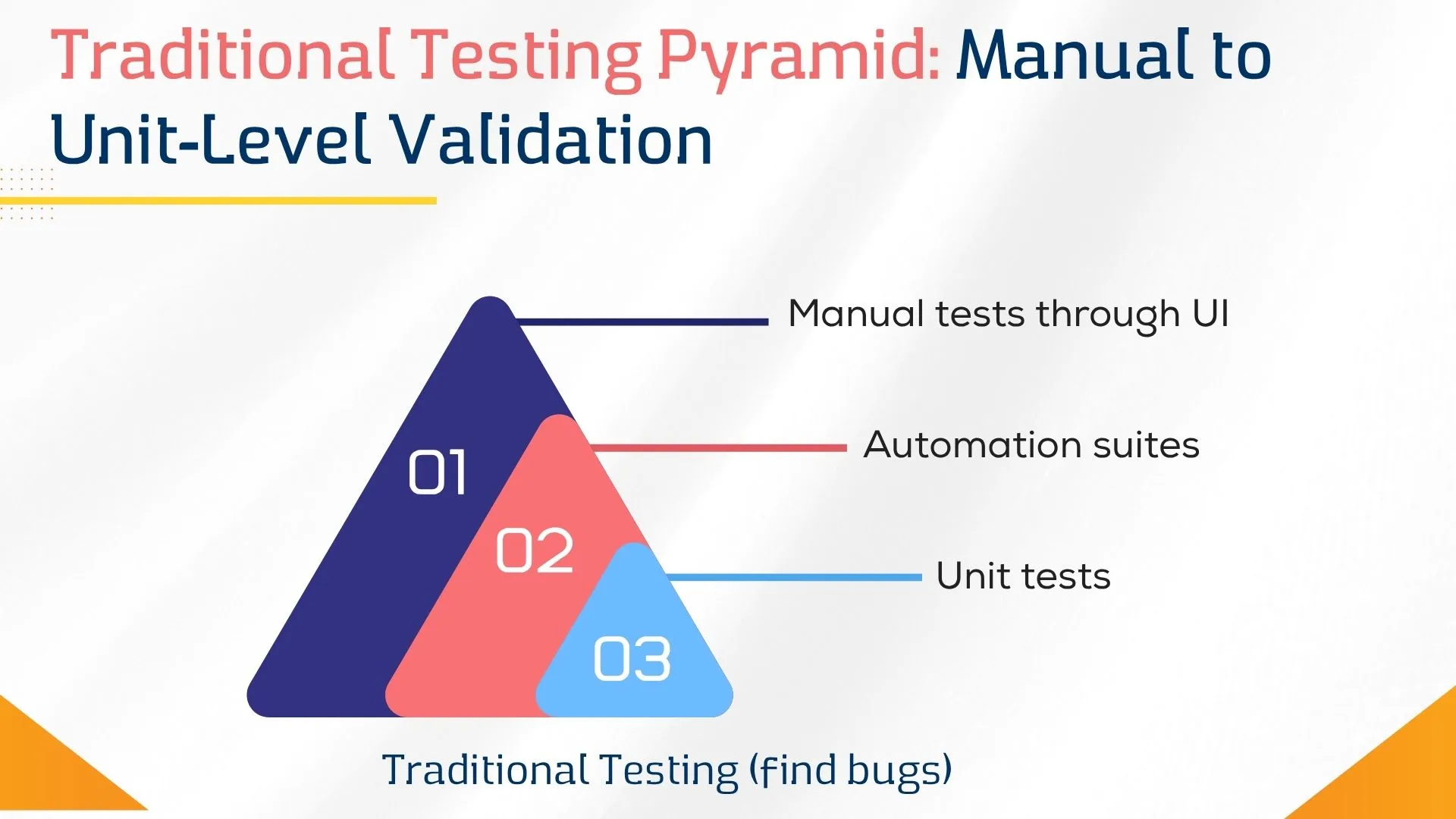

Traditional software testing refers to manual or script-based testing processes performed without the use of advanced AI-driven automation. It focuses on validating software features, detecting bugs, and ensuring software quality assurance through predefined test cases.

Teams often rely on manual testing tools and QA test management software to evaluate user flows and edge cases, which makes this approach well suited to applications that need detailed human judgment, such as visual testing.

While effective for legacy systems and exploratory testing, this approach can be time-consuming and hard to scale in DevOps pipelines. Compared with AI-powered testing tools, traditional methods struggle to keep pace with complex test processes and the frequent runs that continuous integration demands.

However, for projects with static requirements or little user-facing variation, traditional manual software testing still plays a crucial role in an overall testing strategy.

What Is LLM-Driven Testing?

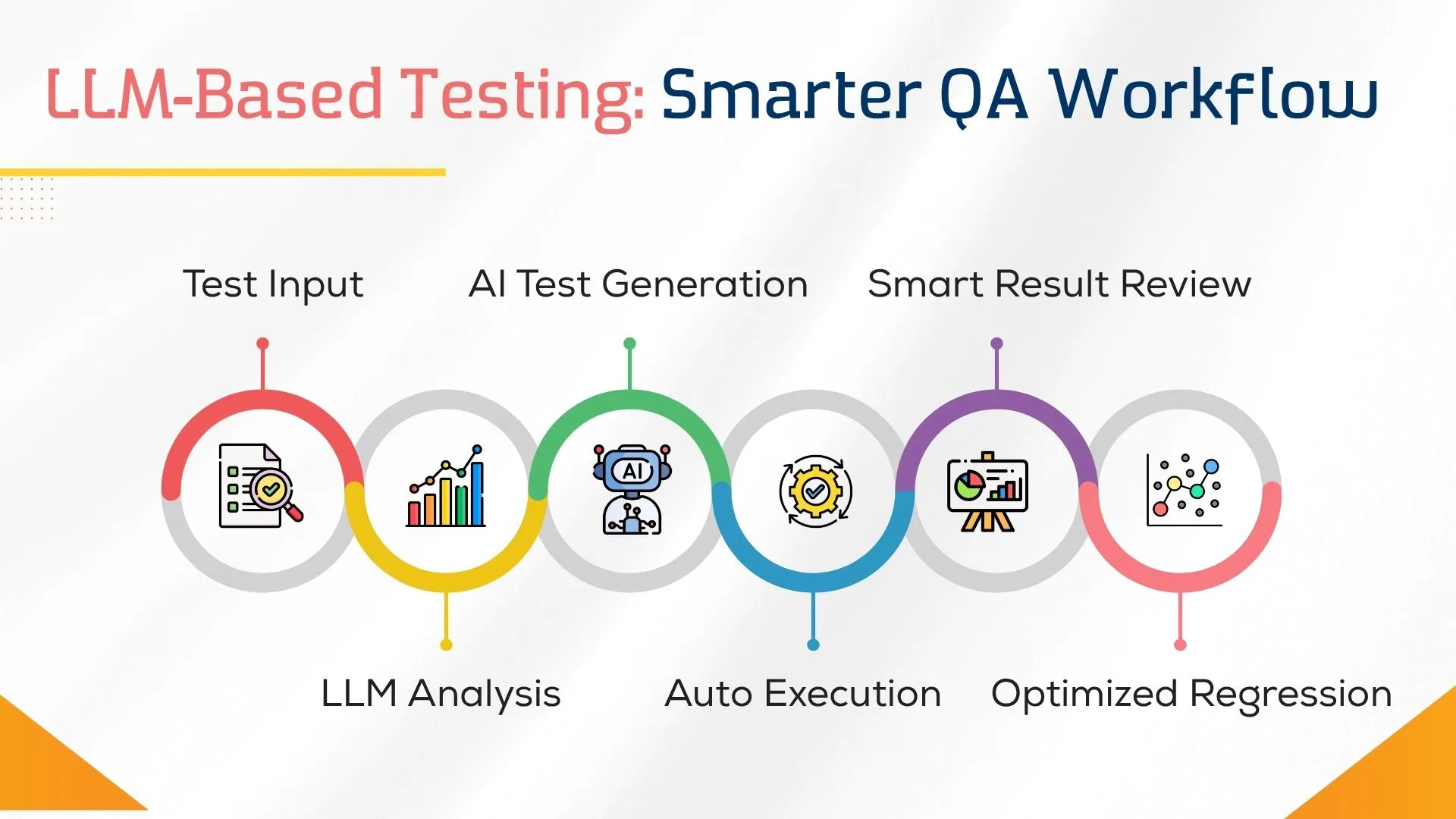

LLM-driven testing uses large language models (LLMs) such as GPT-4o to automate and enhance software testing workflows. Unlike traditional approaches, it relies on transformer-based models whose self-attention mechanisms capture context, which lets them generate test cases from natural-language requirements and compare outputs by semantic similarity rather than by exact text matches.

These AI testing tools streamline automated test generation, reduce manual effort, and can improve accuracy across software pipelines. By supporting data-driven testing insights and AI-driven test automation, LLMs help testing tools cope with complex logic, user interactions, and evolving product features.
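As a rough illustration of automated test generation, the sketch below asks a model for test cases in JSON and keeps only well-formed ones. The prompt wording, the `complete` callable, `fake_llm`, and `cart_total` are hypothetical stand-ins; a real setup would call an actual model API and have a tester review the generated cases before they join the suite.

```python
import json
from typing import Callable

# Hypothetical prompt template; the wording is an assumption, not a vendor-recommended prompt.
PROMPT_TEMPLATE = (
    "You are a QA engineer. Write test cases for the requirement below.\n"
    "Return only a JSON list of objects with keys 'name', 'input', and 'expected'.\n"
    "Requirement: {requirement}"
)
REQUIRED_KEYS = {"name", "input", "expected"}


def generate_test_cases(requirement: str, complete: Callable[[str], str]) -> list[dict]:
    """Ask a model for test cases and keep only well-formed ones."""
    raw = complete(PROMPT_TEMPLATE.format(requirement=requirement))
    try:
        cases = json.loads(raw)
    except json.JSONDecodeError:
        return []  # unparseable output is discarded, not repaired by guessing
    if not isinstance(cases, list):
        return []
    return [c for c in cases if isinstance(c, dict) and REQUIRED_KEYS.issubset(c.keys())]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns canned output, including one malformed case."""
    return json.dumps([
        {"name": "empty_cart_total", "input": [], "expected": 0},
        {"name": "single_item_total", "input": [19.99], "expected": 19.99},
        {"name": "missing_expected", "input": [1, 2]},
    ])


def cart_total(prices: list[float]) -> float:
    """Hypothetical function under test."""
    return round(sum(prices), 2)


cases = generate_test_cases("The cart total is the sum of item prices.", fake_llm)
for case in cases:
    assert cart_total(case["input"]) == case["expected"], case["name"]
```

Filtering malformed output before execution matters because model responses are not guaranteed to follow the requested format.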

LLM-based systems can also assist with regression suite optimization and fit into continuous integration practices. Whether the target is an e-commerce platform or a set of unit tests, LLMs can improve speed, precision, and long-term ROI through intelligent workflow automation.
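Regression suite optimization often starts with change-based test selection. The sketch below is a conventional heuristic rather than an LLM technique: it picks tests whose covered files overlap the changed files and ranks them by recent failures. `COVERAGE_MAP` and the failure counts are hypothetical; an LLM might help build or maintain such a mapping, but that role is an assumption here.

```python
# Hypothetical mapping from each test to the source files it exercises
# (in practice this might come from coverage reports).
COVERAGE_MAP = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_login": {"auth.py"},
    "test_search": {"search.py", "catalog.py"},
    "test_cart_badge": {"cart.py", "ui/header.py"},
}


def select_regression_tests(changed_files, coverage_map, recent_failures=None):
    """Return tests that touch any changed file, most frequently failing first."""
    recent_failures = recent_failures or {}
    changed = set(changed_files)
    selected = [test for test, files in coverage_map.items() if files & changed]
    # Higher failure count first; the test name breaks ties so the order is deterministic.
    return sorted(selected, key=lambda test: (-recent_failures.get(test, 0), test))
```

For example, `select_regression_tests(["cart.py"], COVERAGE_MAP, {"test_cart_badge": 3})` returns `["test_cart_badge", "test_checkout"]`, skipping the login and search tests entirely.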

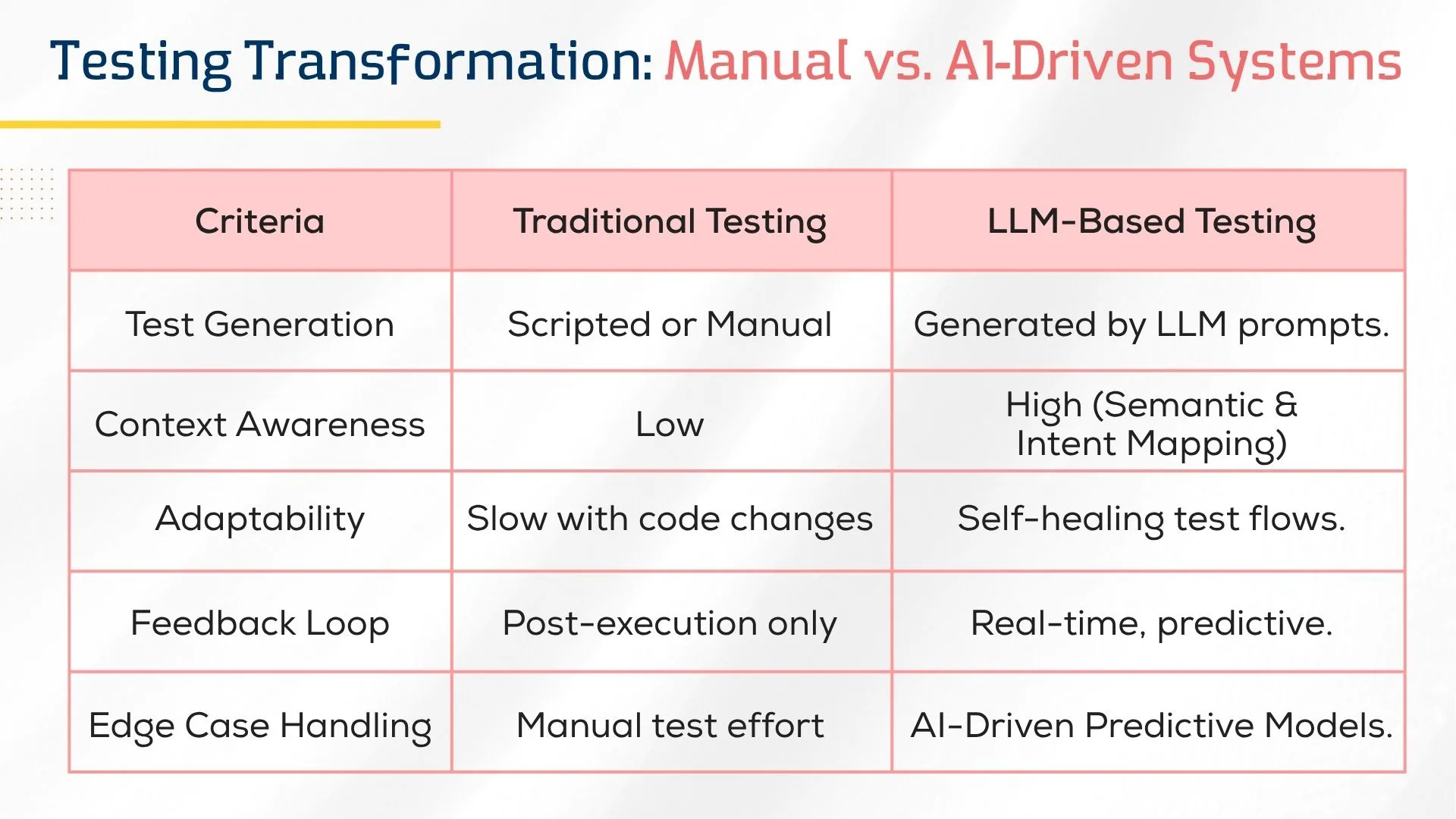

Key Differences Between Traditional and LLM Testing Approaches

Traditional vs. LLM testing methods vary significantly in execution, scalability, and intelligence. While both aim for high software quality assurance, their tools, workflows, and outcomes differ.

Key Differences:

- Testing Tools Used: Traditional QA relies on manual testing tools and script-based frameworks, while LLM approaches use AI-powered testing tools for automated software testing.

- Intelligence & Context: LLM testing applies language-understanding techniques such as entity recognition to generate tests dynamically and to validate context-dependent outputs, such as translations or chatbot responses in customer support automation.

- Scalability: LLMs support self-healing automation, in which tests adapt to changed UI elements, enabling faster updates in DevOps pipelines and large software testing services.

- Test Accuracy: LLMs can suggest edge cases that scripted suites miss and use predictive analysis to prioritize risky areas, improving test efficiency and ROI.
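Self-healing automation can be sketched with plain data structures. Assuming each element is recorded as a small attribute fingerprint (the dict shape, `PAGE` snapshot, and `min_score` threshold are hypothetical), the locator below tries the original `id` first and, if that fails, falls back to the element that shares most of the recorded attributes. Commercial tools and LLM-based approaches use richer signals; this only shows the fallback idea.

```python
from typing import Optional

# Hypothetical page snapshot after a UI change: the checkout button's id was renamed.
PAGE = [
    {"id": "search", "tag": "input", "class": "search-box", "text": ""},
    {"id": "place-order-btn", "tag": "button", "class": "btn primary", "text": "Place order"},
    {"id": "cancel-btn", "tag": "button", "class": "btn", "text": "Cancel"},
]

# Attributes recorded when the test was first written.
FINGERPRINT = {"id": "submit-btn", "tag": "button", "class": "btn primary", "text": "Place order"}


def find_element(page: list[dict], fingerprint: dict,
                 min_score: float = 0.5) -> tuple[Optional[dict], str]:
    """Locate an element by id, falling back to attribute similarity ("healing")."""
    # 1. Primary locator: exact id match.
    if "id" in fingerprint:
        for el in page:
            if el.get("id") == fingerprint["id"]:
                return el, "primary"

    # 2. Fallback: score candidates by the share of other recorded attributes that still match.
    keys = [k for k in fingerprint if k != "id"]
    if not keys or not page:
        return None, "not_found"

    def score(el: dict) -> float:
        return sum(el.get(k) == fingerprint[k] for k in keys) / len(keys)

    best = max(page, key=score)
    if score(best) >= min_score:
        return best, "healed"
    return None, "not_found"


element, status = find_element(PAGE, FINGERPRINT)
```

A healed match would typically be logged for review so the stored fingerprint is updated deliberately rather than silently.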

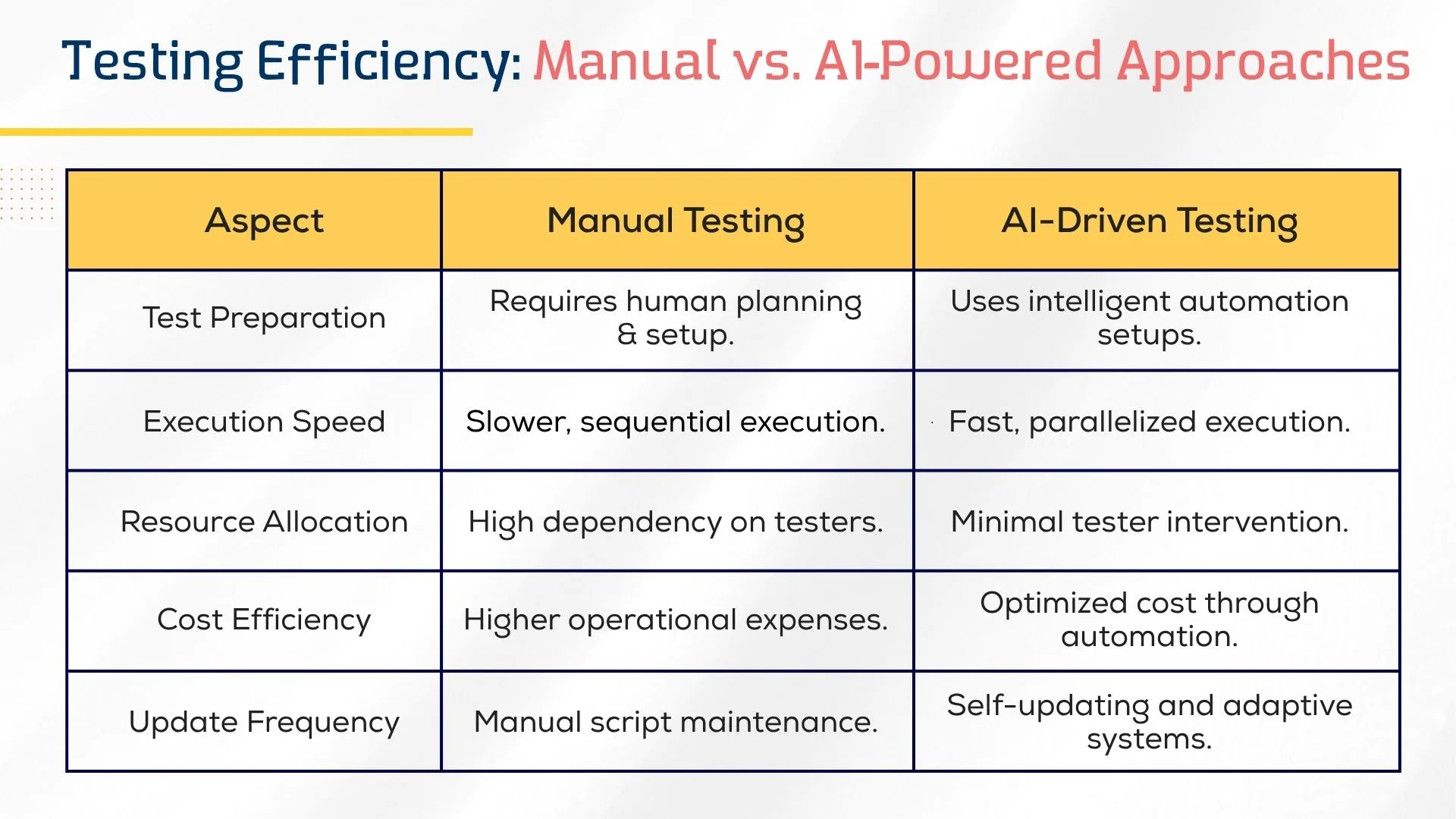

Time and Cost Comparison: Manual vs. AI-Driven Testing

Comparing manual testing with AI-driven test automation reveals critical differences in time and cost efficiency throughout the QA lifecycle. Traditional manual software testing often requires extensive tester involvement, leading to longer execution cycles, delayed feedback loops, and increased QA costs. These delays can slow down releases, especially when handling complex systems or multiple test environments.

In contrast, AI testing tools built on LLMs and other transformer-based models reduce manual effort and execution time through automated software testing and workflow automation. These tools accelerate delivery and can lower costs over time, which makes them well suited to scaling agile projects.

Modern AI automation testing approaches also help detect edge cases, run unit tests quickly, and scale testing across DevOps pipelines. Capabilities such as self-healing automation and regression suite optimization reduce maintenance and support smoother releases.

By automating repetitive and complex testing processes, teams can improve QA performance and shorten time-to-market. Return on investment tends to be higher with LLM-based testing in fast-paced, continuous deployment environments, where the upfront setup cost is spread across many release cycles.
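The payback argument can be made concrete with a simple break-even model. All figures below are hypothetical placeholders, not benchmarks: automation has an upfront setup cost plus a smaller per-cycle cost (maintenance, review, compute), while manual testing costs the same every cycle.

```python
import math
from typing import Optional


def break_even_cycles(setup_cost: float, manual_cost_per_cycle: float,
                      automated_cost_per_cycle: float) -> Optional[int]:
    """Number of release cycles before automation becomes cheaper than manual testing."""
    savings_per_cycle = manual_cost_per_cycle - automated_cost_per_cycle
    if savings_per_cycle <= 0:
        return None  # automation never pays back under these assumptions
    return math.ceil(setup_cost / savings_per_cycle)


def cumulative_costs(cycles: int, setup_cost: float, manual_cost_per_cycle: float,
                     automated_cost_per_cycle: float) -> tuple[float, float]:
    """Total (manual, automated) spend after a given number of cycles."""
    manual = cycles * manual_cost_per_cycle
    automated = setup_cost + cycles * automated_cost_per_cycle
    return manual, automated
```

With $12,000 of setup and per-cycle costs of $2,000 manual versus $400 automated, `break_even_cycles(12_000, 2_000, 400)` returns 8; teams that release less often take longer to reach that point.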

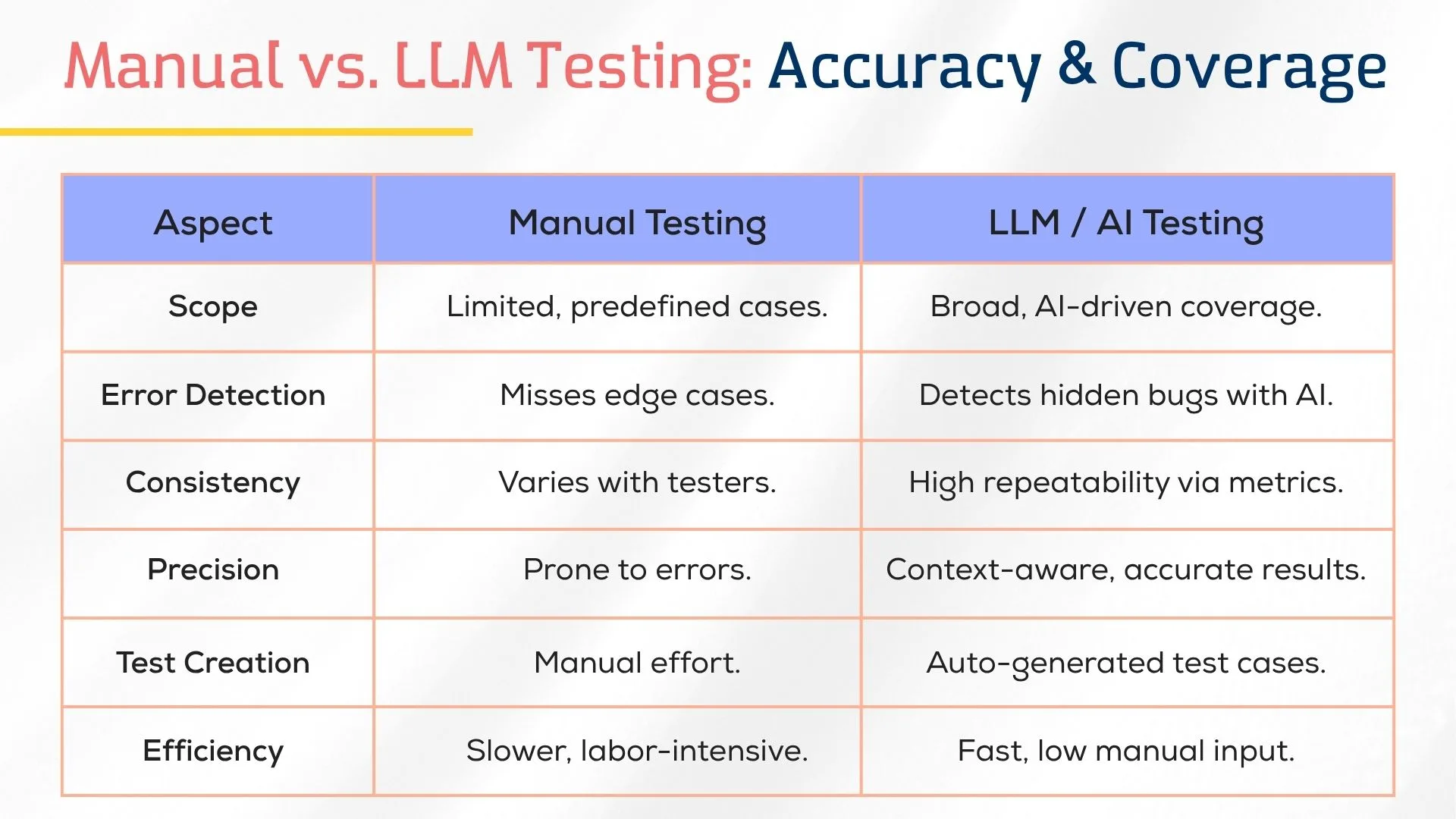

Accuracy and Coverage: Which Method Performs Better?

Accuracy and test coverage are crucial when evaluating manual testing versus AI-driven testing methods. While traditional QA offers control, AI in software testing provides depth, precision, and scalability.

- Error Detection: Manual testing often overlooks edge cases, whereas AI-powered testing tools utilize AI algorithms to identify hidden bugs and logic flaws.

- Coverage Scope: LLMs can analyze large volumes of requirements, logs, and content, and support automated test generation for complex user interactions.

- Consistency & Metrics: Evaluation frameworks such as OpenAI Evals and text-similarity metrics such as BLEU score make it possible to measure LLM-generated outputs in a repeatable, data-driven way.

- Efficiency: AI-powered QA testing delivers broader coverage with less manual effort, improving long-term ROI.
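To illustrate what a metric like BLEU actually measures, here is a minimal, unsmoothed sentence-level implementation with uniform weights and a brevity penalty. It scores n-gram overlap between a generated text and a reference, so it suits checking text outputs (for example, a generated confirmation message) rather than measuring test accuracy in general. For real evaluations, an established implementation such as sacreBLEU is a safer choice.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU with uniform weights and a brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        total = sum(cand_counts.values())
        if total == 0:
            return 0.0  # candidate is shorter than n tokens
        ref_counts = ngrams(ref, n)
        # Clip each candidate n-gram count by how often it appears in the reference.
        overlap = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        if overlap == 0:
            return 0.0  # without smoothing, any zero precision makes BLEU zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty discourages outputs shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)
```

Because unsmoothed 4-gram BLEU drops to zero for short partial matches, short outputs are often scored with a lower `max_n` or with smoothing.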

Test Automation Potential with LLMs

LLMs are changing test automation by enabling faster, smarter, and more scalable QA workflows. When integrated into software testing tools, LLMs generate test cases dynamically, reducing reliance on manual testing tools.

These models use transformer architectures and self-attention to understand natural language, which makes it possible to automate complex unit testing scenarios described in plain language.

LLMs support automated QA testing tools by streamlining regression suite optimization and minimizing human error. Their ability to judge semantic similarity and, in multimodal models, to process images and audio enables intelligent, context-aware testing. These capabilities put LLMs at the center of AI automation testing across software pipelines and quality assurance strategies.
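A semantic-similarity assertion can be sketched as follows. The `embed` function here is a deliberately simple placeholder (lowercase word counts), so it only captures word overlap; in practice it would be replaced by a transformer-based sentence-embedding model. The 0.6 threshold is an arbitrary assumption that would need tuning.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Placeholder 'embedding': lowercase word counts. Swap in a real embedding model in practice."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def assert_semantically_close(actual: str, expected: str, threshold: float = 0.6) -> float:
    """Fail when the actual output drifts too far from the expected meaning."""
    score = cosine_similarity(embed(actual), embed(expected))
    if score < threshold:
        raise AssertionError(f"similarity {score:.2f} below threshold {threshold}")
    return score
```

This lets a test accept "Password reset successfully for your account." when the expected text is "Your password has been reset successfully.", where an exact string comparison would fail.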

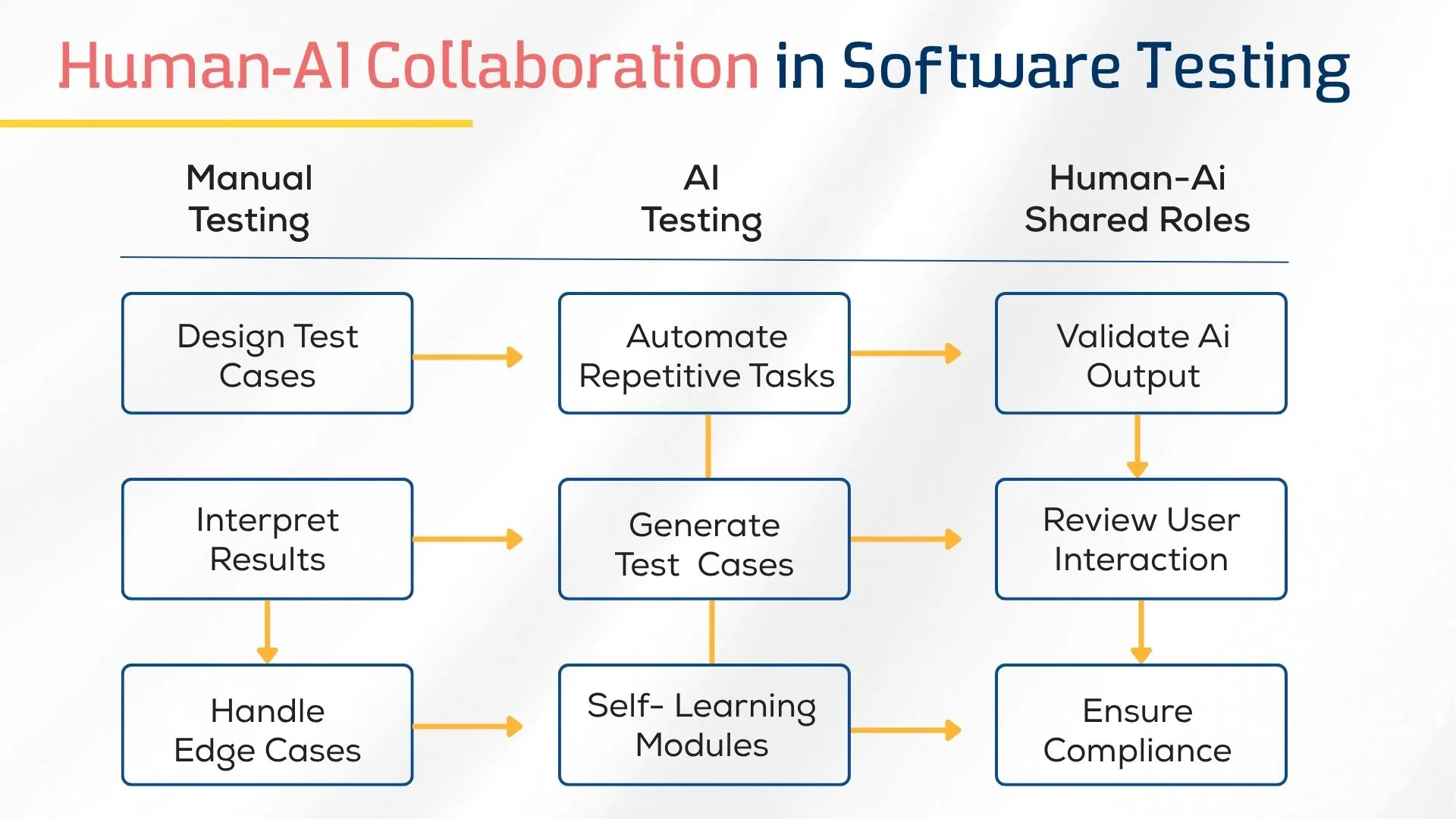

Human Involvement in Both Testing Methods

Despite advancements in AI automation testing, human involvement remains critical in both manual software testing and LLM-driven automation. In traditional software QA testing, human testers are responsible for designing test cases, interpreting results, and handling edge cases that require domain knowledge and intuition.

Meanwhile, AI in testing reduces repetitive tasks but still relies on QA professionals to supervise automated testing tools, validate the outputs of AI-powered testing tools, and guide automated test generation.

Testers are essential for reviewing user interactions, improving testing processes, and training LLM AI systems using real-world feedback. Even with transformer-based models, human oversight ensures better alignment with business logic, product features, and compliance needs in critical domains like financial risk models and legal document analysis.
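One way to keep humans in the loop is a review gate: AI-generated tests stay pending until a tester approves them, and rejection notes are kept as feedback for future generation. The class and field names below are hypothetical; the sketch shows the workflow, not any particular tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class GeneratedTest:
    name: str
    body: str
    status: Status = Status.PENDING
    reviewer: Optional[str] = None
    note: str = ""


class ReviewQueue:
    """Holds AI-generated tests until a human approves or rejects them."""

    def __init__(self) -> None:
        self._tests: dict[str, GeneratedTest] = {}

    def submit(self, test: GeneratedTest) -> None:
        self._tests[test.name] = test

    def review(self, name: str, reviewer: str, approve: bool, note: str = "") -> None:
        test = self._tests[name]
        test.status = Status.APPROVED if approve else Status.REJECTED
        test.reviewer = reviewer
        test.note = note

    def approved_suite(self) -> list[GeneratedTest]:
        """Only approved tests are promoted into the regression suite."""
        return [t for t in self._tests.values() if t.status is Status.APPROVED]

    def feedback(self) -> list[tuple[str, str]]:
        """Rejected tests with reviewer notes, e.g. to refine future prompts."""
        return [(t.name, t.note) for t in self._tests.values() if t.status is Status.REJECTED]
```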

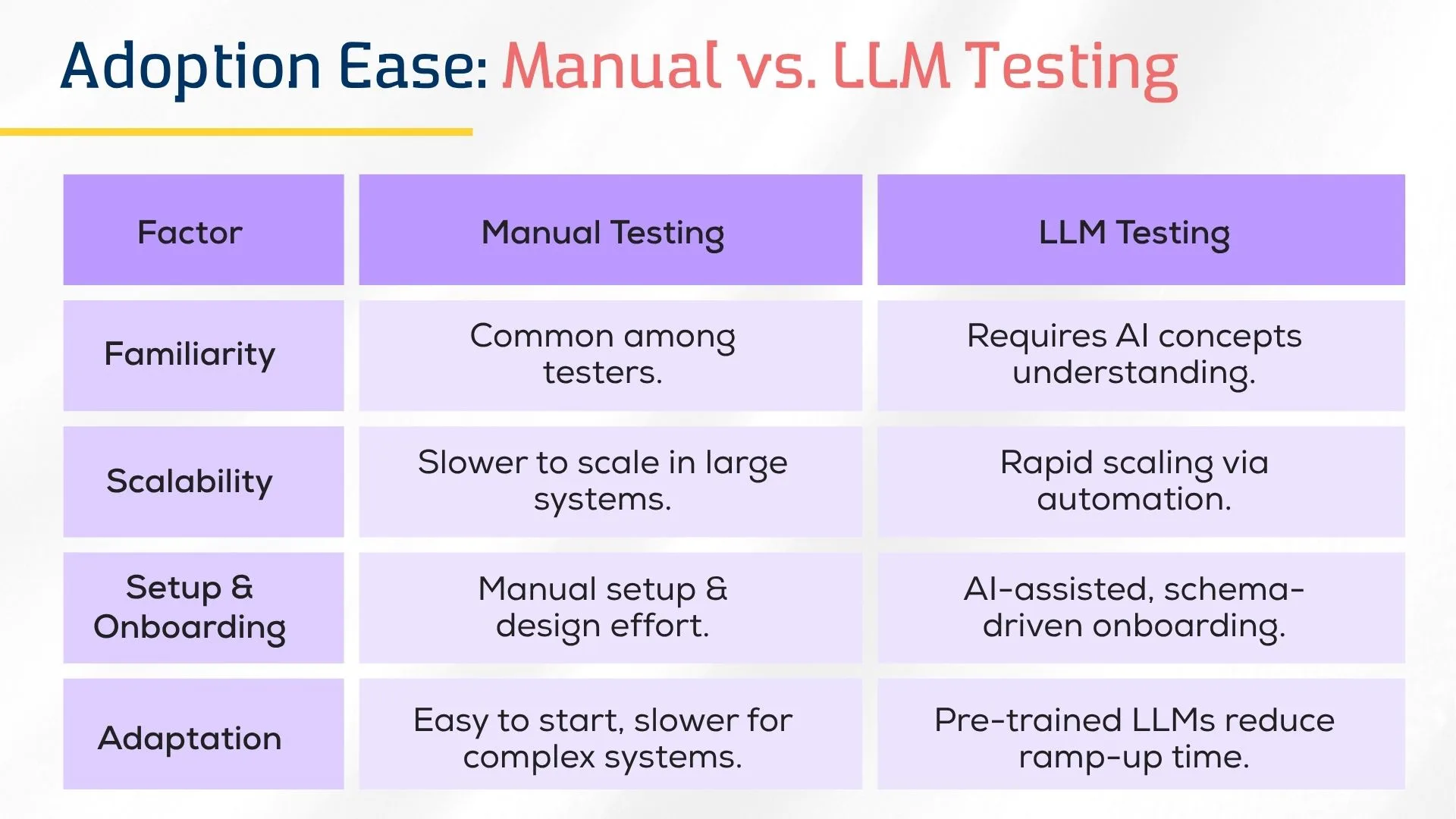

Learning Curve: Which Testing Approach Is Easier to Adopt?

Adopting a new testing method depends heavily on the learning curve for QA teams. Both manual testing and LLM-driven testing offer unique challenges and advantages when it comes to ease of adoption.

Learning Curve Factors:

- Manual Testing: Familiar to most testers and supported by widely used manual testing tools, but it becomes complex in large software testing service environments.

- LLM Testing: Requires an understanding of LLMs, AI testing tools, and transformer-based models, but can speed up onboarding through schema generators and workflow automation.

- Training & Adaptation: LLMs are pre-trained on large web corpora such as Common Crawl, so they bring general knowledge that can reduce onboarding time for AI-powered testing tools in modern QA pipelines.

ROI Metrics for Evaluating Testing Performance

Modern software testing demands a balance between speed, cost, and quality. Manual testing with traditional tools increases tester effort and slows releases, whereas AI automation testing built on LLMs and other transformer-based models improves scalability and speed. Platforms such as Hugging Face provide models for sentiment analysis and other natural language processing tasks that AI-powered testing tools can build on, including for regression testing and smarter decision-making.

AI-driven test automation frameworks significantly improve software QA testing by reducing human effort, enhancing bug detection, and aligning with regulatory compliance standards.

Key Metrics & ROI Highlights

- 30%+ improvement in early bug detection across DevOps pipelines

- 40–60% cost savings vs. manual testing using AI testing tools

- 3x faster execution through parallel runs in test automation frameworks

- Higher customer satisfaction via predictive analysis and continuous deployment

- Long-term ROI growth through large language model optimization and smarter edge case coverage
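The metrics above can be computed with a few simple formulas. The sketch below uses the standard ROI ratio (net gain over investment), defect detection percentage (share of known defects found before release), and a relative-improvement helper; all input numbers in the usage note are hypothetical.

```python
def roi(total_benefit: float, total_investment: float) -> float:
    """Return on investment as a fraction: (benefit - investment) / investment."""
    if total_investment <= 0:
        raise ValueError("investment must be positive")
    return (total_benefit - total_investment) / total_investment


def defect_detection_percentage(found_before_release: int, found_after_release: int) -> float:
    """Share of all known defects that were caught before release, in percent."""
    total = found_before_release + found_after_release
    return 100.0 * found_before_release / total if total else 0.0


def relative_improvement(before: float, after: float) -> float:
    """Relative change between two measurements, e.g. 0.3 for a 30% improvement."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before
```

For example, a $20,000 investment that yields $32,000 in savings gives an ROI of 0.6 (60%), and raising defect detection percentage from 60% to 78% is a 30% relative improvement.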

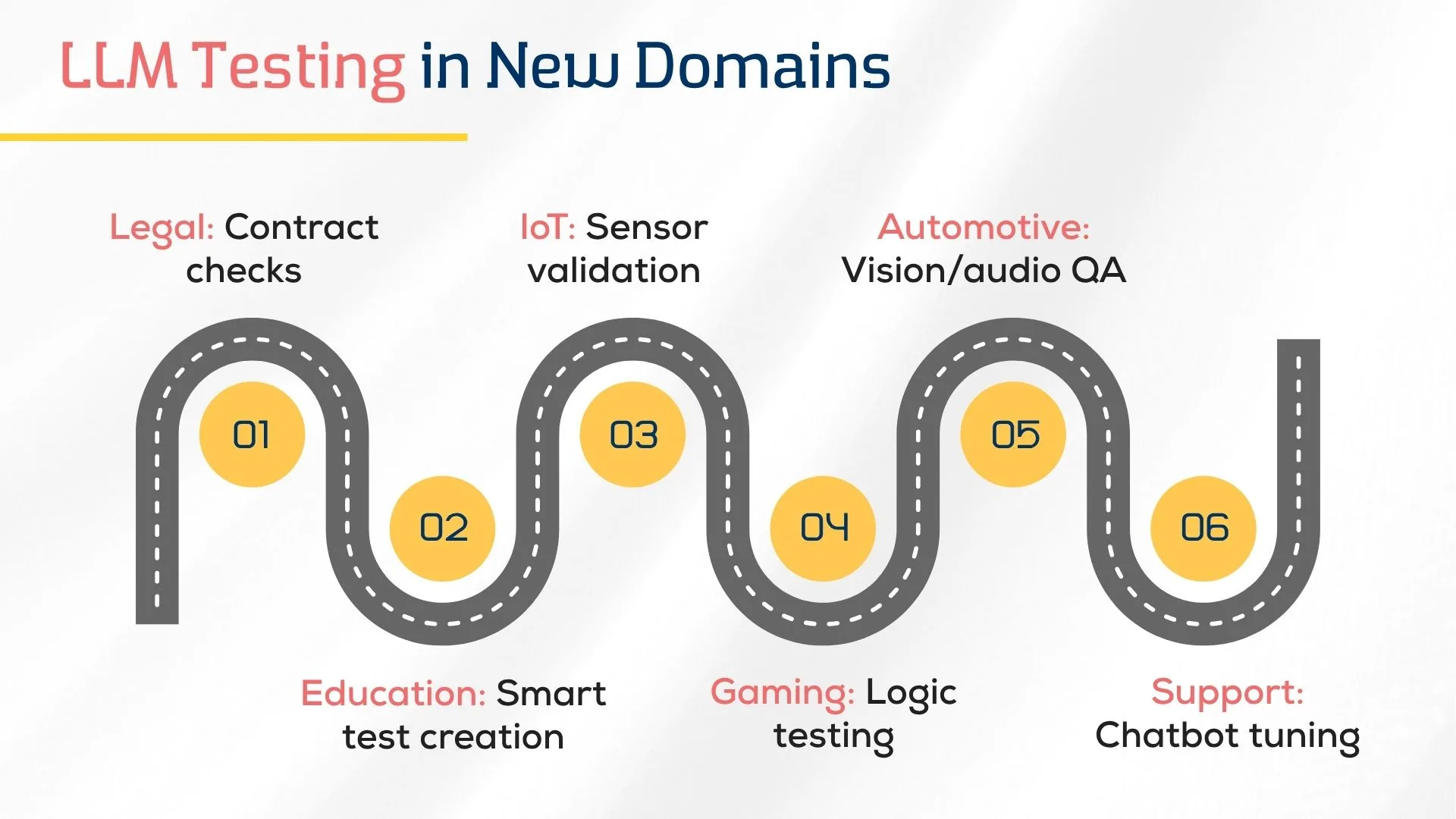

Real-World Use Cases of LLM-Based Testing

LLM-based testing is transforming software QA testing by enabling intelligent automation at scale. By leveraging AI automation tools and large language model optimization, organizations can enhance testing speed, accuracy, and compliance.

Below are some impactful real-world use cases:

- Healthcare Application Testing: LLMs can analyze symptom data, training data, and regulatory compliance standards to generate test scenarios automatically, improving software validation in medical systems.

- Finance and Wealth Management Platforms: AI testing tools apply sentiment analysis and financial risk models to evaluate platform stability under varying market conditions, improving predictive testing and reducing failures.

- E-commerce Platform Optimization: Multimodal large language models assist in adapting tests for UI changes and user interactions, improving performance and overall return on investment.

- Video Game Design & Testing: Transformer-based deep learning models automate complex logic and performance validation in immersive game environments, ensuring quality assurance across platforms.

- Legal Document Analysis: Natural language understanding (NLU) enables testing tools to verify contract clauses, legal compliance, and risk exposure through contextual translation and entity recognition.

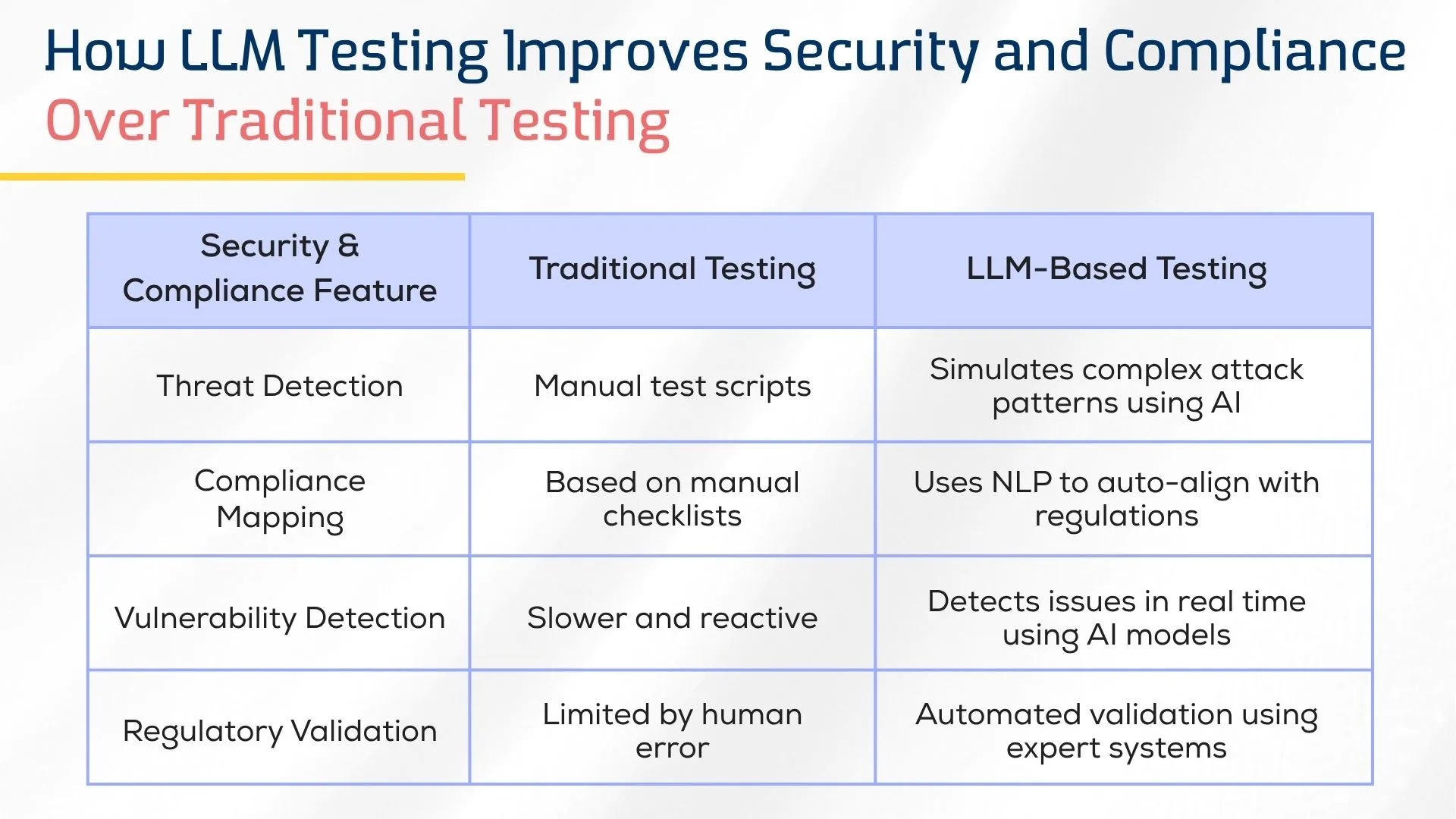

Security and Compliance in LLM vs. Traditional Testing

Ensuring security and regulatory compliance is crucial in any software testing strategy. Traditional testing relies on predefined test data and manual testing tools, which may lack scalability for modern cybersecurity threat detection. LLM-driven testing enhances this by leveraging AI automation testing and natural language understanding to simulate complex attack vectors and data breaches.

AI-powered testing tools built on Hugging Face models and generative AI can help automated QA pipelines detect vulnerabilities faster. These models support regression testing, natural language processing, and test automation frameworks in validating software against regulatory standards.

- NLP models from Hugging Face can help flag vulnerabilities and cybersecurity threats in real time.

- Generative AI creates attack scenarios to boost AI automation testing accuracy.

- Predictive analysis enhances regression testing by identifying potential data breaches.

- AI-powered testing tools can automate checks that support compliance with GDPR and ISO 27001.

- Expert systems combined with multimodal LLMs can strengthen security validation in QA testing.
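As a sketch of payload-driven security testing, the example runs attack-style inputs against a validator and reports any that are wrongly accepted. `is_valid_username` and its allow-list rule are hypothetical; the payload list is hand-written here, though in an LLM-assisted workflow it could be generated and then reviewed by a tester.

```python
import re

# Hypothetical validator under test: 3-20 characters of letters, digits, '_' or '-'.
USERNAME_RE = re.compile(r"[A-Za-z0-9_-]{3,20}")


def is_valid_username(value: str) -> bool:
    # fullmatch avoids partial matches that would let trailing payloads through
    return USERNAME_RE.fullmatch(value) is not None


# Attack-style inputs (SQL injection, XSS, path traversal, oversized and control-character input).
ADVERSARIAL_INPUTS = [
    "admin' OR '1'='1",
    "<script>alert(1)</script>",
    "../../etc/passwd",
    "robert'); DROP TABLE users;--",
    "a" * 500,
    "user\nname",
    "",
]


def run_security_checks(validator, payloads):
    """Return payloads the validator wrongly accepted (should be empty)."""
    return [p for p in payloads if validator(p)]
```

Running `run_security_checks(is_valid_username, ADVERSARIAL_INPUTS)` in CI turns each payload into a regression check: any accepted payload shows up in the returned list.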

Challenges in Adopting LLM-Driven Testing

While LLMs are reshaping software testing, adopting them presents specific challenges for teams moving from manual software testing to AI automation testing.

Challenge: Lack of domain-specific training data limits the accuracy of LLM testing models.

Solution: Generative AI and AI-powered search can help build customized, domain-specific test data sets that improve contextual accuracy.
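A minimal sketch of domain-specific synthetic test data: the generator below mixes ordinary values with known edge cases and uses a fixed seed so runs are reproducible. The `Transaction` fields, vocabularies, and edge-case ratio are hypothetical, and it uses Python's `random` module rather than generative AI; in practice a model might propose the domain vocabulary and edge cases, which a domain expert would then review.

```python
import random
from dataclasses import dataclass


@dataclass
class Transaction:
    account_id: str
    amount: float
    currency: str
    country: str


# Illustrative domain vocabulary and edge-case amounts (hypothetical values).
CURRENCIES = ["USD", "EUR", "INR", "JPY"]
COUNTRIES = ["US", "DE", "IN", "JP"]
EDGE_AMOUNTS = [0.0, 0.01, 999_999.99]


def synthesize_transactions(n: int, seed: int = 42, edge_ratio: float = 0.2) -> list[Transaction]:
    """Generate n reproducible transactions, roughly edge_ratio of them with edge-case amounts."""
    rng = random.Random(seed)  # fixed seed keeps test data identical across runs
    records = []
    for i in range(n):
        if rng.random() < edge_ratio:
            amount = rng.choice(EDGE_AMOUNTS)
        else:
            amount = round(rng.uniform(1, 5000), 2)
        records.append(Transaction(
            account_id=f"ACC{i:05d}",
            amount=amount,
            currency=rng.choice(CURRENCIES),
            country=rng.choice(COUNTRIES),
        ))
    return records
```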

Challenge: Ensuring regulatory compliance across industries.

Solution: Advanced natural language understanding, combined with evaluation frameworks such as OpenAI Evals, can help automate validation against legal and compliance standards.

Challenge: Complexity in integrating with existing test automation frameworks.

Solution: Tools built on multimodal large language models can streamline integration and improve monitoring of UI changes within software pipelines.

Conclusion: Which Testing Approach Yields Better ROI?

Choosing between manual software testing and LLM-driven AI testing depends on testing scope, automation maturity, and organizational goals. That said, AI automation tools and LLMs often deliver stronger ROI through faster release cycles, higher test coverage, and lower QA costs.

By leveraging natural language processing, automated test generation, and AI-driven test automation, teams can streamline regression testing and improve software quality assurance.

Additionally, AI testing tools built on neural networks and machine translation enable scalable, multilingual testing for global applications. With models and tooling from platforms such as Hugging Face, LLM evaluation can improve ROI, particularly in complex, dynamic environments like e-commerce platforms and customer support automation systems.

Frugal Testing is a leading software testing company in Hyderabad offering AI-driven test automation services, functional testing services, and load testing services at competitive pricing.

People Also Ask

1. Is LLM-based testing compliant with industry regulations?

Yes, LLM-based testing can align with regulatory compliance standards when combined with secure AI testing tools and validated automation frameworks.

2. When to use a data-driven framework?

Use a data-driven framework when test scenarios require multiple input variations to ensure robust coverage and accurate software testing results.
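A data-driven test keeps the logic fixed and varies only the data. In the sketch below, `shipping_fee` and its rules are hypothetical; each row in `CASES` is one scenario, and `subTest` reports failing rows individually. The table could equally be loaded from a CSV file or spreadsheet.

```python
import unittest


def shipping_fee(order_total: float, country: str) -> float:
    """Hypothetical function under test: free shipping from 50, otherwise a flat fee."""
    if order_total >= 50:
        return 0.0
    return 5.0 if country == "US" else 15.0


# Each row is one scenario: (order_total, country, expected_fee).
CASES = [
    (0.0, "US", 5.0),
    (49.99, "US", 5.0),
    (50.0, "US", 0.0),
    (20.0, "DE", 15.0),
    (120.0, "IN", 0.0),
]


class ShippingFeeTest(unittest.TestCase):
    def test_shipping_fee_table(self):
        for total, country, expected in CASES:
            # subTest keeps going after a failing row and labels it with its inputs
            with self.subTest(total=total, country=country):
                self.assertEqual(shipping_fee(total, country), expected)
```

Saved as a module, it can be run with `python -m unittest <module_name>`; adding a scenario means adding a row, not a new test method.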

3. How do traditional testers transition to using LLM-based tools?

Traditional testers can adopt LLM-based tools by learning natural language prompts, exploring automation testing tools, and gradually integrating AI-driven test cases.

4. Are traditional testing methods better for exploratory testing?

Yes, manual software testing methods often provide greater flexibility and insight for exploratory testing, especially in ambiguous test scenarios.

5. Do LLM-based tools support integration with CI/CD pipelines?

Yes. Most modern LLM-based testing tools are designed to integrate with CI/CD pipelines to support continuous testing and delivery.
