Desktop and cross-platform applications remain at the core of many mission-critical workflows. From engineering suites on Windows 10 and Windows 11 to creative tools on macOS, ensuring rock-solid functionality, performance, and security is non-negotiable.

In this deep dive, we’ll explore software testing and QA best practices for 2025, covering both automated and manual approaches, integrating them into Continuous Integration workflows, reviewing popular test-automation tools, and outlining the software testing life cycle. Let’s dive in.

💡Key Insights from this blog

📌 Explore the top desktop app testing tools of 2025.

📌 Learn best practices for automation, manual testing, CI/CD, and quality assurance in desktop environments.

📌 Understand each phase of the software testing life cycle, from planning to test closure.

📌 Gain valuable insights on testing apps across different platforms.

What is a Desktop Application

A desktop application is software installed and run on a local system, using the device's resources. Unlike web or mobile apps, desktop apps aren’t confined to a specific domain; they serve a wide range of purposes, from photo editing (e.g., Adobe Photoshop) to software development (e.g., IntelliJ).

Despite the rise of web and mobile apps, many users still prefer desktop applications. Here's why:

- System-Level Access: Desktop apps can interact deeply with the operating system, enabling powerful features like custom processes.

- Offline Capability: Most modern desktop apps offer core features without needing constant internet access.

- Performance Powerhouse: Ideal for resource-heavy tasks like video editing or gaming due to direct access to system memory and CPU.

- Enhanced Security: Data is stored locally, reducing cloud-based vulnerabilities and exposure to online threats.

- Greater Stability: Desktop apps are less affected by frequent changes (e.g., browser updates), ensuring a more consistent experience.

For enterprise solutions and professional-grade software, desktop applications remain a reliable and efficient choice.

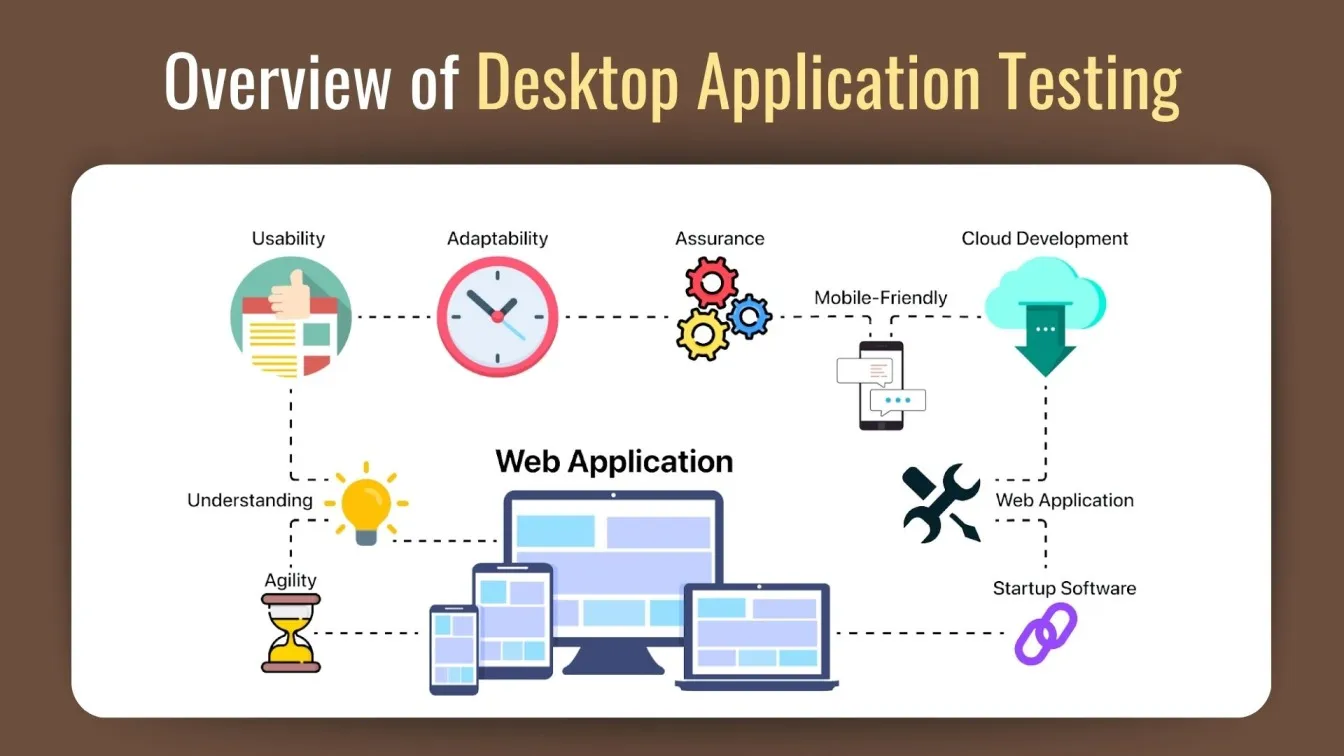

What Is Desktop Application Testing?

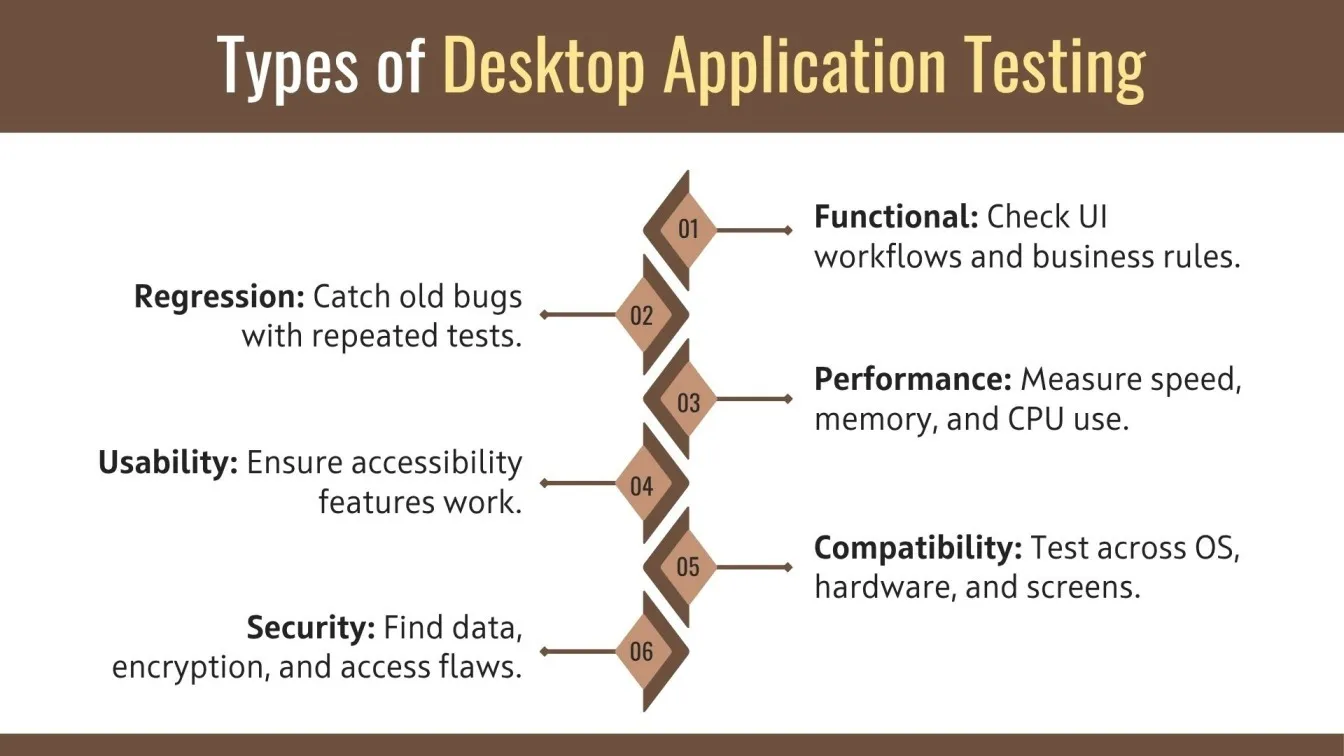

At its core, desktop application testing means validating the functionality, performance, security, and usability of native programs on an operating system, whether Windows 10, Windows 11, or macOS. It encompasses:

- Functional Testing: Verifying UI workflows, menus, dialogs, file operations, and business rules.

- Performance Testing: Measuring startup time, memory usage, CPU spikes, and responsiveness.

- Compatibility Testing: Ensuring consistent behavior across multiple OS versions, hardware profiles, and screen resolutions.

- Security Testing: From application security testing to dynamic application security testing (DAST) and application penetration testing, detecting vulnerabilities in local data handling, encryption, and privilege controls.

- Usability & Accessibility: Confirming that keyboard navigation, screen readers, high-contrast themes, and other accessibility features work as intended.

- Regression Testing: Preventing reintroduction of old bugs with robust automation testing and manual regression suites.

By embedding these types of application testing early in the development cycle, teams turn findings into actionable insights, elevating both user experience and overall product quality.
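To make the performance bullet concrete, here is a minimal sketch of timing how long a process takes to launch and exit. It assumes your app can be started from a command line and exits on its own once startup completes; the command used below is only a stand-in (the Python interpreter), and `measure_startup` is a hypothetical helper name.

```python
import statistics
import subprocess
import sys
import time


def measure_startup(command, runs=5):
    """Return the median wall-clock seconds for `command` to run to completion."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises CalledProcessError on a non-zero exit status.
        subprocess.run(command, check=True, capture_output=True)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


# Stand-in command: start the Python interpreter and exit immediately.
# Swap in your app's launch command (an assumption about your setup).
median_seconds = measure_startup([sys.executable, "-c", "pass"], runs=3)
print(f"median startup: {median_seconds:.3f}s")
```

Taking the median of several runs, rather than a single reading, dampens the noise from disk caching and background processes.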

Key Differences Between Desktop, Web, and Mobile Testing

Testing approaches vary significantly across desktop, web, and mobile platforms due to differences in environments, tools, UI frameworks, and performance concerns. Here's a quick comparison to highlight what sets each apart.

Compared with web or mobile testing, desktop software testing often demands deeper technical expertise, especially for applications that interact with the file system, drivers, and local services.

Manual vs. Automated Desktop Testing: Pros and Cons

Choosing between manual and automated desktop testing depends on the project’s goals, complexity, and testing frequency. While manual testing offers human insight, automated testing brings speed and scalability. A balanced approach often yields the best results - using manual testing for new features and UX checks, and automation for regression and performance testing.

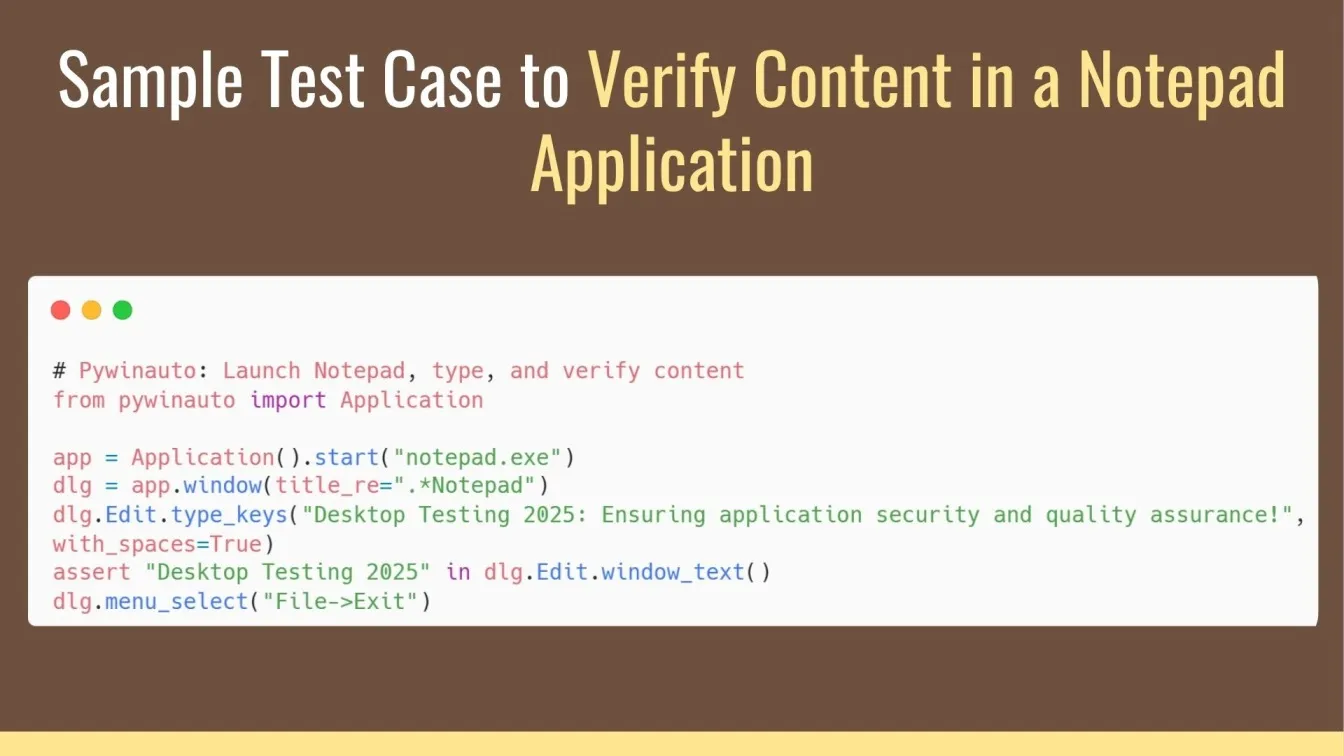

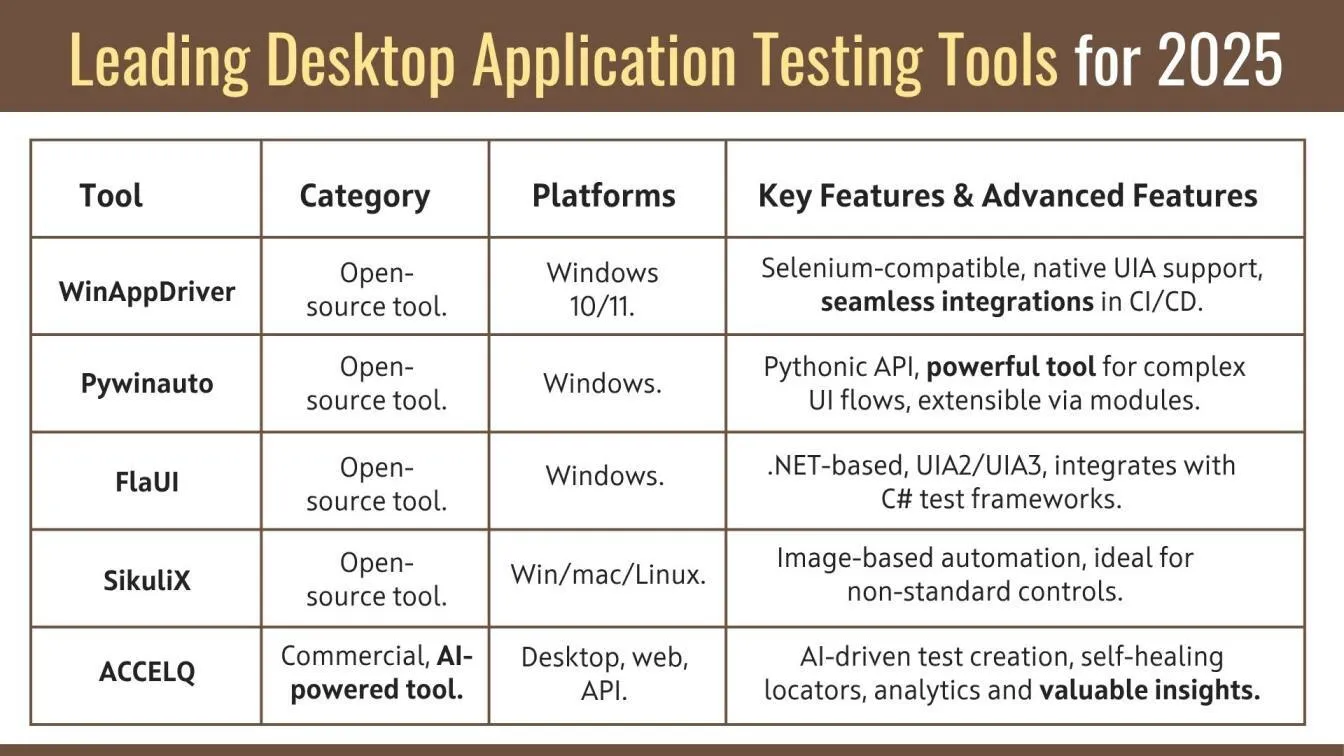

Top Desktop Application Testing Tools in 2025

Here’s a wide range of leading software testing tools, from open-source tools to AI-powered tools and enterprise platforms. Each maps to different project requirements, budgets, and technology stacks.

Having a mix of open-source tools and commercial suites allows teams to meet project requirements while controlling costs and leveraging automation testing services.

The Process of Desktop Application Testing

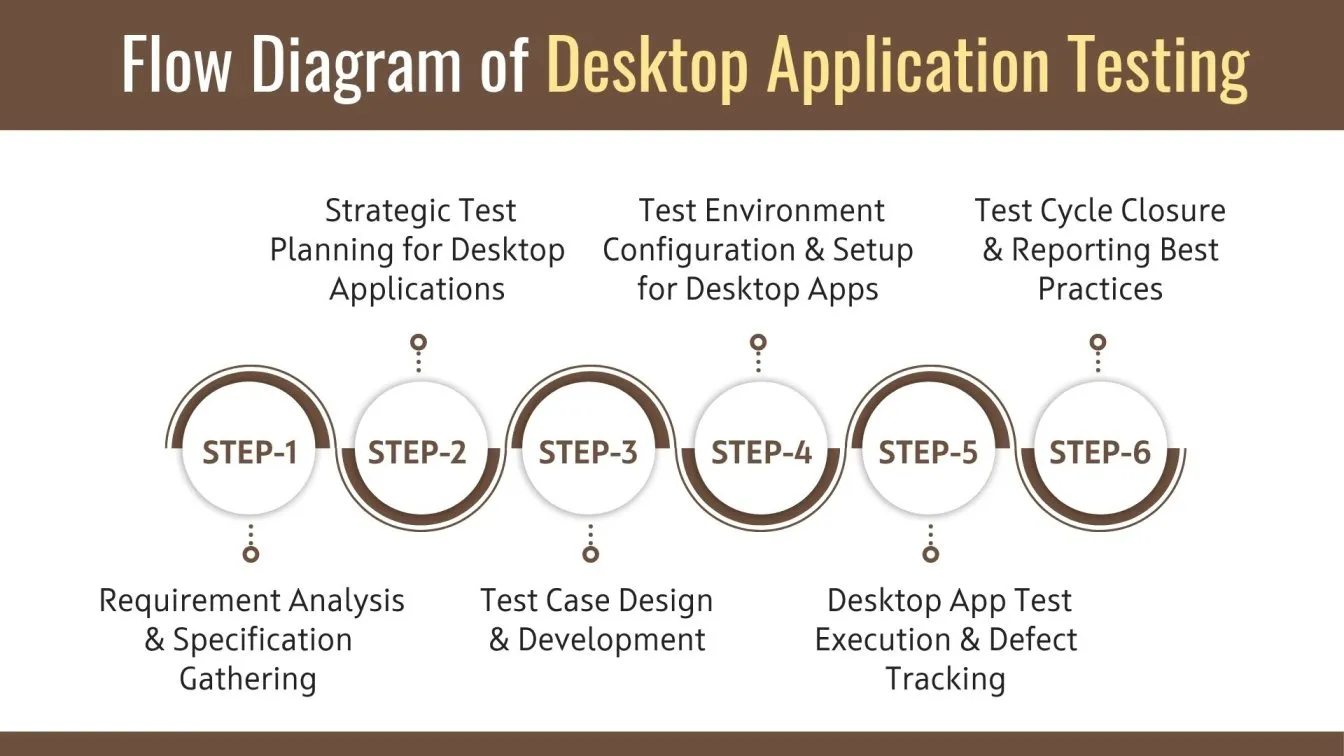

Desktop application testing involves a structured approach to ensure stability, performance, and usability. By integrating Continuous Integration, automation testing, and thorough documentation, teams can streamline the process and catch issues early. Here's a six-stage software testing life cycle tailored specifically for desktop applications:

Stage 1: Requirement Analysis & Specification Gathering

Gather and document key functional and non-functional requirements, define scope, and set entry criteria.

- Functional Specs & UI Design: Catalog key features, user stories, and mockups.

- Non-functional Needs: Define performance thresholds, accessibility goals, and application security testing requirements (including application pen testing).

- Define Scope & Entry Criteria: Identify modules, third-party dependencies, file I/O paths, and supported OS versions.

Stage 2: Strategic Test Planning for Desktop Applications

Develop a comprehensive test strategy, choose tools, assign resources, schedule cycles, and identify risks.

- Test Strategy Document: Outline types of software testing—unit, integration, system, regression, DAST, and pen testing.

- Tool & Environment Matrix: Map automation testing tools to stacks (e.g., WPF → TestComplete; Qt → SikuliX).

- Resource & Timeline: Assign quality assurance specialists and quality assurance engineers, schedule test cycles in sprints, and integrate with the development cycle.

- Risk Assessment: Identify high-risk areas (installers, licensing, auto-update) for early dynamic application security testing.
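A tool and environment matrix can live in code so the plan and the pipeline stay in sync. The sketch below is illustrative only: the stack-to-tool pairs repeat the examples from the list above, while `OS_VERSIONS`, `SUITES`, and `build_matrix` are hypothetical names chosen for this example.

```python
from itertools import product

# Mapping from UI stack to automation tool, using the pairings
# named in the planning step above.
TOOL_BY_STACK = {"WPF": "TestComplete", "Qt": "SikuliX"}

# Hypothetical targets; replace with your supported OS versions and suites.
OS_VERSIONS = ["Windows 10", "Windows 11"]
SUITES = ["regression", "security"]


def build_matrix(stack):
    """Return one planned run per (OS, suite) pair for the given UI stack."""
    tool = TOOL_BY_STACK[stack]
    return [
        {"stack": stack, "tool": tool, "os": os_name, "suite": suite}
        for os_name, suite in product(OS_VERSIONS, SUITES)
    ]


matrix = build_matrix("WPF")
for run in matrix:
    print(run)
```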

Stage 3: Test Case Design & Development

Create detailed, maintainable test cases and automate security tests using data-driven frameworks.

- Test Case Template: Include ID, description, preconditions, steps, expected results, and priority.

- Data-Driven & Keyword-Driven Frameworks: Store test inputs and expected results in external data files (CSV, JSON) so cases can change without touching test code.

- Page-Object & Adapter Patterns: Encapsulate UI actions in classes for maintainability.

- Security Test Scripts: Automate DAST scans with OWASP ZAP (for any HTTP endpoints the app calls) and integrate application penetration testing scripts.
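The template, data-driven, and page-object ideas above fit together naturally. Here is a rough sketch under simplifying assumptions: the test data is inlined as a JSON string rather than read from a file, and `FakeDriver` is an in-memory stand-in for a real UI automation driver. All class and function names are hypothetical.

```python
import json
from dataclasses import dataclass

# Test data kept apart from test logic; in practice this would be a JSON
# or CSV file. The fields mirror the template described above.
CASES_JSON = """
[
  {"id": "TC-001", "description": "Add two numbers",
   "preconditions": ["Calculator is open"],
   "steps": [["enter", "2"], ["press", "+"], ["enter", "3"], ["press", "="]],
   "expected": "5", "priority": "high"}
]
"""


@dataclass
class CaseSpec:
    id: str
    description: str
    preconditions: list
    steps: list
    expected: str
    priority: str


class FakeDriver:
    """In-memory stand-in for a real UI automation driver (hypothetical)."""

    def __init__(self):
        self.keys = []
        self.display = ""

    def send(self, key):
        if key == "=":
            left, op, right = self.keys
            if op != "+":
                raise ValueError(f"unsupported operator: {op}")
            self.display = str(int(left) + int(right))
            self.keys = []
        else:
            self.keys.append(key)


class CalculatorWindow:
    """Page object: tests call these methods, never the driver directly."""

    def __init__(self, driver):
        self._driver = driver

    def enter(self, value):
        self._driver.send(value)

    def press(self, button):
        self._driver.send(button)

    def result(self):
        return self._driver.display


def run_case(case, window):
    """Replay each (action, argument) step, then compare with the expected result."""
    for action, argument in case.steps:
        getattr(window, action)(argument)
    return window.result() == case.expected


cases = [CaseSpec(**raw) for raw in json.loads(CASES_JSON)]
results = {case.id: run_case(case, CalculatorWindow(FakeDriver())) for case in cases}
print(results)
```

Because the steps only name page-object methods, swapping `FakeDriver` for a real driver should not require changes to the data file.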

Stage 4: Test Environment Configuration & Setup for Desktop Apps

Prepare clean test environments with necessary runtimes, drivers, and CI/CD pipelines for reliable testing.

- Infrastructure as Code: Use Vagrant, Docker (for Linux GUI), and Hyper-V to spin up clean VMs on Windows 10/11.

- Install Prerequisites: .NET runtimes, Java, database clients, fonts, and drivers.

- Seed Test Data: Local DB snapshots, config files, and user profiles per project requirements.

- CI/CD Pipelines: Jenkins, Azure DevOps, or GitHub Actions with dedicated desktop test agents.
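Before a suite runs, it helps to confirm the agent actually has its prerequisites. The following is a small sketch that checks whether required executables are on `PATH`; the `REQUIRED` list is an assumption and should be replaced with whatever your app really needs.

```python
import platform
import shutil


def missing_prerequisites(required):
    """Return the executables from `required` that are not found on PATH."""
    return [name for name in required if shutil.which(name) is None]


# Hypothetical prerequisite list; adjust to your app's actual runtimes.
REQUIRED = ["dotnet", "java"]

missing = missing_prerequisites(REQUIRED)
print(f"OS: {platform.system()} {platform.release()}")
print("missing:", missing or "none")
```

Running a check like this as the first pipeline step lets a misconfigured agent fail quickly with a clear message instead of producing confusing test failures later.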

Stage 5: Desktop App Test Execution & Defect Tracking

Execute automated and exploratory tests, collect artifacts, and track defects with clear logging and management.

- Automated Suites: Schedule nightly automation testing runs covering functional, performance, and security tests.

- Manual Exploratory Testing: Empower QA engineers to explore edge cases and cover areas such as usability and compatibility.

- Capture Artifacts: Screenshots, event logs, memory dumps for post-mortem actionable insights.

- Defect Logging & Management: Use Jira, Azure Boards, or TFS. Tag issues by severity, area, and OS, and link them to test scripts for traceability.
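One way to keep defect tagging consistent is to build the record in code before it is sent to a tracker. This sketch does not call any tracker API; `DefectRecord` and its label format are assumptions made for illustration.

```python
import platform
from dataclasses import dataclass, field


@dataclass
class DefectRecord:
    title: str
    severity: str   # e.g. "critical", "high", "medium", "low"
    area: str       # e.g. "installer", "licensing", "auto-update"
    test_id: str    # links the defect back to the failing test script
    os_name: str = field(default_factory=platform.system)
    artifacts: list = field(default_factory=list)

    def labels(self):
        """Tags a tracker could filter on; the label format is an assumption."""
        return [
            f"severity:{self.severity}",
            f"area:{self.area}",
            f"os:{self.os_name.lower()}",
            f"test:{self.test_id}",
        ]


defect = DefectRecord(
    title="Updater hangs at 90%",
    severity="high",
    area="auto-update",
    test_id="TC-042",
    os_name="Windows",
    artifacts=["screenshot.png", "event-log.txt"],
)
print(defect.labels())
```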

Stage 6: Test Cycle Closure & Reporting Best Practices

Verify completion criteria, analyze metrics, conduct retrospectives, and update documentation for continuous improvement.

- Exit Criteria Review: Confirm all critical/high defects resolved, DAST reports passed, and regression suite green.

- Metrics & Dashboards: Track test coverage, pass/fail rates, defect density, DAST vulnerability counts.

- Retrospective: Identify successes, pain points, and plan improvements for the next sprint.

- Knowledge Transfer: Update the software testing life cycle documentation, share actionable insights with stakeholders, and refine test frameworks.
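The closure metrics above reduce to simple arithmetic. As a sketch, the functions below compute pass rate and defect density and evaluate the exit criteria; treating "DAST reports passed" as zero outstanding findings is an assumption, and the numbers are made up for illustration.

```python
def pass_rate(passed, executed):
    """Fraction of executed tests that passed (0.0 when nothing ran)."""
    return passed / executed if executed else 0.0


def defect_density(defect_count, kloc):
    """Defects per thousand lines of code."""
    if kloc <= 0:
        raise ValueError("kloc must be positive")
    return defect_count / kloc


def exit_criteria_met(open_defects, regression_failures, dast_findings):
    """True when no critical/high defects remain and both suites are clean."""
    blocking = open_defects.get("critical", 0) + open_defects.get("high", 0)
    return blocking == 0 and regression_failures == 0 and dast_findings == 0


# Illustrative numbers only.
print(pass_rate(188, 200))
print(defect_density(12, 48))
print(exit_criteria_met({"medium": 3}, regression_failures=0, dast_findings=0))
```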

Best Practices for Effective Desktop Application Testing

To ensure reliable, secure, and high-performing desktop applications, teams should adopt a strategic mix of early QA involvement, automation, and continuous improvement. The following best practices help streamline testing efforts, reduce bugs, and deliver quality at speed.

- Embed QA Early in the Development Cycle: Shift-left principles reduce late-stage surprises.

- Invest in Automation First: Implement automation testing tools (e.g., WinAppDriver for native Windows UI, Selenium for embedded webviews).

- Balance Manual & Automated Testing: Use manual runs for UX and edge-case discovery; use automation for regression and DAST.

- Integrate Security Testing: Include both static and dynamic application security testing in pipelines; schedule application pen testing at key milestones.

- Adopt Continuous Integration: Gate merges on build, unit tests, and automated desktop tests - fail fast, fix fast.

- Parallelize Across Environments: Run tests on Windows 10, Windows 11, and macOS in parallel to accelerate feedback.

- Leverage Metrics & Dashboards: Surface actionable insights on test health, flakiness, and vulnerability trends.

- Maintain Your Test Suites: Regularly review and refactor test scripts to remove flaky or obsolete cases.
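Spotting flaky tests is easier with a rule you can automate. One common heuristic, sketched here as an assumption rather than a standard definition, is to flag any test that both passed and failed on the same code revision. The history format and `find_flaky` name are hypothetical.

```python
from collections import defaultdict


def find_flaky(history):
    """Flag tests with mixed outcomes on one revision.

    `history` is an iterable of (test_name, revision, passed) tuples.
    """
    outcomes = defaultdict(set)
    for name, revision, passed in history:
        outcomes[(name, revision)].add(passed)
    # Two distinct outcomes (True and False) on one revision means flaky.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


history = [
    ("test_open_file", "abc123", True),
    ("test_open_file", "abc123", False),  # same revision, different outcome
    ("test_save_file", "abc123", True),
    ("test_save_file", "def456", False),  # failed only after a code change
]
print(find_flaky(history))
```

Here `test_save_file` is not flagged, because its failure coincides with a new revision and may be a genuine regression.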

Challenges in Desktop Application Testing and How to Overcome Them

Desktop testing comes with unique hurdles, from OS pop-ups to hardware dependencies, that can impact test stability and coverage. Here are common challenges teams face and practical solutions to overcome them effectively.

- OS Pop-Ups & Permission Prompts: These disrupt automated tests and require manual handling.

Solution: Use tools like AutoIt or SikuliX to script interactions and handle elevation prompts.

- UI Flakiness from Animations & Transitions: UI delays can cause test failures or timing issues.

Solution: Disable animations in system settings or add explicit wait/sync points in test scripts.

- Complex Installer & Updater Workflows: Testing installers and updates manually is time-consuming and error-prone.

Solution: Automate MSI/DMG installations in clean VMs and verify updates using smoke tests.

- Hardware & Driver Dependencies: Tests may behave differently across hardware setups or with specific drivers.

Solution: Maintain a hardware compatibility matrix, use virtualized drivers, and run CI/CD-based compatibility tests.

- Integrating Security into DevOps (DevSecOps): Security is often siloed or delayed until late stages.

Solution: Embed automated DAST (e.g., OWASP ZAP), SAST (e.g., SonarQube), and application pen testing into your pipelines.
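For the animation and timing problem, an explicit wait usually beats a fixed sleep. Below is a minimal polling helper as a sketch; `wait_until` is a hypothetical name, and the condition here is simulated with a counter instead of a real UI query.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Simulated UI state: the "dialog" becomes visible on the third poll.
polls = {"count": 0}


def dialog_visible():
    polls["count"] += 1
    return polls["count"] >= 3


appeared = wait_until(dialog_visible, timeout=2.0, interval=0.05)
print("dialog appeared:", appeared)
```

The helper returns as soon as the condition holds, so fast machines are not slowed down and slow machines get the full timeout.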

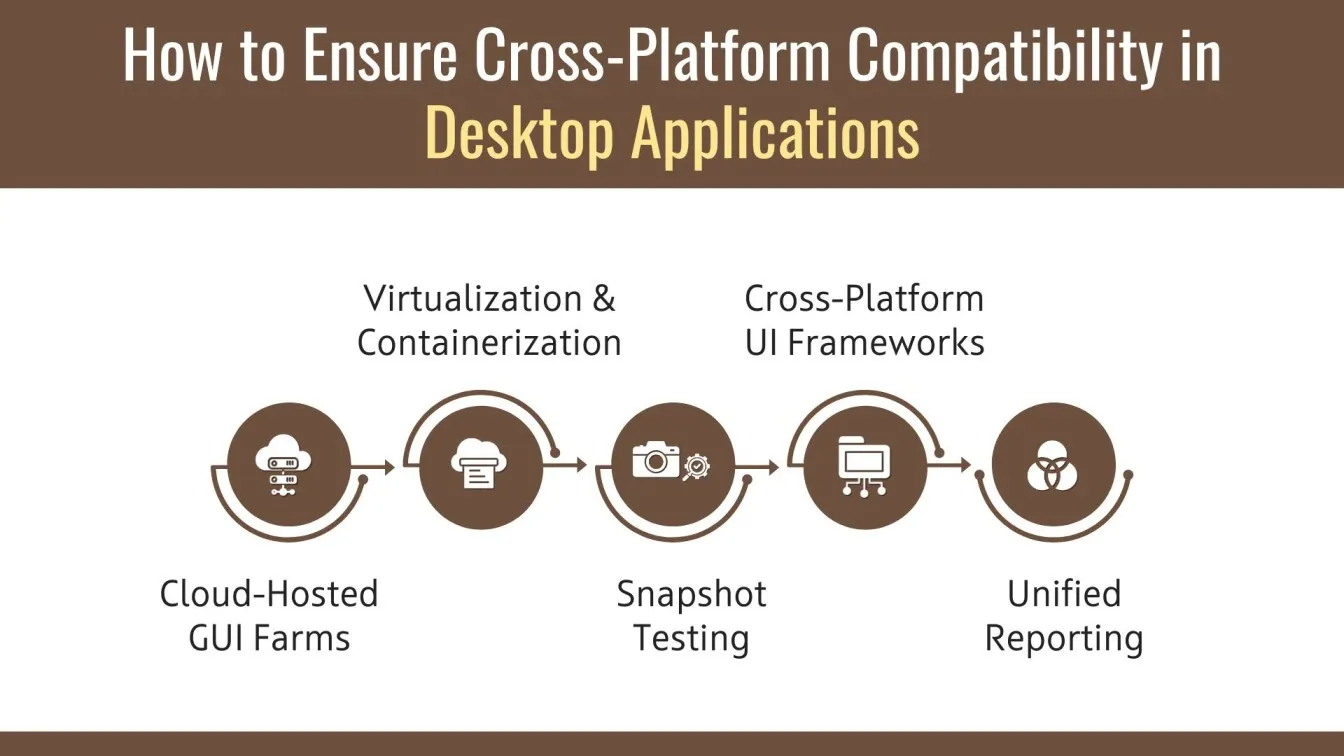

Ensuring Cross-Platform Compatibility in Desktop Applications

With desktop apps running across multiple operating systems, ensuring consistent behavior is essential. Cross-platform testing strategies like virtualization, unified frameworks, and cloud-hosted environments help validate UI, functionality, and performance across Windows, macOS, and Linux efficiently.

- Virtualization & Containerization: Test on fresh VMs for Windows 10, Windows 11, macOS, and popular Linux distros.

- Cross-Platform UI Frameworks: Leverage Electron, Qt, or JavaFX and unified selectors in your automation.

- Snapshot Testing: Use tools like SikuliX or Squish to perform pixel-level comparisons.

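- Cloud-Hosted GUI Farms: Run GUI suites on cloud-hosted Windows, macOS, and Linux machines instead of maintaining every OS in-house. Check platform coverage first: BrowserStack App Automate and AWS Device Farm primarily target mobile apps and browsers rather than native desktop GUIs.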

- Unified Reporting: Aggregate results from Windows, macOS, and Linux into a single dashboard.
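To show what a pixel-level snapshot comparison does under the hood, here is a dependency-free sketch. It assumes images are already decoded into rows of `(r, g, b)` tuples; real tools read screenshots from disk and offer more options. `diff_ratio` and the `tolerance` parameter are hypothetical names.

```python
def diff_ratio(baseline, candidate, tolerance=0):
    """Return the fraction of pixels that differ by more than `tolerance`.

    Both images are lists of rows, each row a list of (r, g, b) tuples.
    """
    same_shape = len(baseline) == len(candidate) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(baseline, candidate)
    )
    if not same_shape:
        raise ValueError("image dimensions differ")

    total = changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for px_a, px_b in zip(row_a, row_b):
            total += 1
            # A pixel counts as changed if any channel exceeds the tolerance.
            if any(abs(a - b) > tolerance for a, b in zip(px_a, px_b)):
                changed += 1
    return changed / total if total else 0.0


white, black = (255, 255, 255), (0, 0, 0)
baseline = [[white, white], [white, white]]
candidate = [[white, white], [white, black]]  # one of four pixels changed
print(diff_ratio(baseline, candidate))
```

A small tolerance helps absorb anti-aliasing and font-rendering differences between operating systems, which otherwise produce false failures.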

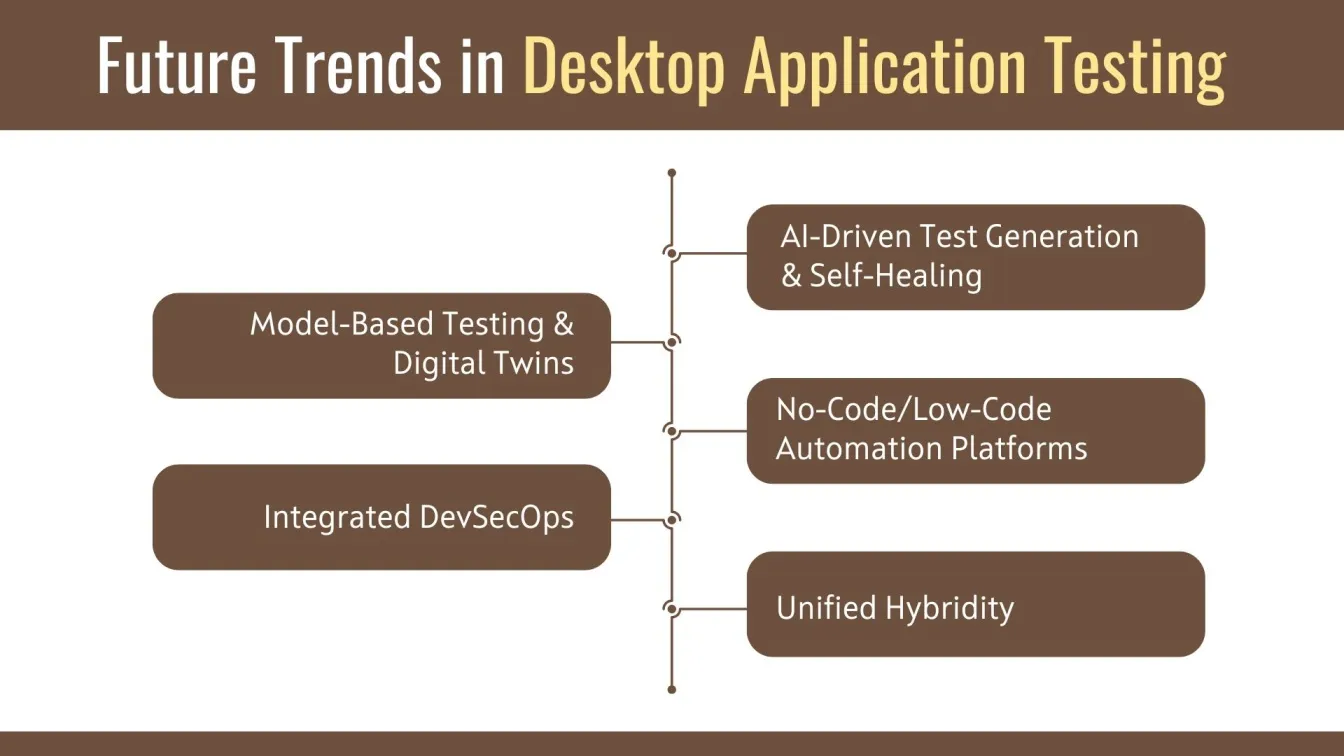

Future Trends in Desktop Application Testing

As desktop applications evolve, so do the testing approaches. Emerging trends like AI-driven automation, model-based testing, and no-code platforms are reshaping the landscape—making testing faster, smarter, and more accessible. Security and cross-platform testing are also becoming more integrated than ever.

- AI-Driven Test Generation & Self-Healing: AI-powered tools that generate and maintain test scripts based on user telemetry and code changes.

- Model-Based Testing & Digital Twins: Auto-generate test cases from UML diagrams and live application models.

- No-Code/Low-Code Automation Platforms: Empower non-technical stakeholders with visual test builders.

- Integrated DevSecOps: Security testing - including dynamic application security testing and application penetration testing - embedded seamlessly in CI/CD.

- Unified Hybridity: One platform to test desktop, web, and mobile applications together, reusing scripts and data.

Wrapping it Up

Desktop software testing in 2025 is more expansive than ever - spanning functional, performance, security, and UX checks across multiple operating systems. By combining manual and automation testing, integrating quality assurance services, and embedding application security testing (including DAST and pen testing) into your software testing life cycle, you can deliver high-quality, secure desktop applications on Windows 10/11 and macOS.

Select a mix of open-source tools and commercial suites whose features and integrations match your project requirements, then turn the results into actionable insights for continuous improvement.

People Also Ask

What is the difference between OWASP ZAP and Burp Suite?

OWASP ZAP is a free, open-source security scanner ideal for beginners, while Burp Suite is a more advanced, commercial tool with comprehensive features for professional penetration testers. Burp Suite generally offers more powerful automation and extensibility compared to ZAP.

What is the difference between white box testing and black box testing?

White Box Testing: Examines internal code, logic, and structure (unit tests, coverage).

Black Box Testing: Focuses on external functionality without internal visibility (UI, functional tests).

What is the three-stage testing life cycle?

The three stages are Test Planning, Test Design & Execution, and Test Reporting & Closure.

What are the 4 stages of a bug?

The four stages of a bug are New, Assigned, Fixed, and Closed. A bug starts as New when reported, then gets Assigned to a developer, Fixed after resolution, and finally Closed once verified and confirmed.
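The four stages can be modelled as a tiny state machine. This is a simplified sketch of the linear flow described above; real trackers usually add states such as Reopened or Rejected, and the names `ALLOWED`, `advance`, and `walk` are hypothetical.

```python
# Each state maps to the set of states it may move to next.
ALLOWED = {
    "New": {"Assigned"},
    "Assigned": {"Fixed"},
    "Fixed": {"Closed"},
    "Closed": set(),
}


def advance(current, target):
    """Return `target` if the transition is allowed, else raise ValueError."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current} to {target}")
    return target


def walk(path):
    """Apply each transition in order and return the final state."""
    state = path[0]
    for target in path[1:]:
        state = advance(state, target)
    return state


print(walk(["New", "Assigned", "Fixed", "Closed"]))
```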

What is application testing vs data testing?

Application Testing: Verifies software functionality, UI/UX, performance, and application security.

Data Testing: Validates data integrity, ETL processes, database accuracy, and data pipelines.
