A well-structured performance testing plan template helps ensure your software performs well under varying conditions. This high-level document serves as a strategic project plan that guides each stage of testing. It covers defining testing objectives, selecting appropriate test scenarios, and identifying tools such as JMeter or LoadRunner. A well-defined test plan helps detect potential performance issues early and keeps the team focused on performance optimisation. By applying common testing techniques, teams can capture valuable findings during testing and deliver quick feedback, leading to more efficient and effective testing cycles.

What’s next? Keep scrolling to find out:

🚀 Test Plan Overview - Define objectives and align with business goals and NFRs.

🚀 Testing Strategy - Focus on load, stress, and scalability testing.

🚀 Test Environment & Tools - Use tools like JMeter or k6 for accurate testing.

🚀 Metrics Monitoring - Track response time and throughput to spot issues.

🚀 Continuous Improvement - Integrate performance testing for ongoing optimization.

Overview of a Performance Testing Plan

A performance testing plan is the primary reference for evaluating an application's behaviour under varying conditions. It combines a detailed test plan with an exploratory test plan to outline testing types such as load, stress, and scalability testing, so that the end-user experience stays smooth under stress. The plan also covers risk identification, defines a detailed schedule, and names the management tool used for planning and tracking. With test automation configured and clear guides in place, it supports accurate simulations and efficient execution. A structured reporting process turns results into actionable insights that help optimise both system performance and user experience.

Understanding Non-Functional Requirements (NFRs) in Performance Testing

Non-Functional Requirements (NFRs) define how a software application behaves under specific conditions, complementing functional requirements. They are crucial for maintaining performance, stability, and security throughout the software testing process.

Why NFRs Matter:

NFRs impact testing scope and end-user satisfaction. Key software performance testing aspects include:

- Response Time: Measures how quickly the system responds to user interactions.

- Scalability: Evaluates the ability to handle high traffic and complex systems.

- Reliability: Ensures consistent functionality with quick recovery from failures.

- Availability: Measures the share of time the system is up and able to serve requests.
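
An availability NFR is easier to test against once it is converted into an allowed-downtime budget. The sketch below shows that arithmetic; the function name and the 30-day period are assumptions chosen for illustration, not part of any standard.

```python
def downtime_budget_minutes(availability_pct: float, period_days: int = 30) -> float:
    """Return the downtime allowed (in minutes) for a given availability target."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability_pct must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


# Illustrative targets; real targets come from the agreed NFRs.
for target in (99.0, 99.9, 99.99):
    print(f"{target}% -> {downtime_budget_minutes(target):.1f} min per 30 days")
```

A 99.9% target over 30 days leaves roughly 43 minutes of downtime, which gives testers a concrete number to check recovery times against.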

Aligning NFRs with Business Goals:

An effective test plan aligns NFRs with business requirements to support a high-quality software experience. Realistic test scenarios and continuous testing help uncover potential performance issues early. Clear metrics, reporting, and validation in production provide accurate insights, improve usability, and support successful testing across development phases.

Creating an Effective Performance Testing Strategy

Creating an effective performance testing strategy is crucial for ensuring that applications meet performance expectations under varying conditions. The strategy begins by defining clear objectives that align with business goals and non-functional requirements (NFRs). Identifying key metrics like response time, throughput, and scalability is essential for measuring success.

Next, choose performance testing tools that fit the project, whether open-source options such as JMeter or k6, or a commercial tool such as LoadRunner. Incorporating several non-functional testing types, including load testing, stress testing, and endurance testing, ensures comprehensive coverage.

Set up a test environment that mirrors the production system for accurate results. The strategy should also define test scenarios, environments, and workloads to simulate real-world usage.

Evaluating performance testing automation tools and integrating them with your CI/CD pipeline improves testing efficiency and consistency. Finally, analysing the results against the NFRs and reporting actionable insights helps ensure the application delivers optimal performance.

Key Components of an Effective Performance Testing Plan Template

An effective performance testing plan defines clear testing activities, focuses on critical functionalities, and follows a structured approach. With effective test planning, visibility into coverage, and adaptable testing tools, teams can address platform-specific issues, reduce manual effort, and gain deep insight into enterprise systems, which supports comprehensive validation and robust quality operations.

Step 1: Define the Test Plan Overview

The Test Plan Overview is the first phase in creating a performance testing plan. It provides a high-level summary, outlines the reporting process used to communicate results, and aligns all stakeholders with the testing process.

- Scope: Define the systems and processes involved, including security testing and the other types of testing to be covered.

- Stakeholders: Identify key individuals (developers, testers, business leaders) from the project requirements, and use the project management tool to track feedback on code changes.

- Goals: Set clear objectives, such as load handling and response time, with efficient module communication.

- Overview: Briefly describe the approach and expected results, and document findings to support future continuous deployment.

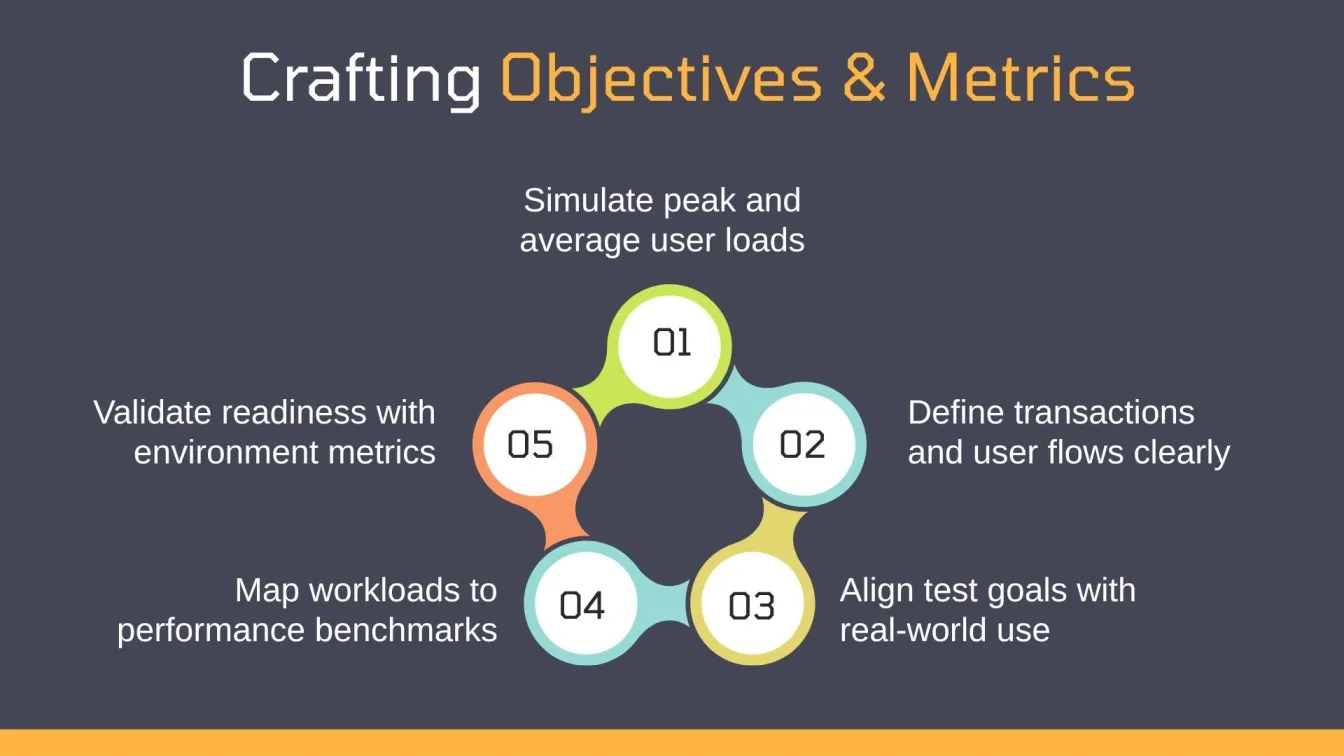

Step 2: Set Testing Objectives and Success Criteria

Testing objectives and success criteria state what the tests must demonstrate and how a pass is judged, so that results can be measured against business goals and NFRs.

- Objectives: State what each test should demonstrate, such as load handling and response time under peak and average load.

- Success Criteria: Set measurable targets for key metrics like response time, throughput, and scalability, so that each run can be judged as a pass or a fail.

- Alignment: Confirm that the objectives and criteria agree with the business goals, the NFRs, and the scope set in the test plan overview.
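
Success criteria for this step are easier to apply when they are written as numeric thresholds. The following is a minimal sketch of that idea; the class name, metric names, and limits are all hypothetical and would be replaced by the targets agreed with stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    metric: str                     # e.g. "p95_response_ms"
    limit: float                    # threshold value (illustrative)
    higher_is_better: bool = False  # True for metrics such as throughput

    def is_met(self, measured: float) -> bool:
        if self.higher_is_better:
            return measured >= self.limit
        return measured <= self.limit


# Hypothetical targets; real values come from the NFRs.
CRITERIA = [
    SuccessCriterion("p95_response_ms", 800),
    SuccessCriterion("error_rate_pct", 1.0),
    SuccessCriterion("throughput_rps", 200, higher_is_better=True),
]


def evaluate(measured: dict[str, float]) -> dict[str, bool]:
    """Map each criterion's metric name to pass/fail for one test run."""
    return {c.metric: c.is_met(measured[c.metric]) for c in CRITERIA}


run = {"p95_response_ms": 650, "error_rate_pct": 0.4, "throughput_rps": 180}
print(evaluate(run))
```

Keeping the direction of each metric explicit avoids a common slip where a throughput shortfall is reported as a pass.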

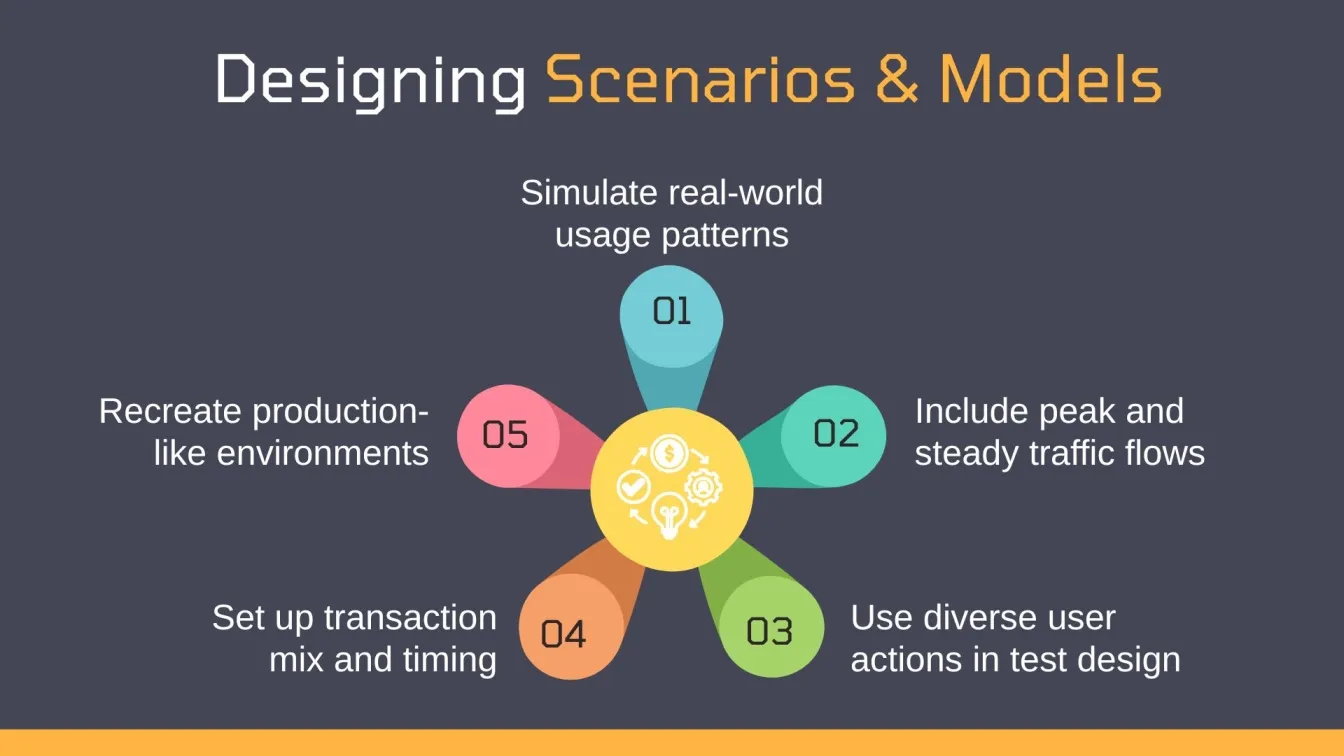

Step 3: Design Test Scenarios and Workload Models

Creating test scenarios and workload models lets you simulate real-world usage, so you can confirm the application performs under expected conditions and that the tests fit the overall approach to testing.

- Scenarios: Create different user behaviour scenarios, including peak and average load situations, and cover the types of testing that apply to the project.

- Workload Models: Define user distributions, transaction types, and test durations based on expected real-world usage, keeping the scope of testing aligned with the objectives set in the one-page agile test plan template.

- Testing Environment Simulation: Replicate actual usage conditions to identify bottlenecks and issues early. Log results in a management tool, document findings, and review them against common suspension criteria and deployment readiness.
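
A workload model can be captured as a small data structure plus one sizing calculation. The sketch below uses Little's Law (concurrent users = throughput × time per iteration) to estimate how many virtual users a target rate needs, then splits them across journeys. The journey names, shares, and timings are hypothetical.

```python
import math

# Hypothetical transaction mix: share of total traffic per user journey.
WORKLOAD_MIX = {"browse": 0.60, "search": 0.25, "checkout": 0.15}


def required_users(target_rps: float, response_s: float, think_s: float) -> int:
    """Little's Law: concurrent users = iterations per second x seconds per iteration."""
    return math.ceil(target_rps * (response_s + think_s))


def allocate_users(total_users: int, mix: dict[str, float]) -> dict[str, int]:
    """Split virtual users across journeys in proportion to the mix.

    Rounding means the parts may differ from the total by a user or two.
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("mix shares must sum to 1.0")
    return {name: round(total_users * share) for name, share in mix.items()}


peak_users = required_users(target_rps=50, response_s=0.5, think_s=3.5)
print(peak_users, allocate_users(peak_users, WORKLOAD_MIX))
```

Think time matters here: leaving it out of the model understates the number of virtual users needed to reach the same request rate.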

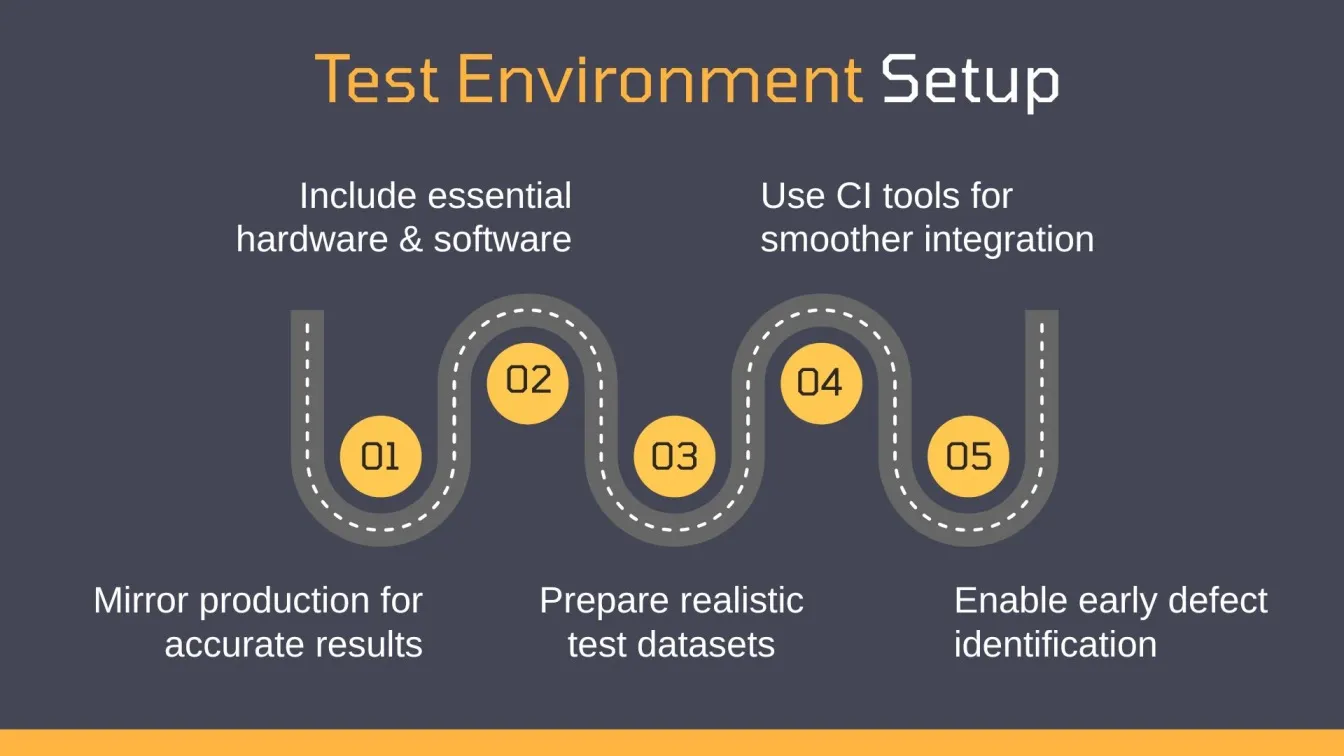

Step 4: Prepare the Test Environment Setup and Configuration

The test environment setup ensures that tests run under controlled and consistent conditions, in line with the overall plan and the agreed scope of testing.

- Hardware & Software Configuration: Set up the necessary infrastructure, including servers, databases, and networks, and integrate it with the development pipeline and continuous integration tools.

- Test Data: Prepare representative test data that mimics production conditions and supports core functionalities, including compatibility testing and usability testing.

- Environment Replication: Ensure that the test environment mirrors the production environment as closely as possible to provide accurate results and help with early defect tracking.
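
One lightweight way to check environment replication is to compare the two environments' settings and list any drift before a run. This is a sketch only: the setting names and values are hypothetical, and in practice they would be read from whatever configuration source the team uses.

```python
def config_drift(production: dict, test: dict) -> dict:
    """Return settings whose values differ between the two environments."""
    drift = {}
    for key in sorted(production.keys() | test.keys()):
        prod_value = production.get(key, "<missing>")
        test_value = test.get(key, "<missing>")
        if prod_value != test_value:
            drift[key] = (prod_value, test_value)
    return drift


# Hypothetical settings for illustration only.
production = {"app_servers": 4, "db_version": "14.9", "cache_enabled": True}
test_env = {"app_servers": 2, "db_version": "14.9", "cache_enabled": True}

for key, (prod_value, test_value) in config_drift(production, test_env).items():
    print(f"{key}: production={prod_value} test={test_value}")
```

Known differences, such as a smaller server count, can then be recorded in the plan so that results are interpreted with that gap in mind.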

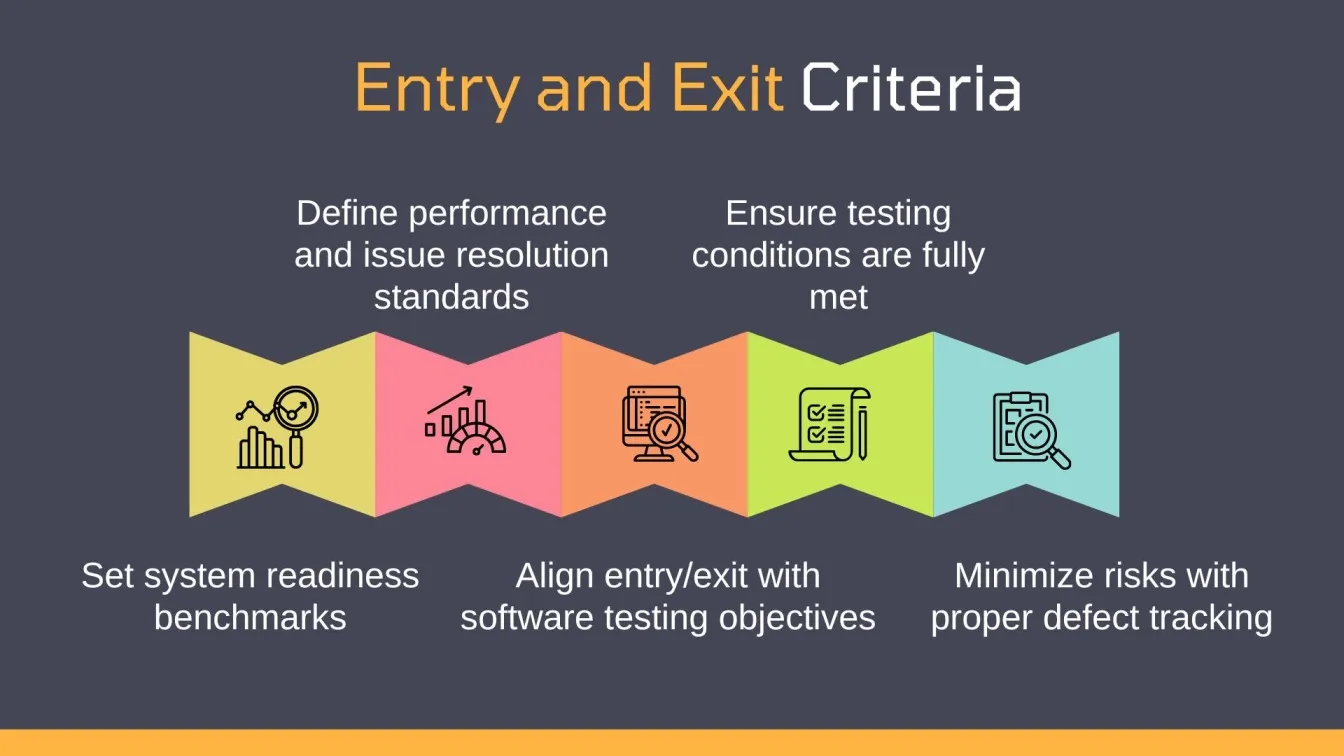

Step 5: Define Entry and Exit Criteria

Entry and exit criteria help ensure that testing starts and ends under the right conditions, providing clear guidelines on when to begin and conclude actual testing.

- Entry Criteria: Define prerequisites, such as system readiness, integration testing, and environment setup, based on the software test plan templates.

- Exit Criteria: Specify conditions that must be met to conclude testing (e.g., meeting performance benchmarks, resolving critical issues) while aligning with the planning process.

- Test Readiness: Ensure all conditions for testing are fully met before proceeding, supporting overall end-user experience and avoiding risks noted in defect tracking.
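
Entry criteria work well as an explicit checklist that blocks the run until every item is satisfied. The sketch below assumes a simple dictionary of checklist items; the item names are illustrative and not a prescribed list.

```python
# Hypothetical entry checklist; item names are illustrative.
ENTRY_CHECKLIST = {
    "environment provisioned": True,
    "integration tests passed": True,
    "test data loaded": False,
    "monitoring enabled": True,
}


def unmet_criteria(checklist: dict[str, bool]) -> list[str]:
    """Return the checklist items that are not yet satisfied."""
    return [item for item, done in checklist.items() if not done]


blockers = unmet_criteria(ENTRY_CHECKLIST)
if blockers:
    print("Not ready to start:", ", ".join(blockers))
else:
    print("Entry criteria met; testing can begin.")
```

The same shape can be reused for exit criteria by swapping in conditions such as benchmarks met and critical issues resolved.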

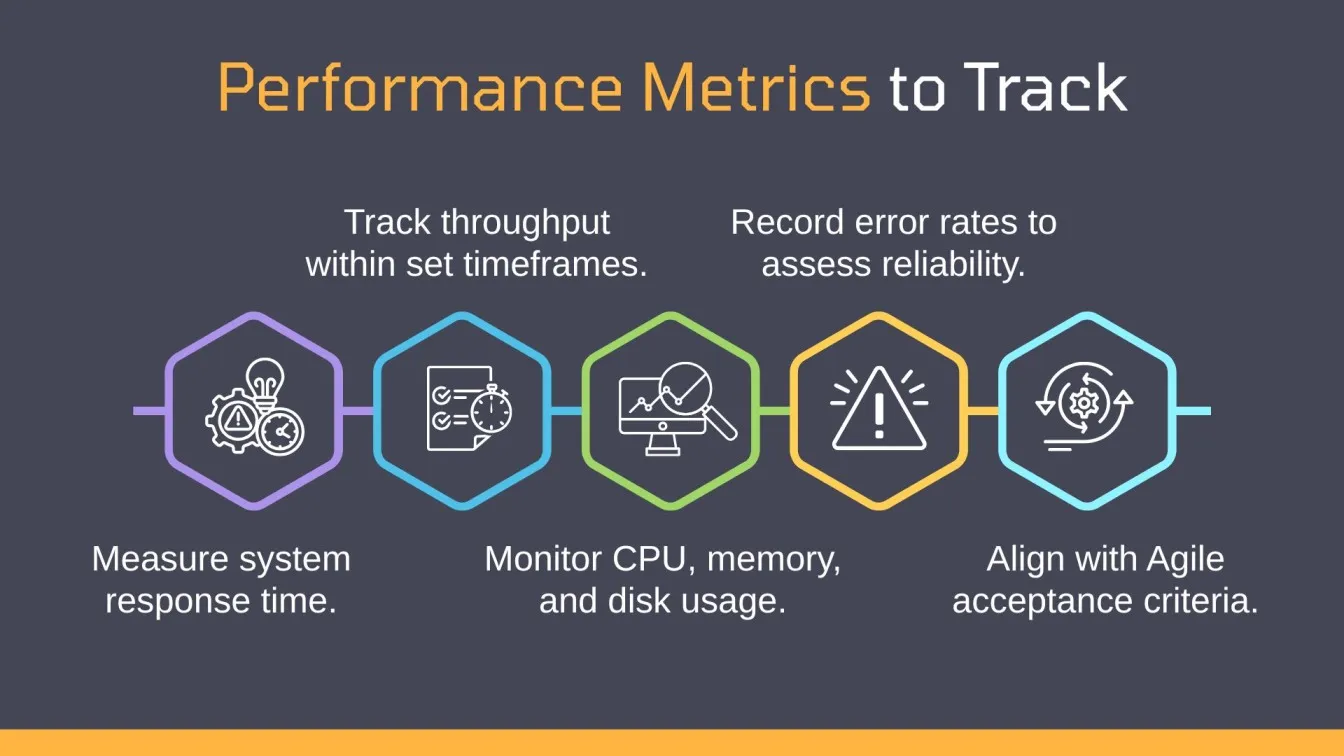

Step 6: Identify Performance Metrics to Track

Tracking the right performance metrics is vital for assessing system behaviour during tests and ensuring that goals align with the primary objective of the comprehensive test plan.

- Response Time: Measure how long the system takes to respond to requests.

- Throughput: Track the number of requests processed within a specific timeframe, and compare it with the acceptance criteria defined in your agile development process.

- Resource Utilisation: Monitor CPU, memory, and disk usage during tests to identify bottlenecks, while ensuring efficient use of testing resources.

- Error Rates: Track failures or unexpected issues that occur during testing to gauge system reliability.
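
The response time, throughput, and error rate metrics above can be derived from raw request samples. The sketch below assumes each sample records an elapsed time and a success flag, and uses the nearest-rank method for the percentile; the `Sample` type and field names are hypothetical, and load tools usually report these figures directly.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    elapsed_ms: float
    ok: bool


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarise(samples: list[Sample], duration_s: float) -> dict[str, float]:
    """Compute headline metrics for one test run."""
    times = [s.elapsed_ms for s in samples]
    failures = sum(1 for s in samples if not s.ok)
    return {
        "p95_response_ms": percentile(times, 95),
        "throughput_rps": len(samples) / duration_s,
        "error_rate_pct": 100 * failures / len(samples),
    }


# Synthetic samples: 100 requests over 20 seconds, every tenth one failing.
samples = [Sample(float(i), i % 10 != 0) for i in range(1, 101)]
print(summarise(samples, duration_s=20))
```

Percentiles are used instead of the mean because a handful of slow requests can hide behind a healthy-looking average.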

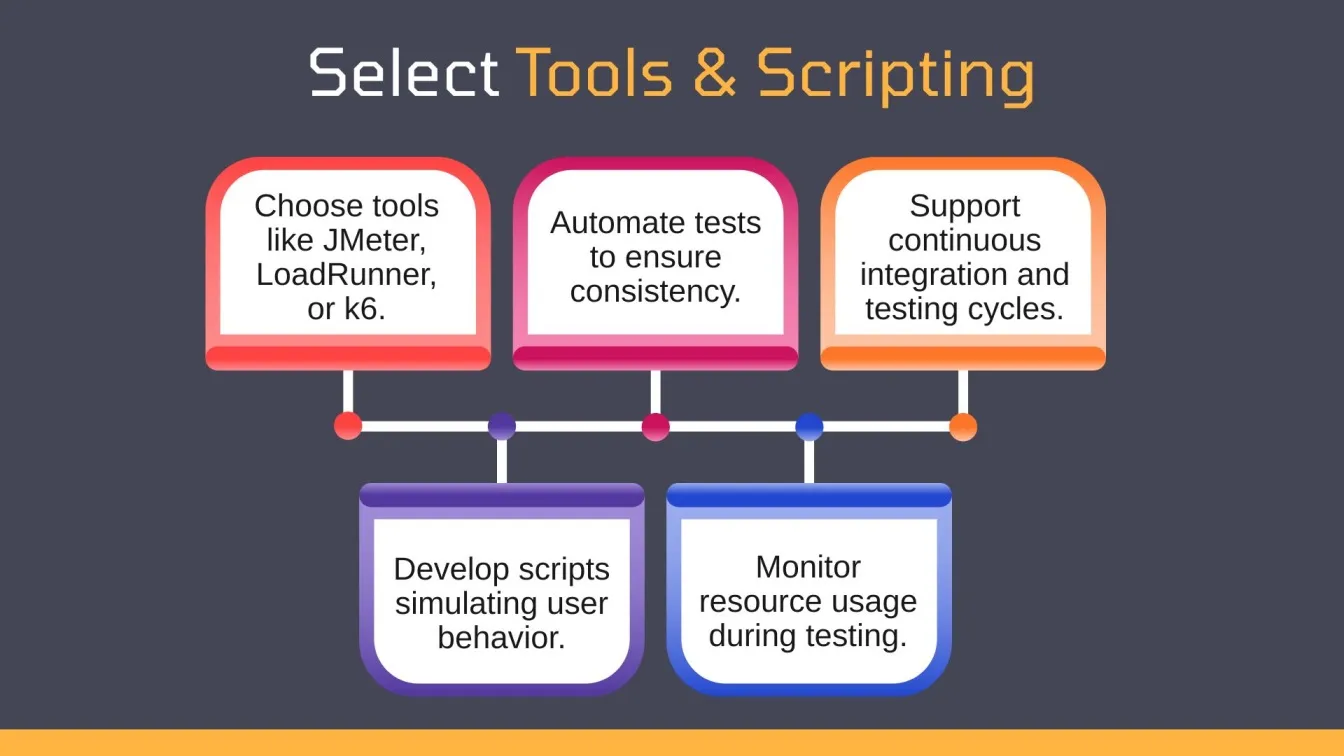

Step 7: Choose Tools and Develop Scripts

Selecting the appropriate load performance testing tools and developing scripts allows you to automate and streamline the testing process.

- Tools: Choose performance testing tools like Apache JMeter, LoadRunner, or k6 based on your needs, making sure they fit the overall testing strategy and support continuous integration.

- Scripting: Develop scripts to simulate real-user behaviour, including navigation, transactions, and interactions between systems, while also considering compatibility testing and user acceptance testing.

- Automation: Use automation testing tools to run tests repeatedly and confirm that results are consistent, which makes test sessions more productive and resource usage easier to monitor.
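
To show the shape of a load script without tying it to a specific tool, the sketch below drives a stand-in transaction from several threads and records the timing and outcome of each call. It is not a JMeter, LoadRunner, or k6 script; `run_load` and `fake_transaction` are hypothetical names, and the sleep stands in for a real request to the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load(action: Callable[[], None], users: int, iterations: int) -> list[tuple[float, bool]]:
    """Run `action` from `users` threads; return (elapsed_seconds, ok) per call."""

    def one_user() -> list[tuple[float, bool]]:
        results = []
        for _ in range(iterations):
            start = time.perf_counter()
            try:
                action()
                ok = True
            except Exception:
                ok = False  # count any exception as a failed transaction
            results.append((time.perf_counter() - start, ok))
        return results

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(one_user) for _ in range(users)]
        return [result for future in futures for result in future.result()]


def fake_transaction() -> None:
    """Stand-in for a real request to the system under test."""
    time.sleep(0.01)


results = run_load(fake_transaction, users=5, iterations=4)
print(f"{len(results)} calls, {sum(ok for _, ok in results)} succeeded")
```

Dedicated tools add ramp-up, pacing, and distributed load generation on top of this basic loop, which is why they are usually preferred for real runs.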

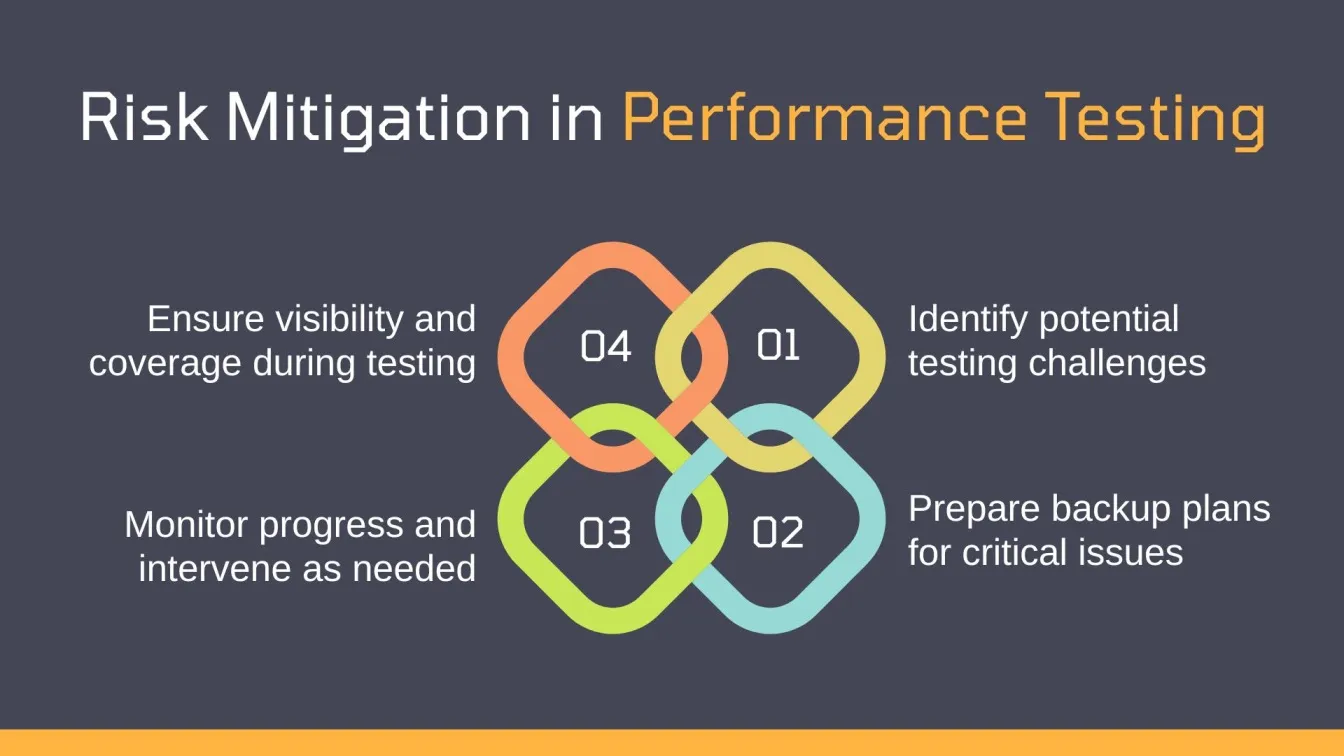

Step 8: Create a Risk Mitigation Strategy

A risk mitigation strategy is essential for addressing potential issues that might arise during the performance testing process.

- Identify Risks: Analyse possible challenges like tool failures, system downtime, or resource limitations, which may lead to critical system failures.

- Mitigation Plans: Prepare strategies to address these risks, including backup plans and contingency resources, utilising adaptable testing tools and advanced test management features.

- Monitoring: Continuously monitor testing progress and intervene when a risk starts to affect it, so that coverage stays visible and user requirements are still met.
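
A simple risk register can rank the risks identified above so that mitigation effort goes to the highest scores first. The sketch assumes a likelihood × impact scoring scheme on 1 to 5 scales, which is a common convention but only one option; all entries and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (severe)
    mitigation: str

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


# Hypothetical entries based on the risk types named above.
RISKS = [
    Risk("Load tool failure", 2, 4, "Keep a second tool configured as backup"),
    Risk("Test environment downtime", 3, 5, "Reserve a fallback window"),
    Risk("Insufficient load generators", 3, 3, "Pre-book extra capacity"),
]

for risk in sorted(RISKS, key=lambda r: r.score, reverse=True):
    print(f"{risk.score:>2}  {risk.name}: {risk.mitigation}")
```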

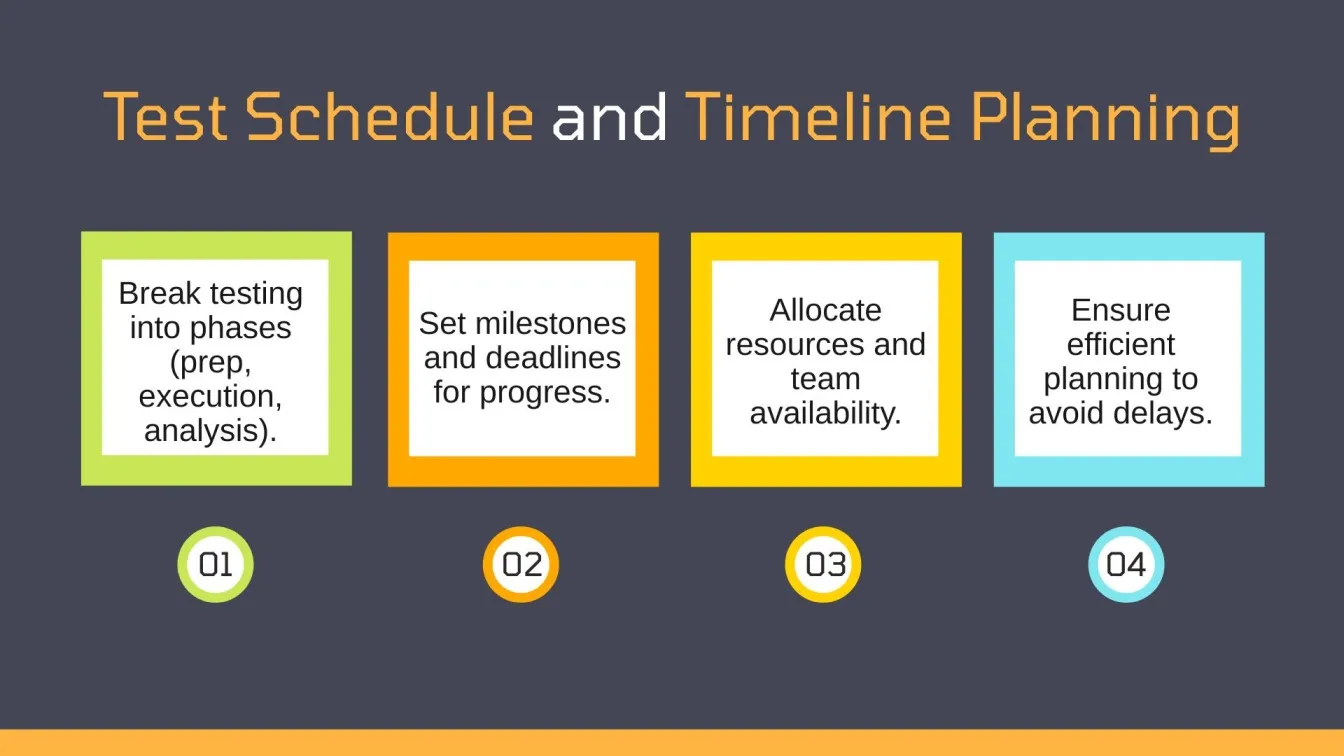

Step 9: Develop the Test Schedule and Timelines

Creating a test schedule and clear timelines ensures that performance testing is completed within the planned duration.

- Test Phases: Break the testing process into manageable phases (e.g., preparation, execution, analysis) that match the chosen testing methodology.

- Milestones: Set key milestones and deadlines so that progress can be tracked, scope creep is caught early, and the project stays within its high-level objectives.

- Resource Allocation: Plan resources and team availability, including time from the development team, to avoid delays and bottlenecks during testing.
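
Phase dates can be derived from a start date and a list of durations, which keeps the schedule easy to recompute when a phase slips. The sketch below assumes phases run back to back and counts calendar days; the phase lengths and the start date are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical phase lengths in calendar days.
PHASES = [("Preparation", 5), ("Execution", 10), ("Analysis", 4)]


def build_schedule(start: date, phases: list[tuple[str, int]]) -> list[tuple[str, date, date]]:
    """Lay phases end to end and return (name, first_day, last_day) for each."""
    schedule = []
    cursor = start
    for name, days in phases:
        last_day = cursor + timedelta(days=days - 1)
        schedule.append((name, cursor, last_day))
        cursor = last_day + timedelta(days=1)
    return schedule


for name, first_day, last_day in build_schedule(date(2025, 3, 3), PHASES):
    print(f"{name:<12} {first_day} to {last_day}")
```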

Step 10: Build a Reporting and Analysis Plan

A reporting and analysis plan ensures the proper documentation and review of test results for actionable insights.

- Report Format: Define the structure of reports, including graphs, tables, and results from both manual and automated testing.

- Key Metrics: Focus on response time, throughput, and resource usage, and include the current risk assessment.

- Analysis: Identify bottlenecks, trends, and opportunities for improvement, noting which testing methods and focus areas produced each finding.

- Stakeholder Communication: Communicate findings clearly to stakeholders with actionable recommendations, so the reporting process gives the development team the visibility it needs.
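
A report format can be as simple as a fixed-width table of targets against actual results. The sketch below is one possible layout, assuming that lower values are better for every metric listed; the function name, metrics, and figures are hypothetical.

```python
def format_report(title: str, rows: list[tuple[str, float, float]]) -> str:
    """Render (metric, target, actual) rows as a fixed-width text table.

    Assumes lower values are better for every metric listed.
    """
    lines = [title, f"{'Metric':<20}{'Target':>10}{'Actual':>10}  Status"]
    for metric, target, actual in rows:
        status = "PASS" if actual <= target else "FAIL"
        lines.append(f"{metric:<20}{target:>10.1f}{actual:>10.1f}  {status}")
    return "\n".join(lines)


# Hypothetical figures for illustration only.
print(format_report("Checkout load test", [
    ("p95 response (ms)", 800, 742.3),
    ("Error rate (%)", 1.0, 1.6),
    ("CPU utilisation (%)", 75, 68.0),
]))
```

Placing the target next to the actual value lets stakeholders see at a glance which NFRs were missed, without reading raw tool output.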

Analyzing and Interpreting Performance Testing Results

Analysing performance testing results is essential for verifying that the application meets non-functional requirements and business goals. It ensures core functionalities and high-level objectives are validated under realistic test scenarios.

- Data Collection: Capture key metrics like throughput, resource usage, and error rates using web performance and load testing tools, covering the main usability and interaction scenarios.

- Benchmark Comparison: Compare test data with predefined quality standards to identify gaps, incomplete coverage, and platform-specific issues. Software test plan templates help define the scope of testing and the critical test scenarios.

- Identifying Bottlenecks: Use performance testing tools to detect critical defects such as slow responses or high resource use. Integration testing and exploratory testing can uncover issues that span whole system functions and complex systems.

- Trends and Root Cause: Look for trends in scalability or reliability issues, and use automated testing tools for root cause analysis to support risk assessment and defect management.

- Actionable Insights: Use the insights to guide improvements and resolve outstanding usability or compatibility problems. Quick feedback, regular communication, and a clear testing timeline support continuous testing and efficient execution.
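
Benchmark comparison and trend spotting can be partly automated by checking each run against a stored baseline. The sketch below flags metrics that worsened by more than a tolerance; it assumes every metric is lower-is-better, and the 10% tolerance, function name, and numbers are hypothetical.

```python
def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance_pct: float = 10.0) -> dict[str, float]:
    """Return metrics that got worse by more than tolerance_pct, with the % change.

    Assumes every metric is lower-is-better (for example latency or error rate).
    """
    regressions = {}
    for metric, base in baseline.items():
        if metric not in current or base == 0:
            continue  # skip metrics that cannot be compared
        change_pct = (current[metric] - base) / base * 100
        if change_pct > tolerance_pct:
            regressions[metric] = round(change_pct, 1)
    return regressions


# Hypothetical numbers from two runs of the same scenario.
baseline = {"p95_response_ms": 600, "error_rate_pct": 0.5, "cpu_pct": 60}
current = {"p95_response_ms": 720, "error_rate_pct": 0.5, "cpu_pct": 63}
print(find_regressions(baseline, current))
```

A flagged metric is a starting point for root cause analysis, not a diagnosis; resource metrics from the same run usually show where to look next.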

Common Mistakes to Avoid When Creating a Performance Testing Plan

An effective performance testing plan is essential for successful testing. However, several mistakes can lead to incomplete or inaccurate results.

- Lack of Clear Objectives: Without clear goals, such as targets for resource usage and error rates or defined load testing criteria, the results from testing services and automated load testing tools cannot be judged as a pass or a fail.

- Ignoring Non-Functional Requirements (NFRs): Not aligning tests with non-functional factors like scalability and reliability can leave critical performance areas untested, resulting in critical defects in enterprise systems.

- Inadequate Test Environment Setup: A poorly configured test environment leads to inaccurate results. It is important to replicate the production environment as closely as possible, perform compatibility testing, and properly document feedback and code quality checks.

- Overlooking Peak Load Testing: Testing only under average conditions, without using a load testing tool to simulate peak traffic, can miss high-risk areas and lead to critical system failures during high traffic.

- Insufficient Reporting and Analysis: Failing to document and analyse results means key findings are missed. Structured defect tracking and regular communication are crucial for rapid feedback and for closing unresolved issues.

Conclusion: Best Practices for Optimal Performance Testing Results

In conclusion, performance testing remains a vital part of the software development process, ensuring applications function efficiently under expected and peak loads. A clear testing approach backed by a defined testing schedule allows testing teams to focus on performance-related goals such as response time, scalability, and throughput. Using the right non-functional testing types, including load testing, stress testing, and scalability testing, helps uncover critical defects and potential performance issues early.

A well-defined test plan that integrates realistic test scenarios provides accurate insights into how the application behaves in real-world use. Aligning performance goals with business requirements and non-functional testing techniques ensures your system meets both technical and end-user expectations.

An efficient test management platform supports effective test execution, manages defect reports, and provides visibility into testing progress. By addressing incomplete test scenarios, improving focused testing, and streamlining the project plan, teams can deliver high-quality software that aligns with overall objectives. Ultimately, following best practices and maintaining continuous testing throughout the development phase leads to reliable and high-performing applications.

Frugal Testing is a renowned SaaS application testing company, offering a range of specialized services to enhance your application's quality and performance. The services offered by Frugal Testing include comprehensive load testing services, designed to assess your application's performance under high traffic conditions. In addition, Frugal Testing leverages AI-driven test automation services to ensure faster, more accurate testing with minimal human intervention. By combining advanced testing methodologies with AI technology, Frugal Testing guarantees robust and reliable results for your SaaS applications, enabling you to deliver seamless, high-quality software to your users.

People Also Ask

What is RTM in testing?

RTM (Requirements Traceability Matrix) ensures all requirements are linked to corresponding test cases for complete coverage.

What are the assumptions of the test plan document?

Assumptions include factors like resource availability, system configurations, and test data needed for execution.

What is the role of caching in performance testing?

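Caching improves performance by storing frequently accessed data, which reduces response times. In performance testing, results should note whether the cache was warm or cold, because this affects the measured response times.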

What is a workload model in performance testing?

A workload model simulates real user activity to evaluate system performance under realistic load conditions.

How can performance bottlenecks be identified?

Bottlenecks can be identified by analyzing system metrics such as CPU, memory usage, and response times during testing.
