Visual search has transformed from a futuristic concept into an everyday consumer experience, and few companies embody this shift better than Pinterest. What started as a digital pinboard for inspiration has quietly become one of the world’s most sophisticated AI-powered visual discovery engines, serving over 465 million monthly active users globally.

At the heart of Pinterest’s success lies a powerful integration of computer vision, machine learning, and large-scale data infrastructure. From recognizing a coffee table in a photo to suggesting style pairings, Pinterest’s AI systems are designed not just to classify objects but to predict intent, inspire discovery, and drive commerce.

In this blog, we’ll break down the architecture, models, and testing frameworks behind Pinterest’s visual search engine, exploring how it scales across billions of images, powers e-commerce, and sets the stage for the future of AI-driven recommendations.

This deep dive will cover:

📌 How Pinterest scales AI-powered visual search across billions of pins worldwide.

📌 Machine learning and image recognition testing for Pinterest Lens, shoppable pins, and product recommendations.

📌 Best practices for delivering relevant, personalized, and low-latency visual discovery experiences.

📌 Future trends in AI-driven visual search, multimodal embeddings, and personalized commerce.

Introduction to Pinterest’s AI Visual Search

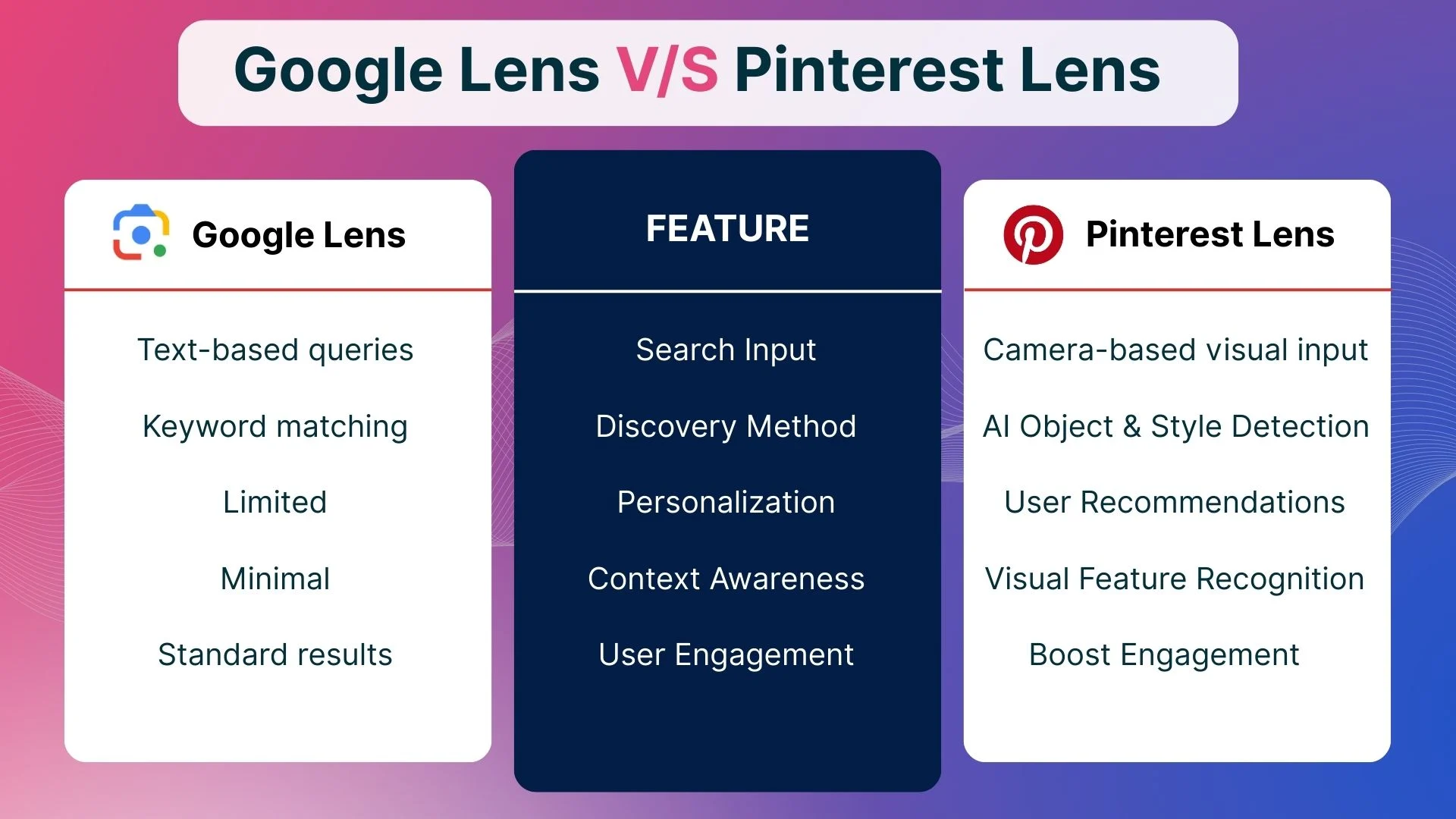

Pinterest is more than a social network: it's a next-generation visual search engine powered by machine learning algorithms and visual recognition technology. Unlike text-first platforms like Google, Pinterest allows images to serve as the query itself. Users can snap, upload, or tap on a photo to discover related content, style ideas, product pins, shoppable pins, and personalized recommendations.

By leveraging Visual Language Models, Visual Lens, and multimodal search, Pinterest delivers globally relevant experiences across the U.S., EU, and Asia, enabling users to explore inspiration while complying with GDPR, CCPA, and local data privacy laws. This AI-driven discovery turns every image into actionable insights for both users and businesses, bridging visual commerce and content curation on a global scale.

Why visual discovery matters for user engagement and retention

The Pinterest Lens tool allows users to point their smartphone at anything, say, a chair in a café, and instantly discover similar products or style ideas. This shift from keyword search to visual discovery reduces friction, making the user journey more intuitive.

Data shows that visual search increases engagement and session length by helping users refine ideas visually rather than textually. This is crucial for retention in markets like the U.S., Europe, and Asia, where e-commerce is driven heavily by style curation and personalized suggestions.

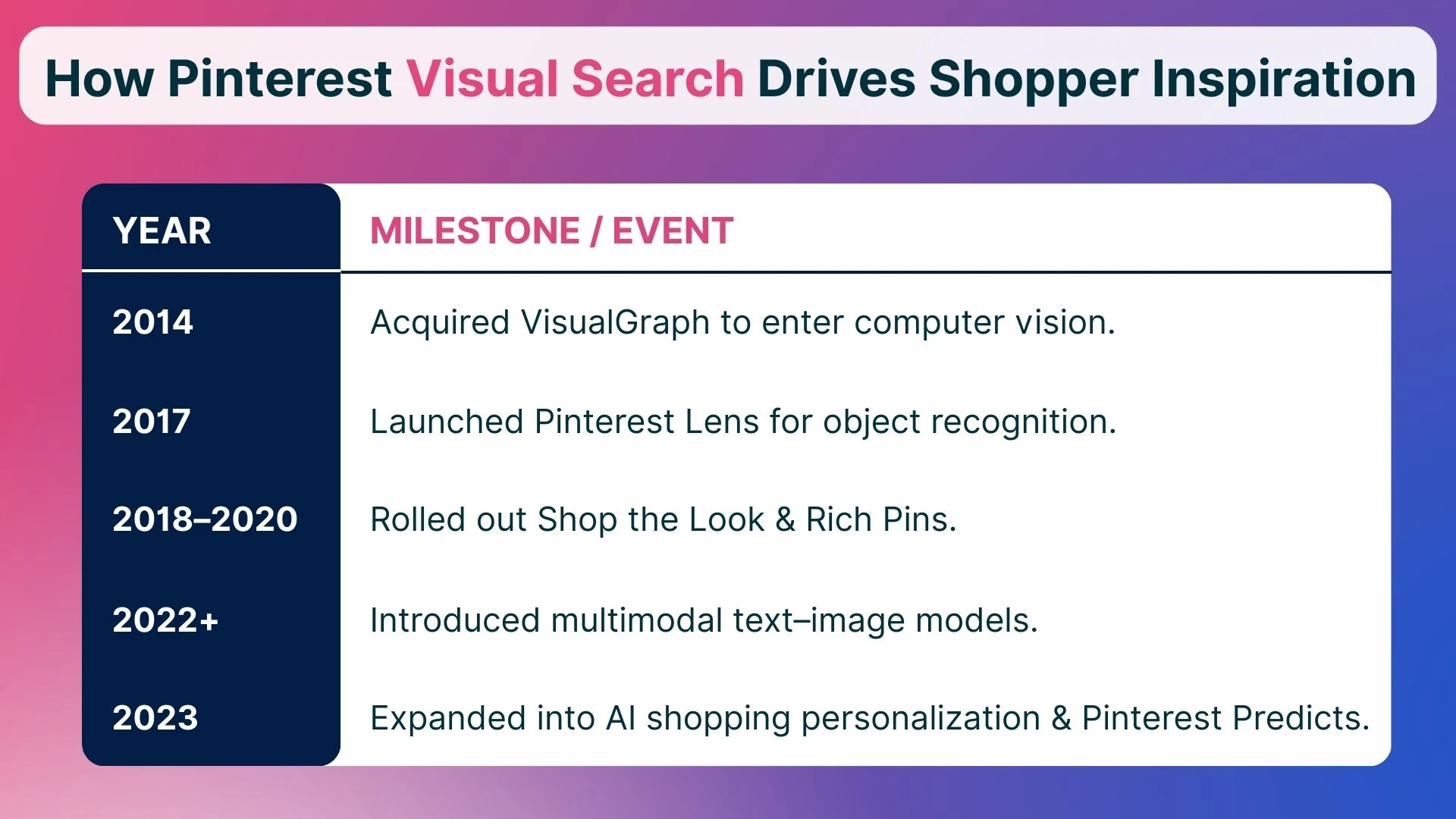

Key milestones in Pinterest’s computer vision journey

Pinterest’s rise to becoming a leader in AI-powered visual discovery has been gradual but strategic. The company made bold moves through acquisitions and product launches that steadily turned it from a simple inspiration board into a global visual search engine.

Each of these milestones reflects Pinterest’s vision to make search more intuitive, discovery more personal, and commerce more seamless. Together, they mark the evolution of a platform that doesn’t just understand images; it understands intent.

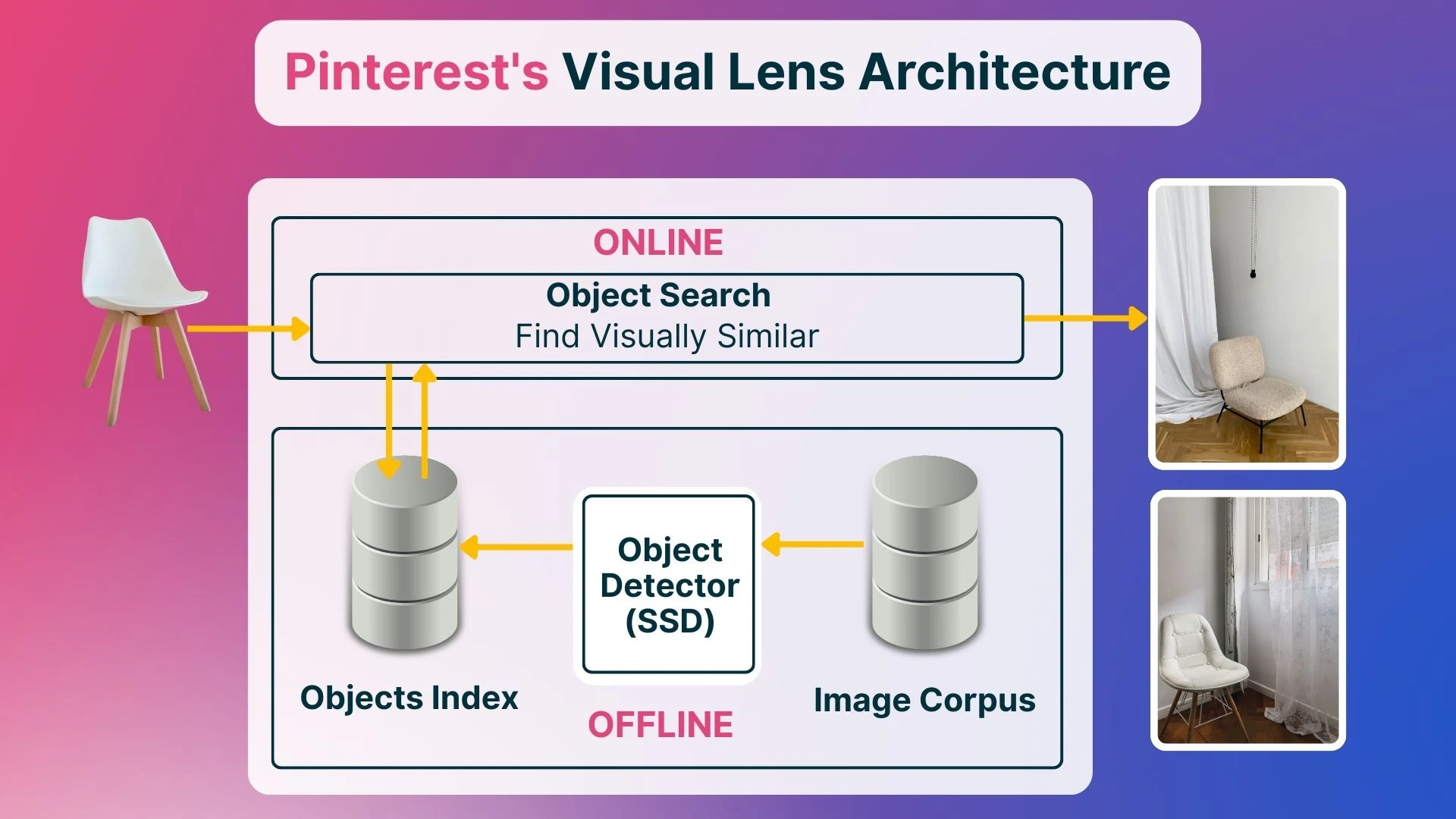

Core Architecture of Visual Search Engine

Behind every pin, click, and search lies a massive AI engine designed to handle visual data at an unprecedented scale. Visual search technology is built on an AI-first architecture that must analyze billions of images in real time while still delivering fast, relevant, and high-quality results. This requires a combination of deep learning models, embedding systems, and distributed infrastructure working seamlessly together.

Neural networks powering large-scale image understanding

At the core of Pinterest’s visual search are deep convolutional neural networks (CNNs), optimized to detect objects, textures, and visual patterns. Models like Faster R-CNN and ResNet variants are trained on Pinterest’s massive dataset of labeled pins, enabling large-scale object detection and segmentation.

Using bounding boxes and image crops, Pinterest can identify multiple objects within a single pin, such as a lamp, rug, and sofa in a living-room photo, creating a structured visual vocabulary for search and personalized recommendations.
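To make the idea of bounding boxes and crops concrete, here is a minimal sketch in plain Python. It assumes a detector has already produced labeled boxes with confidence scores; the `Detection` class, the `min_score` threshold, and the toy image are illustrative assumptions, not Pinterest’s actual data structures.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    score: float
    box: tuple  # (x_min, y_min, x_max, y_max) in pixels, max values exclusive


def crop(image, box):
    """Return the sub-image inside box; image is a list of pixel rows."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in image[y_min:y_max]]


def extract_object_crops(image, detections, min_score=0.5):
    """Keep confident detections and pair each label with its crop."""
    return [
        (det.label, crop(image, det.box))
        for det in detections
        if det.score >= min_score
    ]


# Toy 4x6 "image" whose pixels are just (row, col) tuples.
image = [[(r, c) for c in range(6)] for r in range(4)]
detections = [
    Detection("lamp", 0.92, (0, 0, 2, 2)),
    Detection("sofa", 0.81, (2, 1, 6, 4)),
    Detection("rug", 0.30, (0, 3, 6, 4)),  # below the threshold, so dropped
]
crops = extract_object_crops(image, detections)
labels = [label for label, _ in crops]
print(labels)
```

Each crop could then be embedded and searched on its own, which is what lets one living-room photo yield separate results for the lamp and the sofa.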

Embedding models for content-based search and recommendations

Pinterest’s visual search architecture is powered by advanced embedding models that map images into high-dimensional vector spaces. This allows visually similar items to cluster together, enabling fast and accurate content-based retrieval at scale.
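As a rough illustration of content-based retrieval in a vector space, the sketch below ranks a handful of pins by cosine similarity to a query embedding. The three-dimensional vectors and pin names are made up for the example; real embeddings have hundreds of dimensions and come from a trained model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(query, index, k=2):
    """Exhaustively score every indexed pin and return the k best matches."""
    scored = [(pin_id, cosine_similarity(query, vec)) for pin_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings: the two chairs point in similar directions.
index = {
    "mid_century_chair": [0.9, 0.1, 0.0],
    "accent_chair": [0.8, 0.3, 0.1],
    "floor_lamp": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]
results = top_k_similar(query, index, k=2)
print([pin_id for pin_id, _ in results])
```

This exhaustive scan is only practical for small collections; at the scale of billions of pins it would be replaced by an approximate index.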

Key elements of Pinterest’s embedding approach include:

- Multimodal embeddings: Combine image vectors with text metadata (captions, pin titles) for richer, more relevant results.

- Personalized embeddings: Adapt to each user’s history to create curated beauty boards, style ideas, or recipe feeds.

- Scalable vector search: Use distributed Approximate Nearest Neighbor (ANN) systems for real-time retrieval across billions of pins.

Together, these techniques make Pinterest’s AI-powered discovery both personal and scalable, delivering relevant content instantly to users worldwide.
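The article does not say which ANN algorithm Pinterest uses, so the following is only a toy sketch of one well-known technique, random-hyperplane locality-sensitive hashing (LSH). The class name, the number of hyperplanes, and the sample vectors are all assumptions chosen for illustration.

```python
import math
import random
from collections import defaultdict


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


class HyperplaneLSHIndex:
    """Toy ANN index using random-hyperplane locality-sensitive hashing."""

    def __init__(self, dim, num_planes=4, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets = defaultdict(list)

    def _signature(self, vec):
        # One bit per hyperplane: which side of the plane the vector falls on.
        return tuple(
            sum(p * v for p, v in zip(plane, vec)) >= 0 for plane in self.planes
        )

    def add(self, pin_id, vec):
        self.buckets[self._signature(vec)].append((pin_id, vec))

    def query(self, vec, k=5):
        # Only pins in the query's bucket are scored. Skipping the rest is what
        # makes the search fast, and also what makes it approximate.
        candidates = self.buckets.get(self._signature(vec), [])
        ranked = sorted(candidates, key=lambda item: cosine(vec, item[1]), reverse=True)
        return [pin_id for pin_id, _ in ranked[:k]]


index = HyperplaneLSHIndex(dim=3)
index.add("sofa_pin", [0.9, 0.1, 0.2])
index.add("rug_pin", [0.1, 0.9, 0.3])
index.add("lamp_pin", [-0.5, 0.2, 0.8])
nearest = index.query([0.9, 0.1, 0.2], k=1)
print(nearest)
```

Vectors pointing in similar directions tend to share a signature, so a query scores only a small bucket instead of the whole collection. Production systems typically use several hash tables or graph-based indexes to recover neighbors that fall just across a hyperplane.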

Machine Learning Models Driving Image Recognition Accuracy

For Pinterest, precision in visual search is crucial for user trust. Even small errors, like showing a curtain instead of a dress, can disrupt the experience. Pinterest uses advanced AI, machine learning, and visual recognition across billions of pins, with strict privacy practices to keep results accurate and globally relevant.

- Convolutional Neural Networks (CNNs): Extract detailed image features at scale.

- Visual Language Models & Multimodal Embeddings: Identify objects and context with high accuracy.

- Personalized Recommendations: Power product pins, related content, and shoppable pins tailored to each user.

- Global Compliance: Maintain relevance across the U.S., EU, and Asia while meeting data privacy and security laws.

This approach creates a seamless, globally optimized discovery experience, making Pinterest’s visual search both inspirational and actionable.

Convolutional networks and feature extraction techniques

Pinterest employs advanced CNNs and Vision Transformers (ViTs) for feature extraction, capturing not just shapes but style, context, and intent. For example, distinguishing between “office chair” and “accent chair” requires fine-grained classification across product categories.

These models leverage transfer learning from large-scale open datasets (like ImageNet) and are retrained on Pinterest’s visual data, ensuring relevance to Pinterest’s ecosystem of fashion, home, and lifestyle content.

Balancing precision with recall in search relevance

Balancing recall (surfacing many relevant images) and precision (showing only the most accurate matches) is one of the toughest challenges in AI-powered visual search. Too much recall overwhelms users; too much precision can block discovery. Pinterest tackles this trade-off with a layered approach that keeps search results both inspirational and actionable.

How Pinterest optimizes recall and precision:

- Uses advanced embedding models for broad image retrieval.

- Applies a precision reranker to surface the most relevant pins.

- Leverages metadata-driven refinements like product tags, category labels, and user-generated crops.

- Incorporates human-in-the-loop feedback for continuous improvement.

This multi-stage process ensures contextually relevant visual search results, whether users are casually browsing style ideas or making high-intent e-commerce queries.
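The retrieve-then-rerank pattern described above can be sketched in a few lines. This is an illustrative simplification: the embeddings, the `category` metadata, and the fixed `boost` value are assumptions, and a production reranker would be a learned model rather than a hand-set bonus.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def retrieve_candidates(query_vec, index, pool_size):
    """Stage 1 (recall): pull a broad pool of visually similar pins."""
    ranked = sorted(index, key=lambda pid: dot(query_vec, index[pid]), reverse=True)
    return ranked[:pool_size]


def rerank(candidates, query_vec, index, metadata, query_category, boost=0.2, k=2):
    """Stage 2 (precision): boost candidates whose category matches the query."""
    def score(pid):
        bonus = boost if metadata[pid]["category"] == query_category else 0.0
        return dot(query_vec, index[pid]) + bonus
    return sorted(candidates, key=score, reverse=True)[:k]


# Hypothetical embeddings: the red curtain looks slightly closer to the query
# image than the red dress does.
index = {
    "dress_red": [0.9, 0.1],
    "curtain_red": [0.95, 0.05],
    "dress_blue": [0.6, 0.4],
    "lamp": [0.0, 1.0],
}
metadata = {
    "dress_red": {"category": "dress"},
    "curtain_red": {"category": "curtain"},
    "dress_blue": {"category": "dress"},
    "lamp": {"category": "lighting"},
}
query_vec = [1.0, 0.0]

pool = retrieve_candidates(query_vec, index, pool_size=3)
final = rerank(pool, query_vec, index, metadata, query_category="dress")
print(pool)
print(final)
```

On visual similarity alone the curtain would rank first; the category signal in the second stage moves the dress to the top while keeping the curtain available for discovery.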

Data Infrastructure and Scalability Challenges

Pinterest’s AI-powered visual search operates at an extraordinary scale, processing billions of pins, petabytes of visual data, and millions of searches every day across the globe. Delivering fast, accurate results in real time requires a robust, distributed data infrastructure that can handle both the volume and complexity of visual content.

Handling Billions of Images with Distributed Systems

Pinterest’s visual search engine manages billions of images globally, ensuring fast and seamless user experiences. Its multi-layered, distributed backend transforms raw visual data into structured insights:

- HBase and Hadoop clusters: Batch processing of massive datasets.

- Kafka streams: Capture and process real-time user interactions.

- GPU-based clusters: Train deep learning models on visual data.

Regional data centers in the U.S., Europe, and Asia provide low-latency access while complying with GDPR, CCPA, and China’s cybersecurity laws, making Pinterest’s visual search both fast and secure.

Real-time indexing and search performance optimization

Every pin on Pinterest is not just stored; it’s analyzed, indexed, and made instantly discoverable. The platform’s architecture ensures fast, accurate search results while continuously adapting to user behavior and trends.

Key components of Pinterest’s real-time search system:

- Incremental Indexing Pipelines: Continuously update results without waiting for full batch processing.

- Vector Databases: Store complex image embeddings for efficient content-based retrieval.

- Caching Layers: Redis and Memcached serve frequent queries almost instantly.

- Dynamic Refinements: User signals and refinement bars fine-tune recommendations for each individual.

Together, these systems allow Pinterest’s AI-powered visual search to respond in real time, adapt to seasonal trends and regional shopping habits, and deliver a highly personalized, engaging, and reliable discovery experience worldwide.
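How incremental indexing and a caching layer interact can be shown with a small in-process sketch. Here an `OrderedDict` stands in for Redis or Memcached, and tag matching stands in for embedding search; the class and its invalidation rule are assumptions for illustration, and it assumes pins are only added, never edited.

```python
from collections import OrderedDict


class CachedSearchIndex:
    def __init__(self, cache_size=2):
        self.pins = {}              # pin_id -> set of tags
        self.cache = OrderedDict()  # tag -> cached results, in LRU order
        self.cache_size = cache_size
        self.cache_hits = 0

    def add_pin(self, pin_id, tags):
        """Incremental update: the pin is searchable right away, no full rebuild."""
        self.pins[pin_id] = set(tags)
        # Invalidate only the cached queries this new pin could change.
        for tag in [t for t in self.cache if t in self.pins[pin_id]]:
            del self.cache[tag]

    def search(self, tag):
        if tag in self.cache:
            self.cache.move_to_end(tag)  # mark as most recently used
            self.cache_hits += 1
            return list(self.cache[tag])
        result = sorted(pid for pid, tags in self.pins.items() if tag in tags)
        self.cache[tag] = result
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return list(result)


search_index = CachedSearchIndex()
search_index.add_pin("pin1", ["sofa", "living room"])
first = search_index.search("sofa")    # computed from the index
second = search_index.search("sofa")   # served from the cache
search_index.add_pin("pin2", ["sofa"])  # new pin invalidates the "sofa" entry
third = search_index.search("sofa")    # recomputed, now includes pin2
print(first, second, third, search_index.cache_hits)
```

The design choice worth noting is targeted invalidation: a new pin clears only the cache entries it affects, so frequent queries for unrelated terms keep being served from the cache.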

Testing and Evaluation of Image Recognition Models

Building a world-class visual search engine at Pinterest’s scale requires more than just powerful AI: it demands rigorous testing and evaluation to ensure models perform reliably across diverse users, devices, and regions. Without this careful validation, even the most advanced models could surface irrelevant or misleading results, undermining user trust and engagement.

Benchmarking Methods for Visual Search Reliability

Pinterest measures model performance using a suite of metrics designed for both accuracy and efficiency. Key evaluation metrics include:

- mAP (mean Average Precision) – for object detection accuracy.

- NDCG (Normalized Discounted Cumulative Gain) – for ranking relevance.

- Recall @ K – to measure how often the correct item appears in the top-K results.

- Precision @ K – to evaluate the proportion of relevant items in the top-K results.

- Latency benchmarks – to track response times under peak loads.

- Throughput – to assess the number of requests handled per second.

- Cross-regional performance – ensures models remain fair and reliable across US, EU, and APAC user bases, mitigating bias in recommendations.

These cross-regional evaluations are essential for Pinterest’s AI-powered shopping and discovery experiences, where visual relevance drives engagement and conversions.
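For readers who want to see how the ranking metrics above are computed, here is a minimal sketch using standard textbook definitions. Recall@K is implemented as the fraction of relevant items retrieved, which matches the "correct item in the top K" reading when each query has a single correct answer. The sample ranking and relevance grades are invented; mAP for object detection additionally needs box-overlap matching and is omitted.

```python
import math


def precision_at_k(ranked, relevant, k):
    """Share of the top-k results that are relevant."""
    return sum(1 for item in ranked[:k] if item in relevant) / k


def recall_at_k(ranked, relevant, k):
    """Share of all relevant items that appear in the top-k results."""
    if not relevant:
        return 0.0
    return sum(1 for item in ranked[:k] if item in relevant) / len(relevant)


def dcg_at_k(gains, k):
    """Discounted cumulative gain: lower positions count for less."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains[:k]))


def ndcg_at_k(ranked, relevance, k):
    """DCG of the actual ranking divided by the DCG of the ideal ranking."""
    gains = [relevance.get(item, 0) for item in ranked]
    ideal = sorted(relevance.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical query: graded relevance labels for three pins; "e" was never retrieved.
ranked = ["a", "b", "c", "d"]
relevance = {"a": 3, "c": 2, "e": 1}
relevant = set(relevance)

p3 = precision_at_k(ranked, relevant, 3)
r3 = recall_at_k(ranked, relevant, 3)
n3 = ndcg_at_k(ranked, relevance, 3)
print(round(p3, 3), round(r3, 3), round(n3, 3))
```

Precision and recall treat relevance as yes or no, while NDCG also rewards placing the most relevant pins highest, which is why ranking quality is usually tracked with both kinds of metric.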

Continuous Training Pipelines for Improved Accuracy

Pinterest’s AI doesn’t stay static. Every new pin, user click, and search interaction feeds into a continuous training loop, allowing models to evolve in real time.

- A data flow in which new pins and real-time user interactions feed into retraining datasets.

- A/B testing new models in controlled experiments.

- Feedback loops to capture user corrections (e.g., “not relevant” reports).

User feedback, like “not relevant” reports, helps fine-tune Pinterest’s AI, improving recommendation accuracy, visual recognition, and engagement over time.
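One common way to judge an A/B test like those mentioned above is a two-proportion z-test on click-through rate. The article does not describe Pinterest’s experiment analysis, so treat this as a generic sketch; the click and view counts are invented.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare click-through rates of control (a) and treatment (b).

    Returns the z statistic and the two-sided p-value under the null
    hypothesis that both variants share the same underlying rate.
    """
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Hypothetical experiment: the new model lifts CTR from 5.0% to 5.5%.
z, p_value = two_proportion_z_test(
    clicks_a=1000, views_a=20000,
    clicks_b=1100, views_b=20000,
)
print(round(z, 2), round(p_value, 3))
```

A small p-value suggests the lift is unlikely to be noise, though in practice teams also check guardrail metrics such as latency before rolling a model out.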

With benchmarking, cross-regional testing, and continuous model updates, Pinterest sustains a state-of-the-art visual search engine that scales globally while delivering personalized, accurate, and engaging results.

User Experience Impact of AI-Powered Search

At its core, Pinterest’s AI-powered visual search is designed to guide users seamlessly from inspiration to action. By combining computer vision, deep learning, and personalized recommendations, the platform transforms every interaction into a curated discovery journey. Users can explore ideas while uncovering products, trends, and styles that resonate with their personal preferences, making the experience both intuitive and engaging.

Personalized Recommendations Through Visual Similarity

Pinterest’s recommendation engine goes beyond simple object recognition. By integrating visual similarity models with advanced personalization layers, the platform tailors each user’s experience. Features include:

- Home Feed Personalization: Suggests pins based on individual browsing and engagement history.

- Rich Pins with Metadata: Adds context such as product categories, style information, and pricing.

- Style Reading Technology: Curates personalized boards for outfits, home décor, or lifestyle inspiration.

As a result, a single pin can turn into a multi-step, personalized journey, making Pinterest feel less like a tool and more like a curated assistant that understands user intent.
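A simple way to picture personalization layered on visual similarity is to build a user vector from recently engaged pins and rank candidates against it. The recency `decay`, the two-dimensional embeddings (roughly "home décor" versus "fashion"), and the pin names are assumptions for illustration only.

```python
def user_embedding(history, decay=0.5):
    """Recency-weighted average of engaged pin vectors (history is oldest first)."""
    dim = len(history[0])
    total = [0.0] * dim
    weight_sum = 0.0
    for age, vec in enumerate(reversed(history)):
        weight = decay ** age  # newest pin has age 0 and weight 1
        weight_sum += weight
        for i, value in enumerate(vec):
            total[i] += weight * value
    return [t / weight_sum for t in total]


def personalize(candidates, user_vec):
    """Order candidate pins by similarity to the user's taste vector."""
    def score(pin_id):
        return sum(c * u for c, u in zip(candidates[pin_id], user_vec))
    return sorted(candidates, key=score, reverse=True)


# The user saved one fashion pin a while ago, then two home décor pins.
history = [[0.0, 1.0], [1.0, 0.0], [1.0, 0.0]]
candidates = {
    "linen_dress": [0.1, 0.9],
    "oak_table": [0.9, 0.1],
    "throw_pillow": [0.7, 0.3],
}
taste = user_embedding(history)
feed = personalize(candidates, taste)
print(feed)
```

Because recent activity is weighted more heavily, the feed leans toward home décor while the older fashion interest still contributes a little to each score.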

Driving E-Commerce Conversions with Computer Vision

Pinterest has evolved into a visual commerce platform, where AI-powered visual search connects discovery directly with shopping. Key features include:

- AI-Powered Shopping: Detects products within lifestyle images and links them to merchants.

- Visual Similarity Technology: Suggests alternative items in the same product category, increasing purchase options.

- Advertising Ecosystem: Through Pinterest Ad Labs, brands benefit from performance targeting, CPC optimization, and analytics tools, enabling measurable ROI.

This ecosystem transforms Pinterest from a simple inspiration board into a scalable visual commerce engine, boosting both user engagement and revenue. By combining AI-driven recommendations, object detection, and personalized visual search, Pinterest helps users discover what they love and where to buy it seamlessly.

Conclusion: The Future of Pinterest’s Visual Search and AI Innovation

Pinterest’s journey from a simple pinboard to an AI-powered discovery engine demonstrates the impact of visual recognition technology and machine learning algorithms on how users interact with content, brands, and commerce. With Visual Language Models and multimodal search, Pinterest understands both objects and context in images, powering Visual Lens, product pins, shoppable pins, and color palette detection for effortless discovery of related content.

Advanced visual detection and extraction of visual features enable highly personalized recommendations, while artificial intelligence drives seamless visual commerce and engagement. Looking ahead, augmented reality, generative AI, and multimodal embedding models promise even richer global experiences, making Pinterest a leader in AI-powered search, personalized recommendations, and visual shopping.

People Also Ask

👉 How does Pinterest ensure visual search accuracy across billions of images?

Pinterest uses CNNs, multimodal embeddings, and rigorous testing (unit, integration, regression, A/B) across regions, complying with GDPR, CCPA, and local privacy laws.

👉 What are the main challenges in testing Pinterest’s image recognition system?

Challenges include billions of images, low-latency indexing, and bias. Pinterest uses mAP/NDCG benchmarks, cross-regional testing, and human-in-the-loop feedback to ensure reliability.

👉 How does Pinterest balance AI performance with user experience?

By combining GPU training, vector databases, parallel processing, and user signals, Pinterest balances precision and recall for fast, globally relevant results.

👉 Can Pinterest’s visual search detect subtle similarities between images?

Yes. Visual Language Models and multimodal embeddings capture patterns, colors, and textures to surface visually similar images, style boards, and product pins.

👉 What innovations could improve Pinterest’s visual search in the future?

Future updates may include augmented reality, generative AI, enhanced multimodal search, and upgraded Visual Lens, ensuring AI relevance across the U.S., EU, and Asia.
