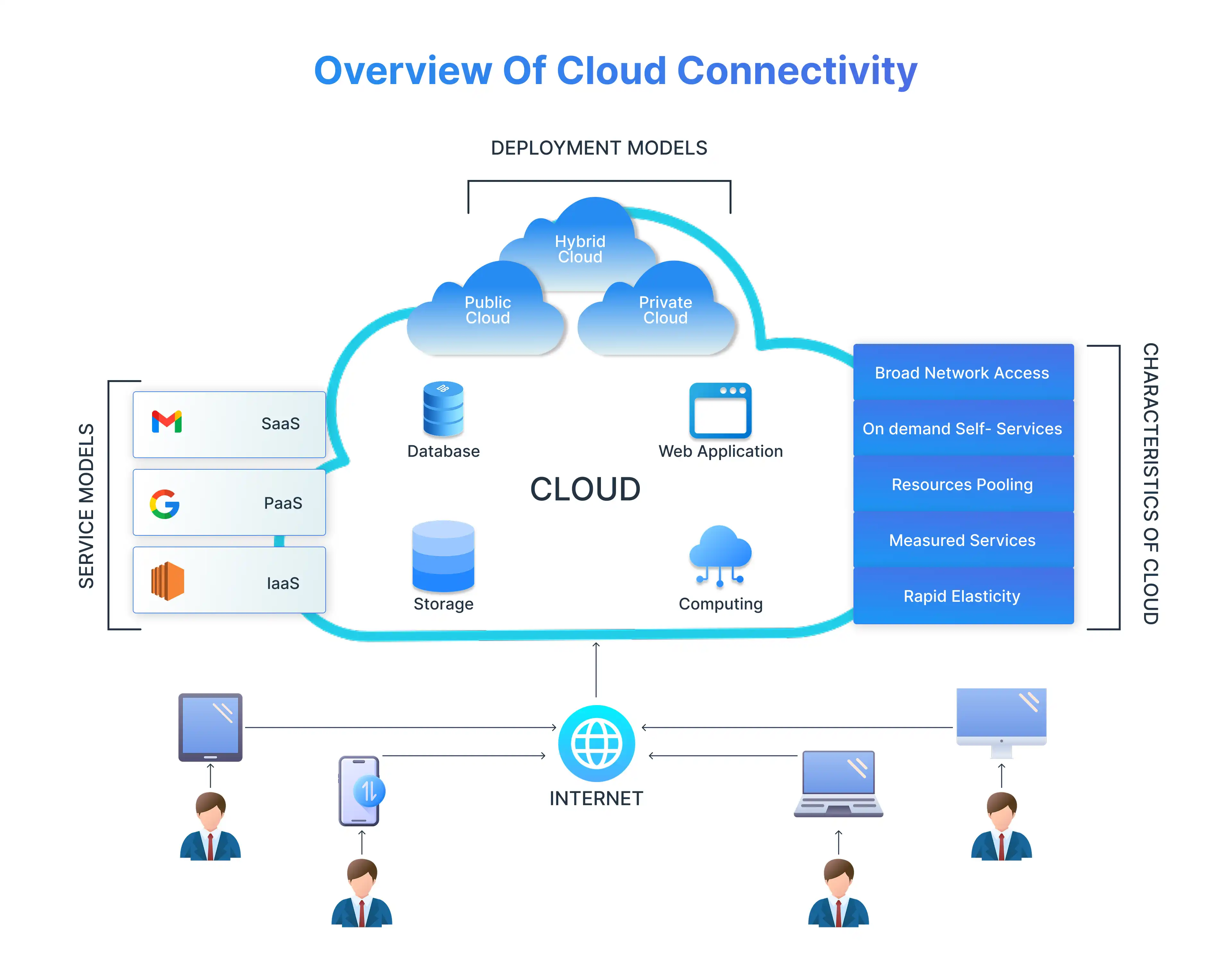

For organisations moving to the cloud, the goal is clear: achieving better scalability, agility, and performance. However, successful cloud migration goes far beyond transferring workloads — it’s about ensuring systems continue to operate accurately, securely, and efficiently after the move. This is where partnering with an end-to-end cloud performance testing company becomes the real difference-maker.

As enterprises embrace hybrid, private, and multi-cloud architectures, including platforms such as Google Cloud Platform, system complexity rises significantly. Without structured validation, issues such as data inconsistencies, degraded performance, or security vulnerabilities can disrupt operations and affect business continuity. Implementing cost-effective cloud testing solutions helps mitigate these risks, enabling smooth transitions, optimised workloads, and sustained performance across dynamic cloud environments.

There are different types of cloud migration—such as rehost, replatform, refactor, or replace—and each requires a tailored testing approach to ensure both technical accuracy and operational stability.

Testing ensures the transition works — not just technically, but operationally.

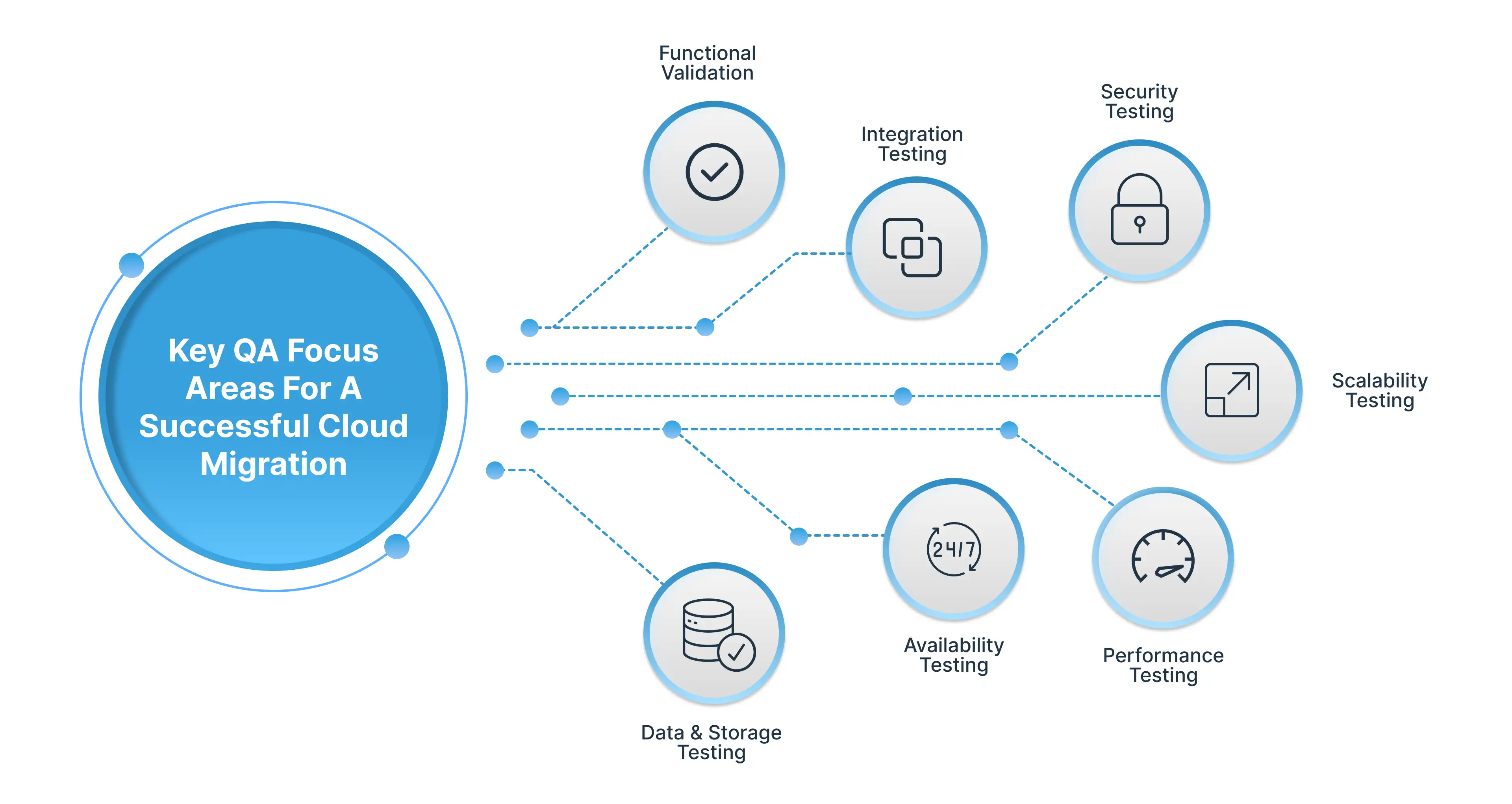

This blog explores how organisations can ensure a smooth and reliable cloud migration by focusing on five key areas:

- Assessing migration risks and testing priorities

- Validating network, identity, and data flows

- Ensuring performance, scalability, and cost efficiency

- Securing cloud environments and maintaining compliance

- Embedding automated testing into CI/CD pipelines for continuous validation

Understanding the Migration Risk Profile and Testing Priorities

Every cloud migration strategy begins with a thorough risk evaluation. IT leaders must analyse the source system, the target system, application dependencies, and the type of migration approach: rehost, replatform, refactor, or replace.

A clear risk profile helps prioritise:

- Which workloads demand strict data integrity validation

- Where performance testing must be deep, automated, and continuous

- Which services rely on security controls, user permissions, and compliance enforcement

To streamline this process, modern cloud migration tools and cost estimation platforms—such as migration cost calculators or performance analyzers—help teams align testing scope with operational budgets and cost management expectations.

The ultimate goal is not just to move workloads to the cloud, but to ensure they run at optimised performance levels on the new infrastructure, without disruption, downtime, or degradation.

Architecture Validation: Network, Identity & Data Flows

A seamless cloud data migration relies heavily on validating the underlying architecture. Even minor misconfigurations in cloud servers, network topology, or identity management systems can lead to critical downtime and performance degradation. Proper architecture validation ensures that data flows, access controls, and identity policies remain consistent in the target environment, whether the migration is to Azure or AWS.

Data Integrity & Consistency Testing

During cloud data migration, maintaining data integrity is critical to ensure that information remains accurate, consistent, and secure throughout the transfer. Whether organisations use Microsoft cloud storage, data replication tools, or automated migration frameworks, each data movement must be validated through structured verification steps.

Partnering with a data integrity and performance testing company helps ensure every migration phase is tested for accuracy, consistency, and speed, preventing data loss, corruption, or latency issues that could impact business continuity. Validation at this stage typically covers the following:

- Database schemas remain consistent across cloud servers

- Checksum validation confirms end-to-end data consistency

- Data quality checkpoints safeguard against corruption during migration

- Data transformation rules execute accurately to preserve logical structure

Any mismatch in migrated data risks business disruption.
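The checksum validation mentioned above can be illustrated with a minimal sketch. It assumes both sides of the migration can be read as sequences of rows; the function names `table_checksum` and `tables_match` are hypothetical and not taken from any specific migration tool.

```python
import hashlib
from typing import Iterable, Sequence


def table_checksum(rows: Iterable[Sequence[object]]) -> str:
    """Return an order-independent SHA-256 digest for a collection of rows.

    Hypothetical helper: each row is hashed on its own, the row digests are
    sorted, and the sorted digests are hashed again, so row order on the
    source and target does not affect the result.
    """
    row_digests = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()
        for row in rows
    )
    combined = hashlib.sha256()
    for digest in row_digests:
        combined.update(digest.encode("ascii"))
    return combined.hexdigest()


def tables_match(source_rows, target_rows) -> bool:
    """True when source and target contain the same rows (in any order)."""
    return table_checksum(source_rows) == table_checksum(target_rows)


source = [(1, "alice"), (2, "bob")]
target = [(2, "bob"), (1, "alice")]  # same rows, different order
print(tables_match(source, target))
```

This sketch relies on `repr` producing identical text for equal values on both sides. In practice, database drivers may return different types for the same column (for example, decimal versus float), so values would likely need to be normalised before hashing.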

Network and Latency Performance Validation

Cloud performance testing verifies that migrated applications and data workloads operate efficiently in their new environment. It validates scalability, response times, and throughput on the target platform, such as AWS, supporting business continuity and optimal resource utilisation.

Once workloads run in cloud infrastructure, network performance must be validated across:

VPC / VNet Routing:

Cloud migration often alters how services communicate across networks. Validating VPC/VNet routing ensures correct traffic flow between subnets, on-prem systems, and cloud services—preventing latency issues, connectivity failures, or blocked inter-service communication.

DNS Resolution:

Post-migration DNS testing confirms applications are resolving to the correct cloud endpoints rather than legacy environments. Proper TTL, failover, and routing validation prevent misrouting, access delays, or service disruptions.
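As a rough illustration of the DNS check described above, the sketch below flags hostnames that still resolve to legacy addresses. The helper names, the hostname, and the IP addresses are all placeholders; only `socket.getaddrinfo` is a real standard-library call.

```python
import socket
from typing import Callable, Iterable, Set


def resolve_ipv4(hostname: str) -> Set[str]:
    """Resolve a hostname to its set of IPv4 addresses via the system resolver."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (address, port) pair.
    return {info[4][0] for info in infos}


def stale_addresses(
    hostname: str,
    legacy_ips: Iterable[str],
    resolver: Callable[[str], Set[str]] = resolve_ipv4,
) -> Set[str]:
    """Return the legacy IPs that the hostname still resolves to.

    An empty result suggests the record no longer points at the old
    environment. The resolver is injectable so the check can be
    exercised without network access.
    """
    return resolver(hostname) & set(legacy_ips)


# Example with a stubbed resolver (placeholder hostname and addresses).
fake_resolver = lambda host: {"10.0.0.5", "203.0.113.7"}
print(stale_addresses("app.example.com", ["10.0.0.5"], resolver=fake_resolver))
```

A check like this only reflects what the local resolver returns at that moment. Cached records with long TTLs can keep returning old addresses for a while, which is one reason TTL validation is listed alongside routing and failover.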

CDN Acceleration Paths:

Migration can change CDN behaviour and global content routing. Testing CDN acceleration paths ensures edge caching, regional routing, and TLS configurations continue to deliver low-latency performance across all user locations.

API Response Times:

Network paths and service dependencies shift after migration, so API latency must be re-evaluated. Testing ensures each microservice and integration meets SLA benchmarks under realistic load and concurrency conditions.

These tests confirm that real-world users experience stable performance—even when distributed globally.
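One way to compare API latency against an SLA benchmark is a percentile check over collected response times. The sketch below uses the nearest-rank method and a 95th-percentile limit; both the choice of percentile and the sample values are assumptions for illustration, not figures from any particular SLA.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty sequence (pct in 1..100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range 1..100")
    ordered = sorted(samples)
    # Nearest-rank: the smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_sla(latencies_ms: Sequence[float], p95_limit_ms: float) -> bool:
    """True when the 95th-percentile latency is within the given limit."""
    return percentile(latencies_ms, 95) <= p95_limit_ms


# Hypothetical measurements in milliseconds, with one slow outlier.
measured = [120, 130, 110, 900]
print(meets_sla(measured, p95_limit_ms=500))
```

Percentiles are used here rather than averages because a few slow requests can hide behind a healthy mean while still affecting real users.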

Performance, Scalability, and Cost Optimisation Modelling

Cloud environments introduce new resource configurations, autoscaling settings, and usage-based billing. Performance testing ensures the application meets response time and throughput expectations under varying workloads. Scalability modelling checks how well the system can grow horizontally or vertically during traffic spikes without slowing down services.

Cost optimisation analysis matches compute, storage, and network provisioning with actual usage patterns. This prevents over-provisioning and reduces unnecessary cloud costs.

The cloud is designed for elasticity—but only when configured correctly.

Performance testing, load testing in cloud environments, and auto-scaling validation ensure applications can:

- Scale under peak load

- Maintain throughput consistency

- Prevent bottlenecks in compute or storage

- Avoid unexpected cloud computing cost spikes

Here, teams validate:

- Virtual Machine Sizing Strategies – Testing helps identify the right VM size by balancing CPU, memory, and storage needs to prevent overprovisioning or throttling.

- Concurrency Limits – Validates how the system handles multiple users or transactions simultaneously to ensure stability and responsiveness under load.

- Queue and Cache Behaviour – Ensures queues process workloads efficiently and cache systems maintain fast data access without latency or stale data.

- Compute/Storage Bandwidth Tiers – Tests data transfer speeds and IOPS to match workload intensity, ensuring performance without unnecessary cloud costs.

Testing this before go-live prevents performance incidents that are costly to fix after deployment.
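The VM sizing point above can be sketched as a simple selection rule: given peak usage observed during load tests, pick the cheapest size that still leaves some headroom. The catalogue entries, prices, and the 20% headroom default are invented for illustration and do not correspond to any provider's actual offerings.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class VmSize:
    """Hypothetical VM size entry; real catalogues come from the cloud provider."""
    name: str
    vcpus: int
    memory_gib: float
    hourly_cost: float


def pick_vm_size(
    catalog: Sequence[VmSize],
    peak_vcpus: float,
    peak_memory_gib: float,
    headroom: float = 0.2,
) -> Optional[VmSize]:
    """Return the cheapest size covering peak usage plus headroom, or None."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    candidates = [
        size for size in catalog
        if size.vcpus >= need_cpu and size.memory_gib >= need_mem
    ]
    return min(candidates, key=lambda size: size.hourly_cost, default=None)


catalog = [
    VmSize("small", 2, 8, 0.10),
    VmSize("medium", 4, 16, 0.20),
    VmSize("large", 8, 32, 0.40),
]
# Peak of 3 vCPUs and 10 GiB observed under load (made-up numbers).
print(pick_vm_size(catalog, peak_vcpus=3.0, peak_memory_gib=10.0))
```

A real sizing decision would also weigh storage throughput, network bandwidth tiers, and burst behaviour, which this sketch leaves out.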

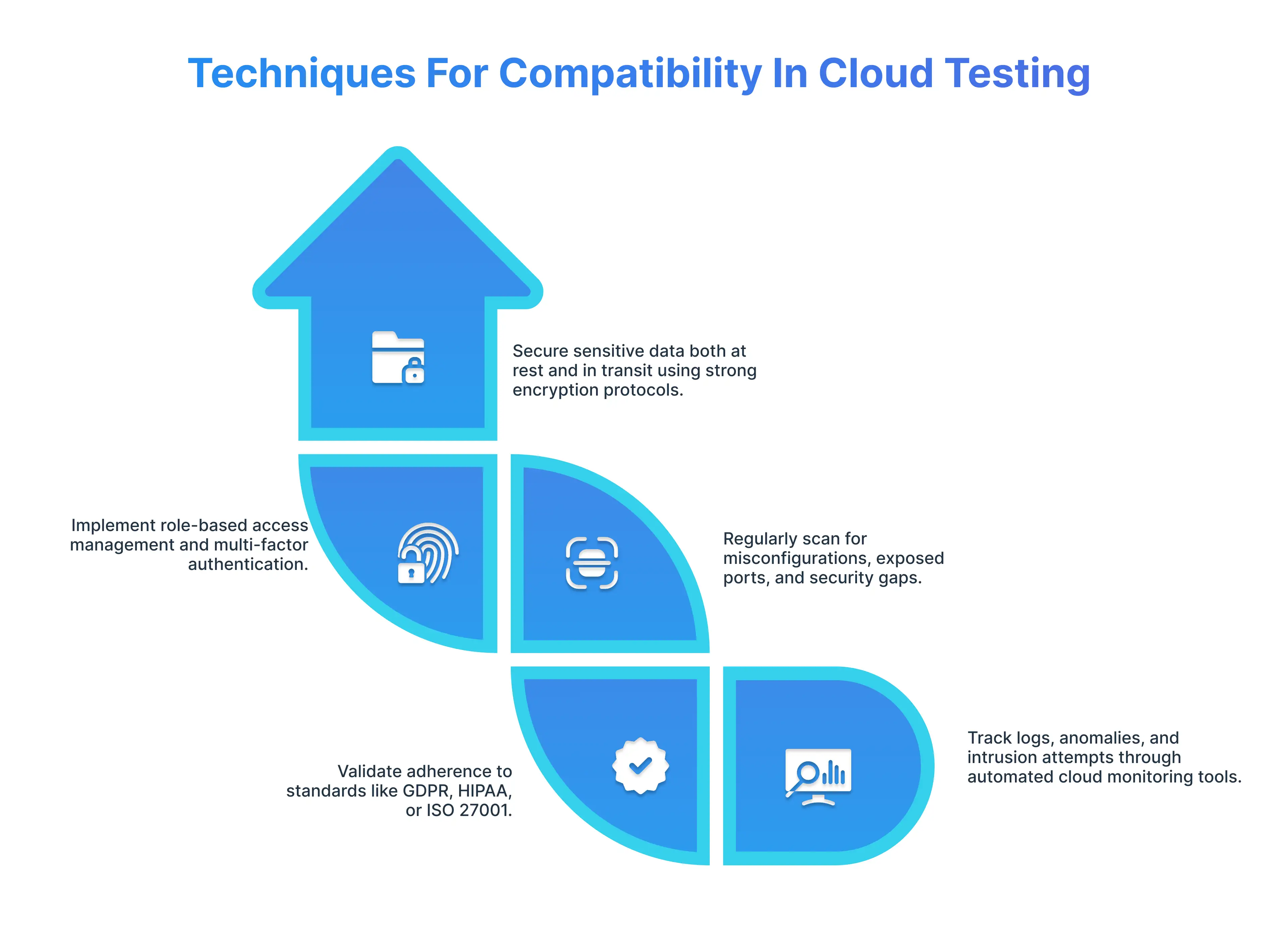

Security & Compliance Alignment

As workloads transition, cloud data security and cloud infrastructure security controls must be reinforced. Data must be protected from data breaches, unauthorised access, and misconfiguration.

Testing must validate:

- Encryption protocols (at rest and in transit)

- User permissions and identity mapping (IAM, Azure AD, SSO)

- Alignment with security standards and regulations (ISO, SOC 2, GDPR, HIPAA)

- Audit logs and monitoring system behaviour

- Penetration testing and vulnerability scanning

Enterprises must verify not just security protocols, but compliance readiness for regulatory requirements.

This ensures enterprise-level business continuity and trust.

Platform Compatibility & Regression Testing

After migration, applications must continue to function consistently across the new cloud environment’s operating systems, runtime versions, middleware, and managed services. Compatibility testing checks that configurations, drivers, APIs, and dependencies work as expected. Regression testing checks that no functions break during refactoring, containerization, or re-architecture. It protects business continuity and user experience.

Even when the environment is technically correct, application behaviour may change due to:

- Runtime version differences

- API behaviour shifts

- Driver or library updates

- Platform-specific defaults

Regression testing and integration testing verify that core application functionality remains unchanged across all workflows.
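Before running regression suites, it can help to list where the source and target environments actually differ. The sketch below compares two version manifests; the component names and version strings are made up, and how such manifests are collected is outside its scope.

```python
from typing import Dict, Optional, Tuple


def version_drift(
    source: Dict[str, str],
    target: Dict[str, str],
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Map each component whose version differs to (source_version, target_version).

    A value of None means the component is absent on that side.
    """
    drift = {}
    for name in sorted(set(source) | set(target)):
        before, after = source.get(name), target.get(name)
        if before != after:
            drift[name] = (before, after)
    return drift


# Hypothetical manifests for the old and new environments.
on_prem = {"python": "3.8", "openssl": "1.1.1"}
cloud = {"python": "3.11", "openssl": "1.1.1", "libpq": "15"}
print(version_drift(on_prem, cloud))
```

Components that appear in the drift report are reasonable candidates for focused regression and integration tests, since they are where runtime, driver, or library behaviour is most likely to have shifted.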

End-users interact with cloud applications from different browsers, devices, operating systems, and network conditions. Client-side compatibility testing is needed to keep performance steady, UI responsive, and functions accurate.

This involves performing:

- Cloud-based browser testing (validating UI and functionality across browser versions)

- Cross-browser testing in cloud environments (ensuring layout and script stability on Chrome, Edge, Safari, Firefox)

- Mobile testing in the cloud (verifying responsiveness and performance at mobile screen breakpoints)

- Cloud-based mobile app testing (testing native and hybrid applications on distributed real devices)

Cloud Testing Tools: Use Cases and Best-Fit Scenarios

Integrating Testing Into CI/CD — Automated Test Gates and Rollback Planning

Cloud migration testing should be embedded directly into CI/CD pipelines so every code change is validated before deployment. Automated test gates stop unstable builds from moving forward. Predefined rollback strategies let teams quickly revert if performance, security, or integration problems occur. This approach reduces release risk and supports continuous, reliable cloud delivery. Continuous testing is essential to maintain quality.

By embedding automated testing into CI/CD pipelines, organisations can:

- Run test cases automatically during deployment

- Trigger rollback if performance, stability, or security thresholds fail

- Maintain predictable release cycles

This ensures the cloud migration is treated not as a one-time event but as a controlled, continually validated process.
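An automated test gate of the kind described above can be reduced to a threshold comparison whose result decides between promotion and rollback. The sketch below is a minimal, tool-agnostic version; the metric names and limits are hypothetical, and a real pipeline would call something like this from its own deployment step.

```python
from typing import Dict, List


def failed_gates(metrics: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """Return the names of gates that failed.

    A gate fails when its metric exceeds the limit or was never reported;
    treating missing metrics as failures avoids promoting unmeasured builds.
    """
    return [
        name for name, limit in limits.items()
        if name not in metrics or metrics[name] > limit
    ]


def release_decision(metrics: Dict[str, float], limits: Dict[str, float]) -> str:
    """Return "rollback" if any gate failed, otherwise "promote"."""
    return "rollback" if failed_gates(metrics, limits) else "promote"


# Hypothetical thresholds and post-deployment measurements.
limits = {"p95_latency_ms": 500, "error_rate_pct": 1.0, "critical_vulns": 0}
measured = {"p95_latency_ms": 420, "error_rate_pct": 2.5, "critical_vulns": 0}

print(failed_gates(measured, limits))
print(release_decision(measured, limits))
```

All limits here are upper bounds. Metrics where higher is better, such as availability, would need the comparison inverted or expressed as a shortfall.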

Business KPIs & Post-Migration Monitoring Strategy

After migration is complete, teams must continuously monitor performance, availability, and user experience to ensure the cloud environment meets defined business KPIs. This involves tracking response times, cost utilization benchmarks, SLA adherence, and customer journey metrics to measure real business impact.

By leveraging pay-per-use cloud testing services, organisations gain flexible access to performance and reliability testing without overcommitting resources. This approach enables continuous observability, early issue detection, and proactive performance tuning—ensuring the cloud environment consistently delivers measurable business value.

Conclusion — Why Testing Defines the Success of Every Cloud Migration

Cloud migration is not simply a technical transfer—it represents a complete business transformation. However, true value from migration only emerges when systems continue to operate securely, efficiently, and reliably on the new platform.

To achieve this, organisations must conduct comprehensive validation across performance, security, architecture, and user experience. Without rigorous testing, they risk outages, data inconsistencies, compliance failures, and expensive post-migration fixes.

Partnering with an on-demand cloud migration testing company helps businesses ensure smooth transitions by aligning testing depth with their unique workload requirements and budget expectations. Transparent cloud migration testing services pricing further enables teams to plan effectively and achieve measurable ROI.

Ultimately, Cloud Migration Testing is the hidden hero—it transforms cloud adoption from a leap of faith into a controlled, predictable, and ROI-driven process, ensuring the cloud delivers on its promise of flexibility, scalability, innovation, and long-term business advantage.

FAQ

Q. Which applications are best suited to cloud migration?

Applications with scalable workloads, API-based architectures, and modular or container-native designs are the best candidates for cloud migration. Legacy monolithic systems can also be migrated, although in most cases they must be refactored to work effectively in the cloud.

Q. How long does a standard cloud migration testing cycle take?

Testing time varies with the complexity of the application and the volume of data. A cycle typically takes 2 to 8 weeks and covers functional validation, data integrity checks, performance benchmarking, and security checks.

Q. Which performance metrics should be checked after cloud cutover?

The most important metrics are latency, response time, throughput, CPU and memory usage, and storage IOPS. In addition, cost KPIs, auto-scaling behaviour, and user experience metrics should be monitored to keep the migrated environment efficient.

Q. Should hybrid cloud environments be tested differently?

Yes. Hybrid configurations combine on-prem and cloud systems, so testing should confirm network routing, identity federation, API reliability, and data synchronisation between environments. This supports smooth continuity of operations.

Q. How can businesses determine the cost variation before and after migration?

Cloud cost calculators, workload profiling, and performance simulation enable businesses to estimate costs before migration. After migration, regular cost monitoring and auto-scaling policy tuning keep costs efficient and prevent overruns.
