Artificial Intelligence (AI) is no longer experimental; it is now at the core of digital transformation across industries. From generative AI tools such as ChatGPT and other conversational assistants to machine learning, computer vision, and Natural Language Processing, AI is reshaping how modern software systems are designed, deployed, and scaled. Platforms such as Google Cloud AI and IBM Watson enable faster innovation with pre-trained models, while emerging areas like AR/VR, predictive maintenance, and cloud computing push AI adoption even further.

However, rapid AI integration also introduces serious risks—biases in training data, concept drift, lack of model robustness, regulatory non-compliance, and hidden defects that impact system quality and customer satisfaction. This is where quality assurance becomes mission-critical.

QA teams ensure that AI-powered systems are safe, scalable, and reliable by embedding software testing across the SDLC. By combining test automation, continuous testing, and AI-driven techniques, QA teams safeguard data integrity, meet regulatory standards, and reduce business risk, making them indispensable to modern AI initiatives.

The Role of Quality Assurance in AI Development

As Artificial Intelligence becomes deeply embedded in business-critical software, the role of quality assurance evolves from defect validation to risk governance. AI systems influence autonomous decision-making, customer interactions, and regulatory-sensitive operations, making QA a foundational pillar for system quality, scalability, and trust.

Unlike traditional applications, AI models continuously learn, adapt, and respond to changing inputs. QA teams therefore act as guardians of reliability, ensuring AI systems behave safely across environments, datasets, and usage patterns—both expected and unforeseen.

Understanding Quality Assurance in AI-Driven Software

Quality assurance is a structured, proactive discipline that ensures software meets functional, performance, security, and regulatory requirements throughout the SDLC. In AI-driven software, QA goes far beyond conventional quality control by validating not just code, but data, models, and decisions.

Expanded Scope of QA in AI Systems

Modern AI QA must address:

- Training data validation

Ensuring data integrity, representativeness, balance, and absence of systemic biases. Poor data quality directly translates into unreliable model behavior.

- Algorithm behavior validation

Verifying consistent outputs across datasets, environments, and retrained versions while assessing model robustness under stress.

- Explainability and transparency

Especially in regulated domains like medical devices and food and beverages, QA teams must confirm AI decisions can be interpreted and audited.

- Ethical and regulatory compliance

Ensuring adherence to regulatory standards, privacy laws, and industry-specific requirements.
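A training-data check can start with something as simple as measuring class balance. The sketch below is illustrative only: it assumes labels are available as a Python list, and the function name `check_class_balance` and the `min_share` threshold are hypothetical choices, not an industry standard. Passing the classes you expect lets it also catch categories that are missing entirely.

```python
from collections import Counter


def check_class_balance(labels, expected_labels=None, min_share=0.1):
    """Report each class's share of the dataset and whether all shares meet min_share.

    labels: list of class labels from the training set.
    expected_labels: optional iterable of classes that should be present; any
        class listed here but absent from labels gets a share of 0.
    min_share: hypothetical minimum fraction each class should represent.
    """
    if not labels:
        raise ValueError("cannot check balance of an empty dataset")
    counts = Counter(labels)
    total = len(labels)
    all_labels = set(counts) | set(expected_labels or ())
    # Sorting keeps the report stable between runs (assumes labels are comparable).
    shares = {label: counts[label] / total for label in sorted(all_labels)}
    ok = all(share >= min_share for share in shares.values())
    return ok, shares


if __name__ == "__main__":
    labels = ["approve"] * 90 + ["reject"] * 10
    ok, shares = check_class_balance(
        labels, expected_labels=["approve", "reject", "review"], min_share=0.2
    )
    print(ok, shares)  # False {'approve': 0.9, 'reject': 0.1, 'review': 0.0}
```

A failing check does not by itself mean the data is unusable; it flags the dataset for review, resampling, or further data collection.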

Core Pillars of an Effective AI QA Strategy

A mature QA strategy includes:

- Early defect detection through shift-left testing

- High test coverage across unit tests, API tests, and regression tests

- Static analysis and mandatory code review for AI pipelines

- Risk-based impact analysis for model changes

- Continuous feedback loops via CI/CD pipelines and continuous testing

For AI systems, QA must also validate:

- Hyperparameter configuration

- Synthetic data accuracy and relevance

- Model performance across rare and real-world edge cases

The Unique Challenges of AI Systems

AI systems are probabilistic, not deterministic. This fundamental difference introduces testing challenges that traditional software QA is not designed to handle.
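Because outputs are probabilistic, a test usually asserts on aggregate behavior over a labeled evaluation set rather than on one exact output. One way to do this, shown here as a sketch, is to compare a lower confidence bound on accuracy (the Wilson score interval) against a target. The function names, the 0.9 target, and `z=1.96` (roughly 95% confidence) are assumptions for illustration.

```python
import math


def wilson_lower_bound(successes, trials, z=1.96):
    """Lower end of the Wilson score interval for a success proportion."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z * z / trials
    center = p + z * z / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (center - margin) / denom


def accept_model(predictions, labels, min_accuracy, z=1.96):
    """Accept only if the accuracy lower bound, not just the point estimate, meets the target."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equal-length, non-empty predictions and labels")
    correct = sum(pred == label for pred, label in zip(predictions, labels))
    accuracy = correct / len(labels)
    lower = wilson_lower_bound(correct, len(labels), z)
    return lower >= min_accuracy, accuracy, lower
```

With these definitions, 95 correct predictions out of 100 would be rejected against a 0.9 target, because the lower bound is roughly 0.89, while 950 out of 1,000 would pass. The test asks whether the evidence supports the target, not merely whether the observed accuracy happens to exceed it.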

Key AI-Specific QA Challenges

- Biases in training data

Even minor data imbalance can amplify unfair or unsafe decisions at scale.

- Concept drift

Model accuracy degrades over time as real-world data changes, requiring continuous validation.

- Limited test data for rare scenarios

Critical failures often occur in low-frequency edge cases.

- Probabilistic output validation

Outputs are not binary pass/fail, making acceptance criteria more complex.

- Regulatory requirements

AI used in regulated sectors must demonstrate predictable, auditable behavior.
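Drift validation is often automated by comparing the distribution of a feature, or of model scores, in production against a training-time baseline. The sketch below computes the Population Stability Index (PSI) using equal-width bins derived from the baseline; the bin count and the `eps` floor are assumptions. PSI detects shifts in input or score distributions, which is a common early signal of concept drift rather than a direct measure of it.

```python
import math


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """Compute PSI between a baseline sample and a current sample of one numeric feature.

    Bin edges are equal-width and derived from the baseline range. Values outside
    that range are clamped into the first or last bin. eps prevents log(0) when a
    bin is empty in either sample.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread; cannot build bins")
    width = (hi - lo) / bins

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift worth investigating, though teams typically calibrate thresholds per feature.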

According to findings highlighted in the World Quality Report, AI initiatives frequently fail due to:

- Low QA maturity

- High defect leakage rate

- Insufficient process agility

Without robust QA governance, enterprises experience longer Mean Time to Detect (MTTD) and Mean Time to Repair (MTTR), eroding trust and compliance readiness.
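Both metrics are averages over incident records. The sketch below assumes each incident records when it started, when it was detected, and when it was resolved, and it measures MTTR from detection to resolution. Organizations define the MTTR start point differently (some measure from failure onset), so treat these definitions and the `Incident` structure as assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime   # when the defect began affecting the system (assumed known)
    detected: datetime  # when monitoring or testing flagged it
    resolved: datetime  # when a fix was deployed and verified


def _mean(deltas):
    deltas = list(deltas)
    if not deltas:
        raise ValueError("no incidents to average")
    return sum(deltas, timedelta()) / len(deltas)


def mean_time_to_detect(incidents):
    return _mean(i.detected - i.started for i in incidents)


def mean_time_to_repair(incidents):
    # Measured here from detection to resolution; adjust if your definition differs.
    return _mean(i.resolved - i.detected for i in incidents)


if __name__ == "__main__":
    incidents = [
        Incident(datetime(2024, 5, 1, 10, 0), datetime(2024, 5, 1, 10, 30), datetime(2024, 5, 1, 12, 30)),
        Incident(datetime(2024, 5, 2, 9, 0), datetime(2024, 5, 2, 10, 30), datetime(2024, 5, 2, 11, 0)),
    ]
    print(mean_time_to_detect(incidents))  # 1:00:00
    print(mean_time_to_repair(incidents))  # 1:15:00
```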

Test Automation: The Backbone of Efficient QA

What is Test Automation in AI Contexts?

Test automation involves executing test cases through automated test scripts and testing frameworks to minimize manual effort while maximizing accuracy and repeatability.

In AI ecosystems, automated testing enables validation across:

- Distributed microservices

- Scalable cloud environments

- Multiple platforms and devices

Commonly Used Automation Tools

- Selenium and Appium for UI and mobile testing

- TestComplete, Katalon Studio, and Appvance for enterprise automation

- JMeter for performance testing and load validation

- HeadSpin for real-device cloud testing

- Axivion for advanced static analysis

Automation ensures:

- Consistency across test environments

- Integration with version control

- Seamless alignment with DevOps and CI/CD

How AI Transforms Quality Assurance: Key Advantages of Leveraging AI in QA

Artificial Intelligence is rapidly redefining how quality assurance operates in modern software development. By embedding AI into QA processes, organizations can move beyond manual testing limitations and adopt smarter, faster, and more predictive testing approaches. Leveraging AI in QA enables teams to improve accuracy, accelerate delivery, and ensure consistent application quality across complex and evolving systems.

1. Expedited Timelines

AI accelerates the QA lifecycle by automating repetitive testing tasks and intelligently prioritizing test execution. With AI-driven automation and continuous testing, teams can shorten release cycles, reduce regression time, and deliver features faster without compromising quality.

2. Well-Researched Build Release

AI analyzes historical defects, test results, and production data to assess release readiness. This data-driven insight helps QA teams make informed go/no-go decisions, reducing the risk of unstable or low-quality releases reaching end users.

3. Effortless Test Planning

AI simplifies test planning by automatically identifying critical areas of the application based on risk, usage patterns, and past failures. This allows QA teams to focus on high-impact test scenarios instead of manually creating exhaustive test plans.
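A minimal, rule-based version of risk-based planning can rank application areas by a score that combines failure history, usage, and whether the area changed in this release. An AI-assisted tool would learn such weights from historical data; here the `Area` fields, the scoring formula, and `change_weight` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    recent_failures: int  # defects or test failures seen in recent releases
    usage_share: float    # fraction of user sessions that touch this area
    changed: bool         # whether this release modifies the area


def risk_score(area, change_weight=2.0):
    # +1 so areas with no failure history still rank by usage.
    score = (1 + area.recent_failures) * area.usage_share
    return score * change_weight if area.changed else score


def plan_tests(areas, budget):
    """Return the names of the `budget` highest-risk areas to test first."""
    ranked = sorted(areas, key=risk_score, reverse=True)
    return [area.name for area in ranked[:budget]]


if __name__ == "__main__":
    areas = [
        Area("checkout", recent_failures=3, usage_share=0.4, changed=True),
        Area("search", recent_failures=0, usage_share=0.5, changed=False),
        Area("profile", recent_failures=1, usage_share=0.1, changed=True),
        Area("login", recent_failures=0, usage_share=0.9, changed=False),
    ]
    print(plan_tests(areas, budget=2))  # ['checkout', 'login']
```

Checkout ranks first because it changed and has recent failures; login ranks second because most sessions touch it.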

4. Expanded Role of a Tester

With AI handling repetitive execution, testers evolve into quality strategists. Their role expands to include exploratory testing, ethical AI validation, usability analysis, and collaboration with developers and product teams on system quality and risk management.

5. Predictive Analysis

AI-powered predictive analytics forecasts defect-prone areas, performance issues, and potential failures before they occur. This proactive approach helps teams prevent defects early rather than reacting after issues surface in production.

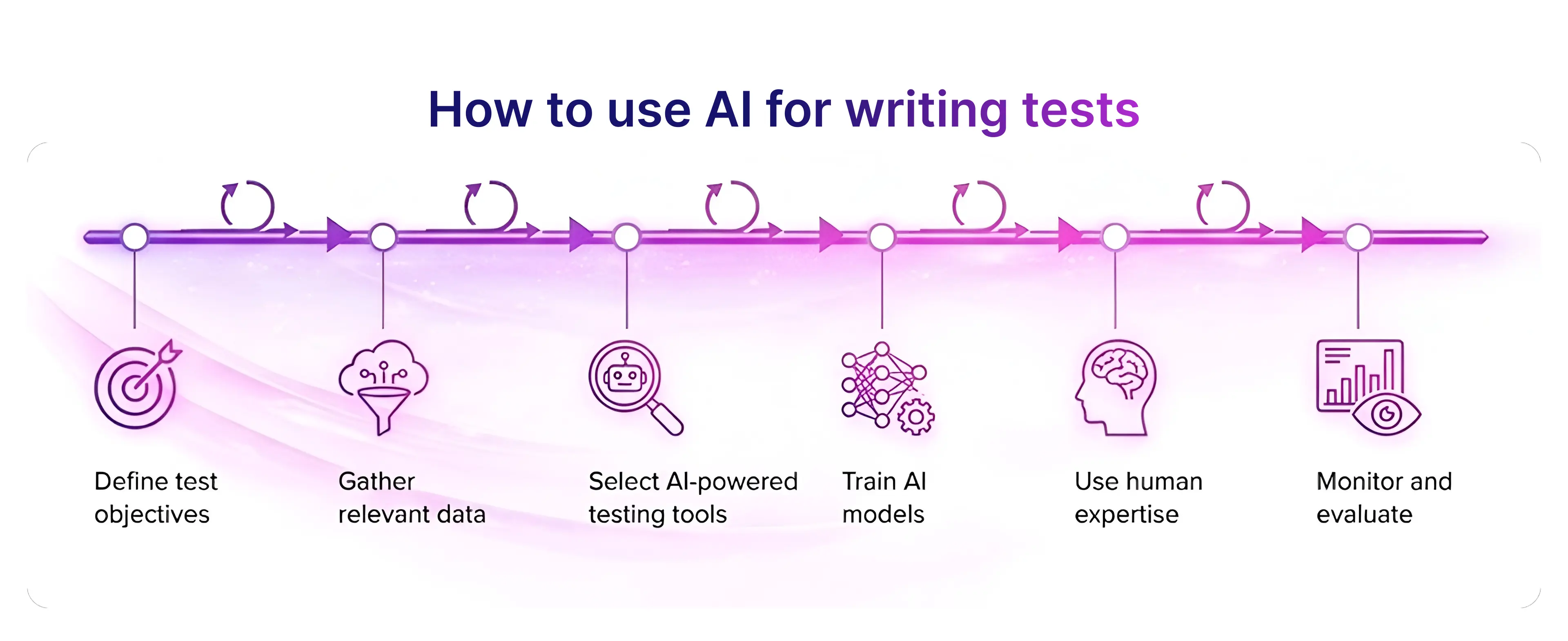

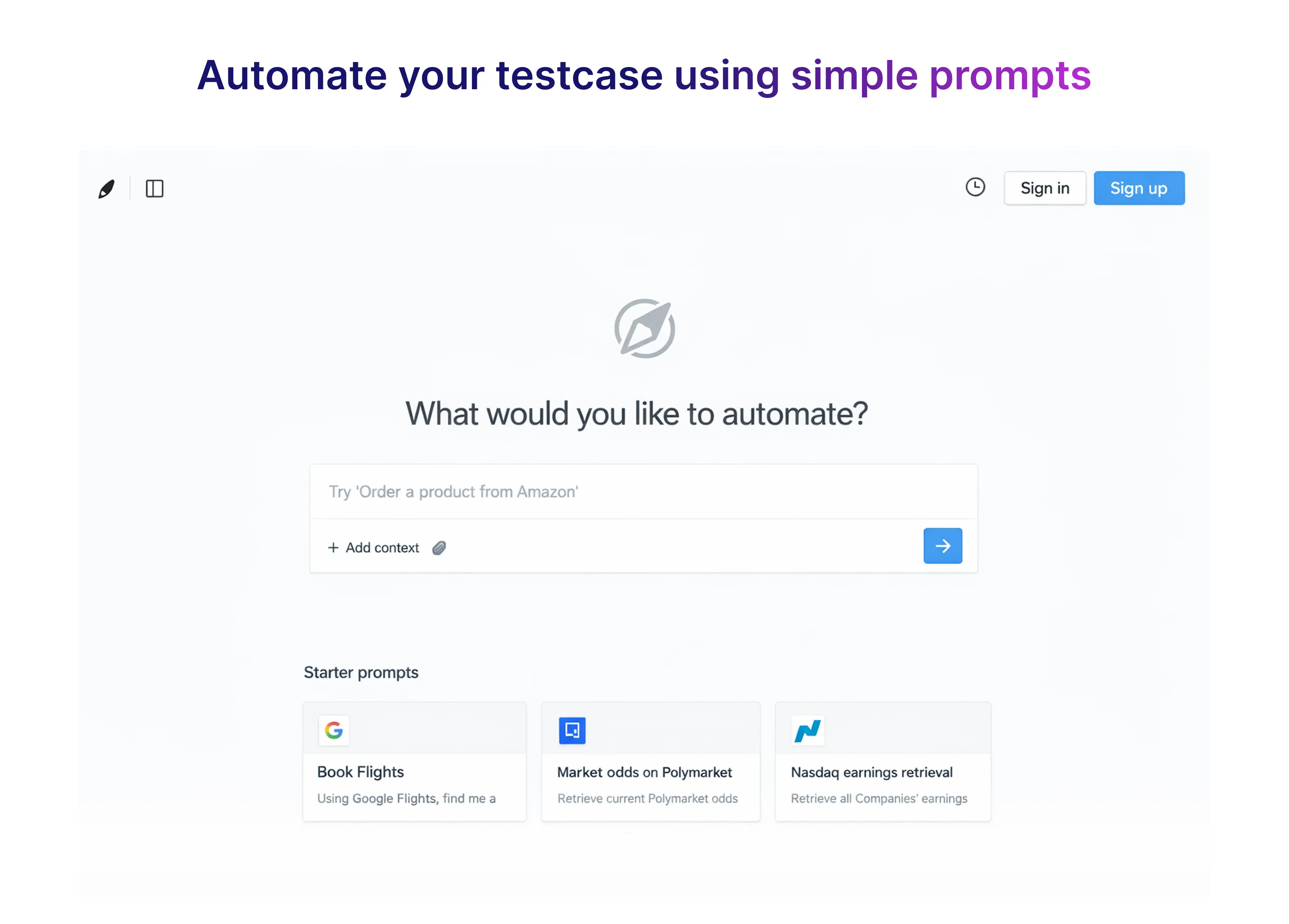

6. Enhanced Writing of Test Cases

Using Natural Language Processing and AI-driven test case generation, testers can create high-quality test cases faster. AI converts requirements, user stories, and acceptance criteria into structured, reusable test cases with better coverage and accuracy.

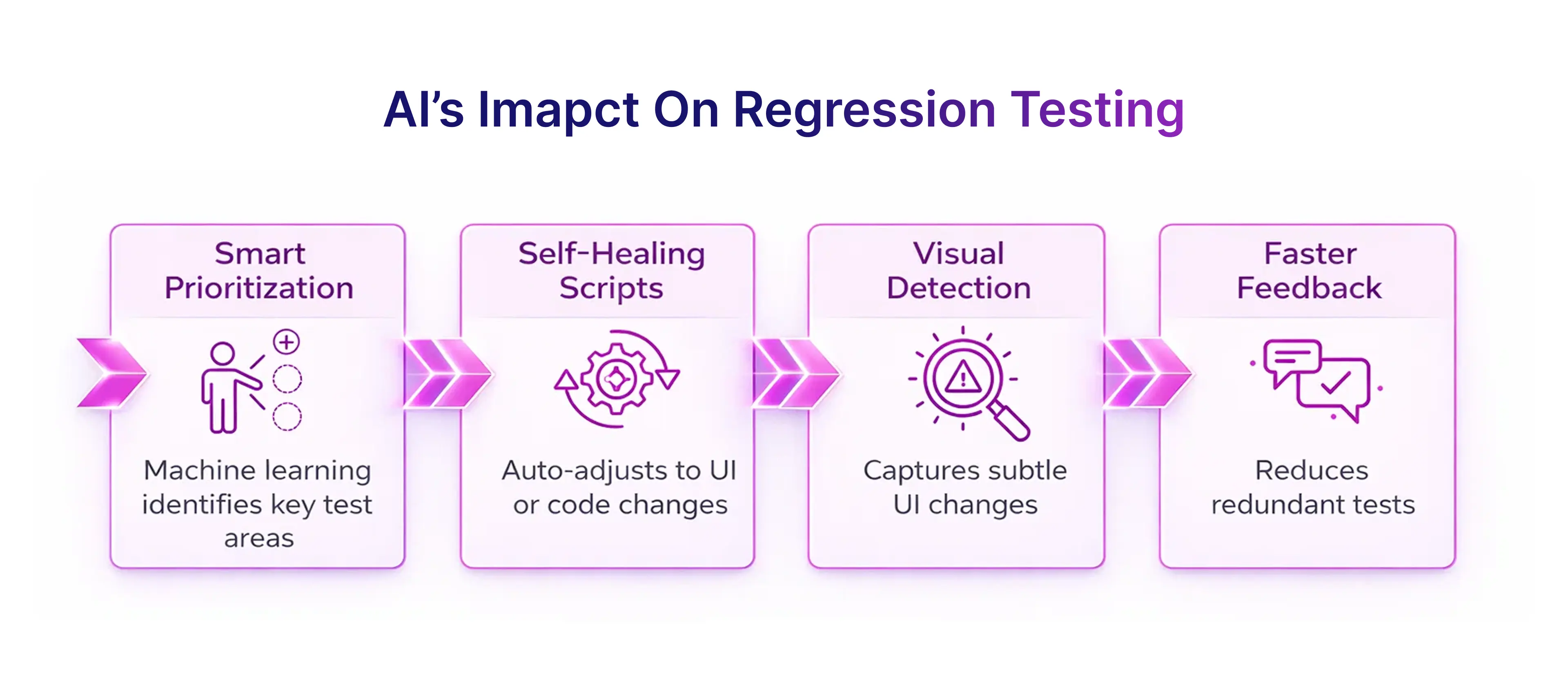

7. Improved Regression Testing

AI optimizes regression testing by selecting the most relevant test cases based on recent code changes and impact analysis. This ensures faster regression cycles while maintaining confidence that core functionalities remain stable.
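Change-based test selection can be sketched with a map from each test to the source files it exercises, for example exported from a coverage tool. The function and data shapes below are assumptions for illustration. Changed files that no test covers are returned separately, since a cautious pipeline might fall back to the full suite in that case.

```python
def select_regression_tests(coverage_map, changed_files, always_run=()):
    """Pick tests whose covered files intersect the change set.

    coverage_map: dict of test name -> set of source files the test exercises.
    changed_files: files modified by the commit or pull request.
    always_run: tests to include regardless (e.g. a smoke suite).
    Returns (selected tests, changed files with no covering test).
    """
    changed = set(changed_files)
    selected = {test for test, files in coverage_map.items() if files & changed}
    selected.update(always_run)
    covered = set().union(*coverage_map.values())
    uncovered = changed - covered
    return sorted(selected), sorted(uncovered)


if __name__ == "__main__":
    coverage_map = {
        "test_login": {"auth.py", "session.py"},
        "test_search": {"search.py"},
        "test_checkout": {"cart.py", "payment.py", "session.py"},
    }
    print(select_regression_tests(coverage_map, ["session.py", "README.md"], always_run=["test_smoke"]))
    # (['test_checkout', 'test_login', 'test_smoke'], ['README.md'])
```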

8. Visual User Interface Testing

AI-powered visual testing detects visual bugs, layout shifts, and UI inconsistencies across browsers, devices, and resolutions. This goes beyond pixel comparison to understand visual intent, ensuring a consistent and user-friendly interface.

9. Enhanced Defect Tracing

AI improves defect tracking by correlating test failures with code changes, environments, and historical data. This speeds up root cause analysis, lowers Mean Time to Detect (MTTD), and helps teams resolve issues more efficiently.
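Correlating failures with code changes can be approximated by counting, for each commit since the last passing build, how many failing tests exercise the files it touched. This is a deliberately simple heuristic with hypothetical inputs; AI-based tools weigh further signals such as environments and historical data.

```python
from collections import Counter


def rank_suspect_commits(failing_tests, coverage_map, commits):
    """Rank commits by how many failing tests touch the files they changed.

    failing_tests: names of tests that failed in the current build.
    coverage_map: dict of test name -> set of source files the test exercises.
    commits: list of (commit_id, set of changed files) since the last green build.
    Returns a list of (commit_id, hits), most suspicious first; commits with
    zero hits are omitted.
    """
    scores = Counter()
    for test in failing_tests:
        files = coverage_map.get(test, set())
        for commit_id, changed in commits:
            if files & changed:
                scores[commit_id] += 1
    return scores.most_common()


if __name__ == "__main__":
    coverage_map = {
        "test_login": {"auth.py", "session.py"},
        "test_checkout": {"cart.py", "payment.py", "session.py"},
    }
    commits = [("a1", {"session.py"}), ("b2", {"search.py"}), ("c3", {"payment.py"})]
    print(rank_suspect_commits(["test_login", "test_checkout"], coverage_map, commits))
    # [('a1', 2), ('c3', 1)]
```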

10. Continuous Integration and Delivery (CI/CD) Enhancement

AI strengthens CI/CD pipelines by automatically triggering relevant tests, analyzing results in real time, and blocking risky builds. This ensures continuous quality validation aligned with DevOps and rapid delivery goals.
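A pipeline step that blocks risky builds is commonly implemented as a quality gate: it compares build metrics against thresholds and fails the step if any are violated. The metric names and threshold values below are hypothetical placeholders. The sketch returns the reasons for blocking so a CI wrapper can log them and exit with a non-zero status.

```python
from dataclasses import dataclass


@dataclass
class BuildMetrics:
    pass_rate: float          # fraction of executed tests that passed
    coverage: float           # line or branch coverage, 0.0 to 1.0
    new_critical_defects: int
    p95_latency_ms: float     # from a performance test run


def evaluate_gate(m, min_pass_rate=0.98, min_coverage=0.80, max_p95_latency_ms=300.0):
    """Return a list of human-readable reasons to block the build (empty means pass)."""
    reasons = []
    if m.pass_rate < min_pass_rate:
        reasons.append(f"pass rate {m.pass_rate:.1%} is below {min_pass_rate:.1%}")
    if m.coverage < min_coverage:
        reasons.append(f"coverage {m.coverage:.1%} is below {min_coverage:.1%}")
    if m.new_critical_defects > 0:
        reasons.append(f"{m.new_critical_defects} new critical defect(s)")
    if m.p95_latency_ms > max_p95_latency_ms:
        reasons.append(f"p95 latency {m.p95_latency_ms:.0f} ms exceeds {max_p95_latency_ms:.0f} ms")
    return reasons


def exit_code(reasons):
    # CI systems treat a non-zero exit status as a failed step.
    return 1 if reasons else 0


if __name__ == "__main__":
    metrics = BuildMetrics(pass_rate=0.95, coverage=0.85, new_critical_defects=1, p95_latency_ms=250.0)
    reasons = evaluate_gate(metrics)
    for reason in reasons:
        print("BLOCKED:", reason)
    print("exit status:", exit_code(reasons))
```

In an actual pipeline script, the final step would typically pass `exit_code(reasons)` to `sys.exit`.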

11. Improved Test Coverage

AI identifies untested paths, edge cases, and rarely executed scenarios that manual testing often misses. By expanding test coverage intelligently, QA teams reduce defect leakage and improve overall system reliability.

12. Efficient Bug Detection and Application Verification

AI enhances bug detection accuracy by recognizing complex patterns and anomalies in application behavior. This results in earlier detection of critical issues and more reliable application verification across environments.

13. Resource Optimization

By automating repetitive tasks and prioritizing high-value testing activities, AI helps organizations optimize QA resources. Teams can achieve more with less manual effort, lowering costs while improving productivity and quality outcomes.

As software systems grow more intelligent and interconnected, AI-powered QA becomes essential rather than optional. From predictive insights and automated regression to optimized CI/CD pipelines and enhanced test coverage, AI elevates quality assurance into a strategic capability. Organizations that embrace AI-driven QA gain faster releases, reduced risk, and higher confidence in delivering scalable, user-centric applications.

Regression Testing: Ensuring Stability in AI Systems

Why Regression Testing is Critical for AI

Regression testing verifies that system updates do not introduce new defects or break existing functionality. In AI projects, regressions often stem from:

- Model retraining

- Data pipeline changes

- MLOps workflow updates

Risks of Inadequate Regression Testing

Without robust regression tests, organizations face:

- Undetected visual bugs

- API failures in cloud computing environments

- Performance bottlenecks

- Security vulnerabilities

Many high-profile AI failures can be traced to insufficient regression coverage and poor impact analysis.

Strategies for Effective AI Regression Testing

Best practices include:

- Automated regression testing within CI/CD

- Visual regression testing to detect UI changes and visual inconsistencies

- Versioning of training datasets and models

- Observability using Grafana dashboards

- Reusable unit test fixtures

Tools such as Selenium, TestComplete, and Katalon Studio enable scalable regression validation without slowing innovation.
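Versioning training data can be as lightweight as recording a content fingerprint next to each model version, so a regression run can confirm exactly which data a model was trained on. The sketch below is one possible approach, assuming records are JSON-serializable dictionaries; the function name is hypothetical. It ignores row order and key order but changes whenever any value changes.

```python
import hashlib
import json


def dataset_fingerprint(records):
    """Return an order-independent SHA-256 fingerprint for a list of records.

    Each record is serialized with sorted keys and hashed individually; the
    sorted row hashes are then hashed together. Reordering rows or keys does
    not change the result, but editing, adding, or removing a row does.
    """
    row_hashes = sorted(
        hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()
        for record in records
    )
    combined = hashlib.sha256()
    for row_hash in row_hashes:
        combined.update(row_hash.encode("ascii"))
    return combined.hexdigest()


if __name__ == "__main__":
    train_v1 = [{"text": "refund please", "label": "billing"}, {"text": "app crashes", "label": "bug"}]
    train_v2 = [{"label": "bug", "text": "app crashes"}, {"label": "billing", "text": "refund please"}]
    train_v3 = [{"text": "refund please", "label": "bug"}, {"text": "app crashes", "label": "bug"}]
    print(dataset_fingerprint(train_v1) == dataset_fingerprint(train_v2))  # True
    print(dataset_fingerprint(train_v1) == dataset_fingerprint(train_v3))  # False
```

Storing the fingerprint in the model's metadata lets a regression test fail fast when a model is evaluated against data other than the version it was trained on.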

Six Levels of AI QA Testing

Level Zero: Manual and Script-Based Testing

At Level Zero, QA relies heavily on manual testing and basic test scripts. Adding a new feature—such as a form field—requires adding or updating multiple test cases. Even small UI changes often trigger a full test execution cycle.

Testing at this level is repetitive and reactive. When failures occur, testers manually analyze failed tests to determine whether the issue is a genuine bug or a baseline change caused by updated software behavior. There is no AI assistance, and test maintenance effort is high.

Level One: Assisted Automation with Basic AI Support

At Level One, AI begins to assist QA rather than replace human effort. The application still relies on traditional test automation frameworks, but AI helps optimize test execution and maintenance.

For example, instead of checking individual fields separately, AI can analyze the page holistically and determine whether changes are expected or suspicious. AI can help auto-update test checks when changes are intentional, while still flagging unexpected failures for human review. Human intervention is still required to approve test outcomes.
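The accept-or-flag loop described here can be illustrated with a rule-based stand-in. In this sketch, the set of intentionally changed elements is supplied up front (for example, from the release ticket), which is an assumption; a Level One tool would instead use AI to judge whether a change looks expected. Unexpected differences are queued for human review rather than accepted.

```python
def triage_changes(baseline, current, intentional):
    """Compare captured page values against an approved baseline.

    baseline, current: dict of element id -> observed value (text, style, etc.);
        None is treated as "element absent", so values themselves must not be None.
    intentional: set of element ids this release is expected to change.
    Returns (updated baseline, list of (element id, old, new) needing human review).
    """
    updated = dict(baseline)
    needs_review = []
    for key in sorted(set(baseline) | set(current)):
        old, new = baseline.get(key), current.get(key)
        if old == new:
            continue
        if key in intentional:
            # Expected change: update the baseline automatically.
            if new is None:
                updated.pop(key, None)
            else:
                updated[key] = new
        else:
            # Unexpected change: leave the baseline alone and flag it.
            needs_review.append((key, old, new))
    return updated, needs_review


if __name__ == "__main__":
    baseline = {"title": "Sign in", "button": "Submit", "footer": "Copyright 2023"}
    current = {"title": "Log in", "button": "Submit", "footer": "Copyright 2024", "banner": "Sale"}
    updated, review = triage_changes(baseline, current, intentional={"footer"})
    print(updated["footer"])  # Copyright 2024
    print(review)  # [('banner', None, 'Sale'), ('title', 'Sign in', 'Log in')]
```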

Level Two: AI-Assisted Visual and Semantic Testing

At Level Two, AI is actively used to test visual elements and user-facing changes. QA teams leverage AI to validate layouts, UI consistency, and visual correctness instead of relying solely on pixel-by-pixel comparisons.

AI also understands semantic changes—such as when the same UI element appears on multiple pages—and groups related changes together. Testers can then accept or reject changes as a set, significantly reducing test maintenance time while improving accuracy.
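Grouping can be approximated by treating identical changes to the same element on different pages as one change set. Real tools match elements semantically; the exact-match key used below (selector plus old and new value) is a simplifying assumption, and the data shape is hypothetical.

```python
from collections import defaultdict


def group_changes(changes):
    """Group page-level diffs that represent the same element change.

    changes: iterable of (page, selector, old_value, new_value).
    Returns a dict of (selector, old_value, new_value) -> sorted list of pages.
    """
    groups = defaultdict(list)
    for page, selector, old, new in changes:
        groups[(selector, old, new)].append(page)
    return {key: sorted(pages) for key, pages in groups.items()}


if __name__ == "__main__":
    diffs = [
        ("home", "nav .logo", "logo-v1.png", "logo-v2.png"),
        ("pricing", "h1", "Plans", "Pricing"),
        ("about", "nav .logo", "logo-v1.png", "logo-v2.png"),
        ("contact", "nav .logo", "logo-v1.png", "logo-v2.png"),
    ]
    for (selector, old, new), pages in group_changes(diffs).items():
        print(f"{selector}: {old!r} -> {new!r} on {len(pages)} page(s): {pages}")
```

Here the logo change on three pages collapses into a single accept-or-reject decision, while the heading change on one page remains its own item.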

Level Three: Autonomous Analysis and Intelligent Decision-Making

At this level, AI takes over most test evaluation tasks. Human intervention is minimal and primarily focused on oversight.

AI models analyze application behavior, visual changes, and functional results against learned patterns and design standards. Using machine learning, the system identifies genuine defects, ignores acceptable changes, and adapts over time. The AI continuously learns from historical outcomes to improve future test decisions.

Level Four: Self-Driven Testing and Exploration

Level Four marks the transition to self-driven AI testing. AI no longer just evaluates test results; it actively explores the application.

The system understands page structures, user flows, and interaction patterns. It analyzes how users navigate the application, visualizes behavior, identifies risk areas, and automatically generates and executes relevant test scenarios. Reinforcement learning enables the AI to improve test strategies over time with minimal human input.

Level Five: Fully Autonomous AI QA

Level Five represents a future-forward vision of QA. At this stage, AI independently designs, executes, evaluates, and optimizes tests across the entire application lifecycle.

The AI communicates with development pipelines, adapts to application changes, and continuously validates quality without manual intervention. While this level is still largely experimental, elements of it already exist in automated testing, AI-assisted QA, and self-healing frameworks. Human involvement focuses on strategy, governance, and ethical oversight rather than execution.

Building a Robust QA Team for AI Integration

Skills and Expertise Needed

Modern QA professionals require cross-disciplinary expertise, including:

- AI and Machine learning fundamentals

- MLOps and data validation

- Software testing and test automation

- Understanding regulatory standards

- Experience with cloud environments and virtualization

QA teams must also understand model robustness, ethical AI concerns, and compliance requirements for regulated sectors such as manufacturing and healthcare.

Collaboration Between QA and Development Teams

Successful AI integration depends on strong collaboration across teams using Agile, DevOps, and shift-left testing practices. Continuous feedback loops, shared ownership of quality, and early testing reduce late-stage failures.

Integrated workflows built on version control, automated code review, and shared metrics help align QA with development teams and business stakeholders, delivering measurable business and development outcomes.

Conclusion

QA teams are the backbone of safe, scalable, and trustworthy AI systems. From software testing and automation to AI-driven validation and compliance, QA ensures that AI innovation does not compromise safety, ethics, or reliability.

Organizations that invest in strong QA practices, supported by automation, continuous testing, and skilled professionals, achieve faster releases, better scalability, and higher customer satisfaction. As AI adoption accelerates, prioritizing quality assurance is no longer optional; it is a strategic necessity. Now is the time for enterprises to elevate QA as a core pillar of their AI strategy, ensuring trust, compliance, and long-term success.

FAQs

1: Why is QA more important in AI systems than in traditional software?

AI systems learn from data and can behave unpredictably over time. QA teams validate not only functionality but also data quality, model drift, bias, and ethical behavior—areas not covered by traditional testing.

2: How do QA teams help prevent biased or unsafe AI outcomes?

QA teams test AI models against diverse datasets, edge cases, and fairness metrics. They identify bias, unintended discrimination, and unsafe decision patterns before deployment, reducing real-world risks.
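As an illustration of one such fairness metric, the sketch below compares positive-outcome rates across groups (demographic parity). The function names are hypothetical, and the 0.8 ratio used to flag results is the commonly cited "four-fifths" rule of thumb, not a universal legal threshold; which metric is appropriate depends on the use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups, positive=1):
    """Fraction of positive predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def fairness_report(predictions, groups, positive=1):
    rates = selection_rates(predictions, groups, positive)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "selection_rates": rates,
        # Demographic parity difference: gap between most and least favored groups.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: least favored rate divided by most favored rate.
        "impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    group = ["A"] * 4 + ["B"] * 4
    report = fairness_report(preds, group)
    print(report)
    if report["impact_ratio"] < 0.8:
        print("flag for fairness review")
```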

3: What role does QA play in scaling AI across an organization?

QA ensures AI models remain stable, accurate, and performant as data volume, users, and integrations grow. Automated testing, monitoring, and regression checks enable safe scaling without degrading model behavior.

4: How do QA teams validate AI model reliability in production?

QA teams implement continuous testing, monitoring, and feedback loops to detect model drift, performance drops, and data anomalies, ensuring the AI adapts safely to changing real-world conditions.

5: Can AI systems be compliant without strong QA processes?

No. QA teams verify compliance with regulatory, security, and explainability requirements. They ensure auditability, traceability, and transparency—critical for meeting legal and industry standards in AI deployments.
