The rapid growth of artificial intelligence (AI) stems from advances in both computer hardware and software. Industries worldwide rely on NVIDIA GPUs to train and run their AI models, and those GPUs continue to provide the infrastructure needed to build models at scale, with ongoing gains in speed and efficiency.

In addition, the growing number of GPUs in use and the demand for better performance have changed how companies build and deploy AI, with an emphasis on real-time applications. AI built on NVIDIA GPUs will continue to shape the global AI ecosystem.

✨ Key Insights from This Article:

🚀 Why GPUs are the core engine behind the AI boom in 2026 and how their parallel architecture enables faster training, scalable inference, and real-time AI systems

🧠 How GPU-first architectures outperform CPUs for modern AI workloads, powering large language models, generative AI, and agentic AI at scale

🏗️ Where AI infrastructure bottlenecks emerge—from GPU shortages and networking constraints to energy limits and data center design challenges

⚙️ How advances in GPU platforms, high-bandwidth memory, and networking ecosystems are reshaping AI development, deployment, and optimization

🌍 Why strategic access to GPU-driven compute defines competitive advantage, influencing AI monetization, enterprise resilience, and long-term business growth

Introduction: The Unstoppable Ascent of AI and Its Core Engine

By 2026, AI has become part of standard operating procedure for many companies, and it has changed how they compete across industries. The GPU is the fundamental driver of this growth because it delivers the processing speed that developing new AI technologies requires. Businesses therefore need to understand the GPU infrastructure behind successful AI development services and AI solutions, and how best to leverage it.

- AI adoption has accelerated across industries due to mature generative AI tools and AI assistants.

- Large language models now drive search, automation, and customer engagement.

- Compute availability has become a board-level concern for AI-driven companies.

For companies exploring AI-led growth, Frugal Testing views GPUs not as optional hardware but as the backbone of reliable and scalable AI infrastructure. Their role explains why the global AI industry continues to expand at record speed.

Why GPUs Have Become the Backbone of the Global AI Boom

Originally created for video games, GPUs (graphics processing units) have evolved from a peripheral device into the core engine for AI workloads. Because a GPU performs many operations in parallel, it is far more efficient than a CPU (central processing unit) at training deep learning models (neural networks) and running generative models (which create or manipulate images, music, or video). In 2026, nearly every serious AI agent, AI assistant, or agentic AI system relies on GPU acceleration.

- GPUs process thousands of operations simultaneously, whereas CPUs are optimized for a smaller number of largely sequential tasks.

- Modern AI GPUs dramatically reduce latency and improve bandwidth utilization.

- Cloud GPU access enables AI-as-a-Service business models

As AI models grow in size and complexity, GPUs remain the most practical and proven solution for scalable AI compute, reinforcing their central role in the AI boom.

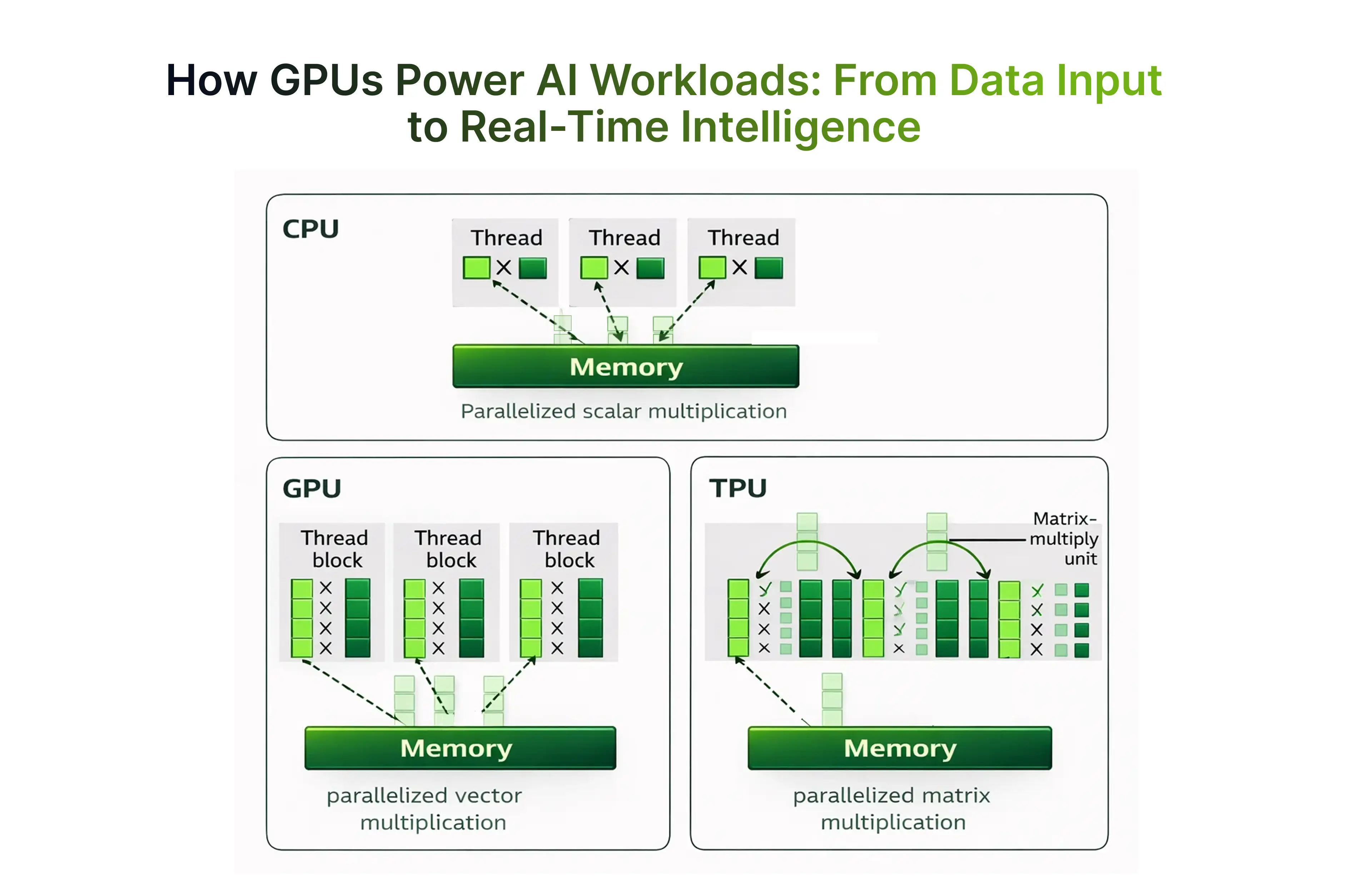

Why GPUs Are Architecturally Superior for AI Workloads

The question many decision-makers ask is simple: what makes a GPU better than a CPU for AI? The answer lies in architecture. GPUs are purpose-built for high-throughput workloads, making them uniquely suited for training and inference across modern AI models.

- GPUs outperform CPUs in matrix multiplication and tensor operations

- AI chips leverage parallelism to reduce training time

- GPU hierarchy allows optimized workloads across cloud and edge deployments

For enterprises deploying AI infrastructure at scale, these architectural advantages translate directly into faster time-to-market and lower operational risk.
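To make the matrix multiplication point concrete, here is a minimal sketch in plain Python (no GPU involved). It shows that every output cell of a matrix product depends only on one row and one column, so the cells can be computed in any order, which is the property a GPU exploits by assigning cells or tiles to separate threads. The function names are illustrative and not taken from any library.

```python
import random

def matmul_cell(a, b, i, j):
    """One output element: the dot product of row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul_any_order(a, b, seed=0):
    """Compute a @ b while visiting output cells in a shuffled order.

    No cell reads another cell's result, so the visiting order is irrelevant.
    That independence is what allows the work to be spread across many threads.
    """
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(cells)
    out = [[0] * cols for _ in range(rows)]
    for i, j in cells:
        out[i][j] = matmul_cell(a, b, i, j)
    return out

def matmul_flops(m, k, n):
    """Operation count for an (m x k) @ (k x n) product, 2 FLOPs per multiply-add."""
    return 2 * m * k * n

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_any_order(a, b))           # [[19, 22], [43, 50]]
print(matmul_flops(4096, 4096, 4096))   # 137438953472
```

A single 4096 x 4096 product already involves more than 137 billion operations, all of them independent at the cell level, which is why hardware built for throughput rather than single-thread speed suits this workload.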

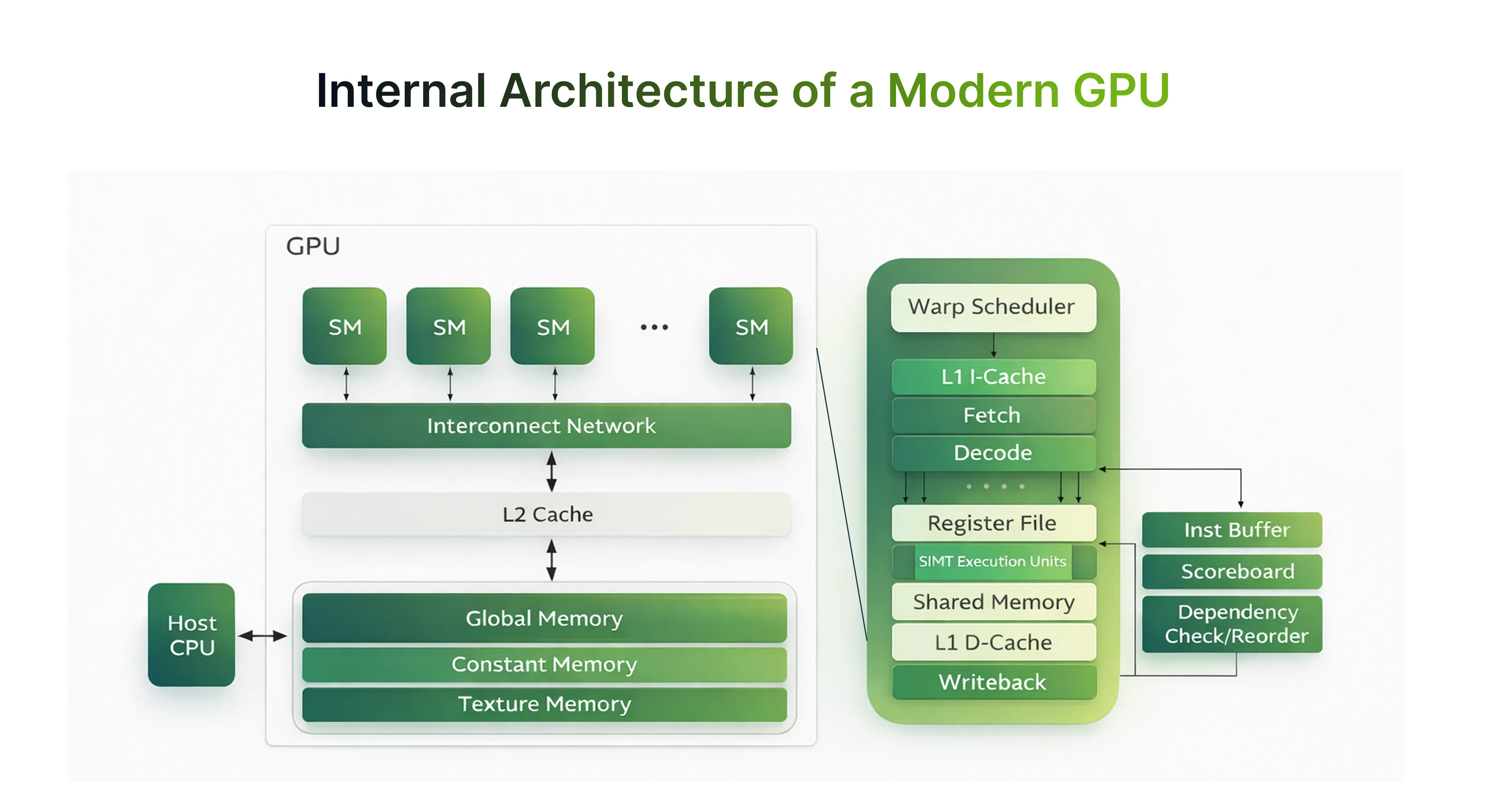

The following diagram breaks down the internal components of a modern GPU and how they work together to accelerate AI workloads. It highlights how SMs, memory hierarchies, and warp scheduling enable massive parallel processing and efficient data flow.

Massive Parallelism, AI-Optimized Cores, and High-Bandwidth Memory

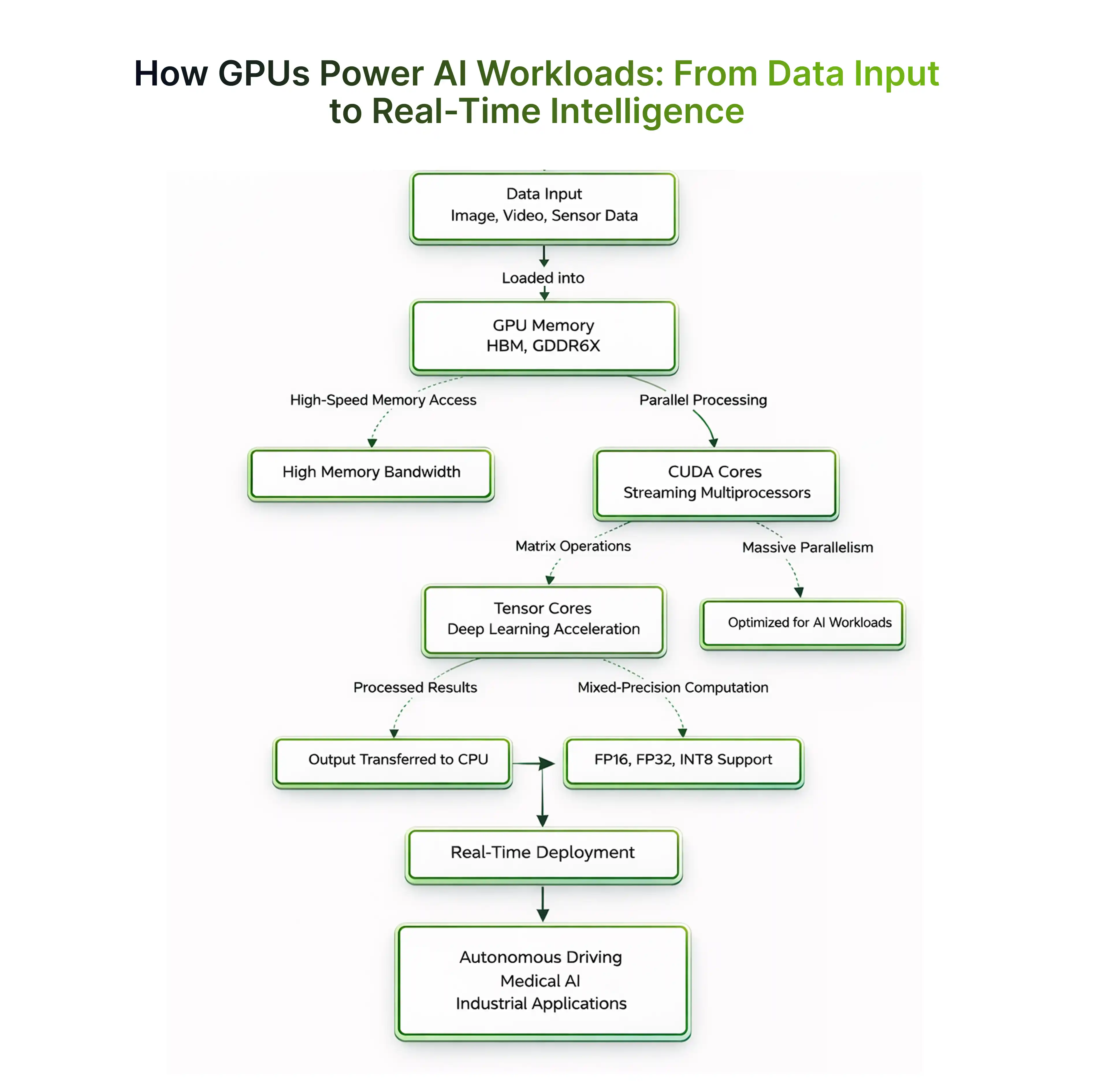

The latest GPU generations build this architectural design into their product lines, including NVIDIA's H100 and GH200 and the Blackwell platform. These systems are designed to move massive datasets efficiently, which is essential for large language models, especially in the inference phase.

- High-bandwidth memory such as HBM3 and HBM3e accelerates training; GDDR6 and LPDDR5X are separate memory types used in graphics cards and CPU-attached memory.

- Memory support from manufacturers such as Micron ensures reliable throughput.

- Low-latency access enables real-time AI applications.

This architectural sophistication explains why GPUs dominate the AI accelerator market and continue to outpace ASICs, TPUs, and custom silicon alternatives for general-purpose AI.

This advantage is most visible when comparing how CPUs and GPUs handle memory bandwidth during AI workloads.
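One rough way to reason about this comparison is a roofline-style estimate: attainable throughput is the smaller of peak compute and memory bandwidth multiplied by the number of operations performed per byte moved. The sketch below uses hypothetical CPU and GPU figures chosen only to show the shape of the comparison; they are not measurements of any real product.

```python
def attainable_tflops(peak_tflops, bandwidth_tb_s, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic.

    bandwidth (TB/s) * intensity (FLOPs/byte) yields TFLOP/s.
    """
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)

# Hypothetical devices (assumed numbers, for illustration only).
cpu = {"peak_tflops": 4.0, "bandwidth_tb_s": 0.25}
gpu = {"peak_tflops": 400.0, "bandwidth_tb_s": 2.0}

for intensity in (1, 16, 256):  # FLOPs performed per byte moved
    c = attainable_tflops(cpu["peak_tflops"], cpu["bandwidth_tb_s"], intensity)
    g = attainable_tflops(gpu["peak_tflops"], gpu["bandwidth_tb_s"], intensity)
    print(f"intensity={intensity:>3}: cpu={c:.2f} TFLOP/s, gpu={g:.2f} TFLOP/s")
```

At low intensity both devices are limited by memory bandwidth rather than compute, which is why memory technology matters as much as core count for many AI workloads.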

The diagram below illustrates how GPUs process AI workloads end to end, from ingesting raw data to delivering real-time outcomes. It highlights the role of GPU memory, parallel processing, CUDA and Tensor cores, and mixed-precision computing in accelerating modern AI applications.
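As a small worked example of why mixed precision matters, the sketch below estimates the memory needed to hold a model's weights at different numeric precisions. The 7-billion-parameter model is a hypothetical example, and the estimate covers weights only; activations, optimizer state, and inference caches would add to it.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}

def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

params = 7_000_000_000  # hypothetical 7-billion-parameter model
for dtype in ("fp32", "bf16", "fp8"):
    print(f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB")
# fp32: 28 GB
# bf16: 14 GB
# fp8: 7 GB
```

Halving the bytes per parameter halves both the memory footprint and the data that must move through the memory system for each pass over the weights.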

The 2026 GPU Landscape: Platforms, Competition, and Demand

Rapidly growing demand for GPUs is creating a large market opportunity, with new platforms in development and significant competition emerging. High-performance computing (HPC) centers, commercial users, and government entities are all scrambling to secure their share of available GPUs, which is changing how semiconductors are manufactured and how AI chips are designed.

- XPU demand continues to exceed supply across regions

- AI clusters and supercomputers rely on GPU-first architectures

- Market capitalization and P/E ratio metrics reflect investor confidence

This environment has turned GPUs into a strategic asset rather than a commodity.

NVIDIA’s Leadership, Rising Competition, and the Ongoing GPU Shortage

NVIDIA remains the dominant force in AI GPUs, with the CUDA software ecosystem and NVIDIA GeForce driving adoption. Products such as the Blackwell platform, Rubin architecture, and DRIVE platform extend NVIDIA's AI leadership beyond data centers into edge AI chips and autonomous vehicles.

- NVIDIA GPUs outperform rivals in software maturity and ecosystem depth

- Competition from AMD GPUs, TPUs, and ASICs is increasing but remains fragmented

- Fabrication dependencies on TSMC and global supply chains limit availability

For enterprises, this shortage underscores the importance of planning AI infrastructure early and strategically.

Understanding how GPUs compare to CPUs and TPUs helps organizations choose the right hardware amid ongoing supply constraints.

The Infrastructure Powering AI at Scale

GPUs alone do not power AI at scale; they depend on a complex data center and networking ecosystem. In 2026, AI infrastructure design is as critical as model architecture, making AI consulting services essential for organizations deploying AI at scale.

- Hyperscale cloud providers pursue million-GPU cluster strategies

- AI infrastructure investments focus on reliability and efficiency

- Strategic Compute Reserve concepts are gaining traction

This shift has elevated infrastructure design to a competitive differentiator.

High-Speed Networking, Data Center Design, and Energy Constraints

High-speed networking technologies like InfiniBand, Ethernet standards, and Broadcom Tomahawk 6 switch platforms enable GPUs to operate as unified systems. Modern data centers now prioritize low latency, energy efficiency, and scalable interconnects (see our performance testing services for how we validate such architectures).

- Ethernet Bloc and Ultra Ethernet Consortium standards support AI workloads.

- Bandwidth optimization is critical for AI clusters and SuperPOD deployments.

- Energy constraints influence the geographic placement of data centers

These considerations shape how AI services are delivered globally and locally.
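To illustrate why interconnect bandwidth matters for a cluster, the sketch below estimates how long one gradient synchronization would take. It assumes a ring all-reduce, a common collective in distributed training, where each GPU sends roughly 2 x (N - 1) / N times the payload size. Latency, protocol overhead, and overlap with computation are ignored, and the payload and link speeds are hypothetical.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes, num_gpus):
    """Bytes each GPU sends in a ring all-reduce: 2 * (N - 1) / N * payload."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes

def ring_allreduce_seconds(payload_bytes, num_gpus, link_gbit_s):
    """Bandwidth-only time estimate; ignores latency and protocol overhead."""
    bytes_per_second = link_gbit_s * 1e9 / 8  # gigabits/s -> bytes/s
    return ring_allreduce_bytes_per_gpu(payload_bytes, num_gpus) / bytes_per_second

gradients = 14e9  # hypothetical 14 GB of gradients per synchronization
for link in (100, 400):  # assumed per-GPU link speeds in Gbit/s
    t = ring_allreduce_seconds(gradients, num_gpus=8, link_gbit_s=link)
    print(f"{link} Gbit/s link: {t:.2f} s per all-reduce")
```

Under these assumptions, moving from a 100 Gbit/s link to a 400 Gbit/s link cuts the synchronization time to a quarter, and that saving repeats on every training step.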

How GPUs Are Transforming AI Development in 2026

The impact of GPUs extends beyond infrastructure into how AI is built, tested, and deployed. Faster iteration cycles have fundamentally changed AI development practices.

- GPUs reduce training cycles from months to days

- Scalable inference supports real-time AI tools

- Agentic AI systems rely on continuous GPU availability

This transformation benefits both startups and large enterprises.
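A back-of-the-envelope estimate shows how cluster size can move training time from months to days. The sketch below assumes the widely used rule of thumb of about 6 FLOPs per parameter per training token for dense models, a hypothetical GPU with 400 TFLOP/s peak throughput running at 40% utilization, and perfect scaling across GPUs. All of these are assumptions for illustration, and real runs will differ.

```python
SECONDS_PER_DAY = 86_400

def training_days(num_params, num_tokens, num_gpus, peak_tflops, utilization):
    """Estimate training time from total FLOPs and sustained cluster throughput."""
    total_flops = 6 * num_params * num_tokens           # rule-of-thumb estimate
    sustained_flops_per_s = num_gpus * peak_tflops * 1e12 * utilization
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY

# Hypothetical run: 7B parameters trained on 1 trillion tokens.
for gpus in (8, 64, 512):
    days = training_days(7e9, 1e12, gpus, peak_tflops=400, utilization=0.4)
    print(f"{gpus:>3} GPUs: about {days:.1f} days")
```

With these inputs the estimate falls from roughly 380 days on 8 GPUs to about 6 days on 512, which is the kind of compression that makes rapid iteration practical.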

Faster Model Training, Scalable Inference, and New AI Paradigms

GPUs enable rapid experimentation with deep learning and generative AI architectures. Organizations using OpenAI platforms, Microsoft AI services, and Meta Platforms leverage GPU acceleration to deploy AI agents at scale.

- Faster inference phase performance improves user experience

- AI models adapt continuously using GPU-backed pipelines

- New paradigms like agentic AI and tokenized incentive models emerge

These advances highlight why GPUs remain essential to innovation.

The Economic and Strategic Impact of GPU-Driven AI Growth

GPU-led AI growth is reshaping global economics and corporate strategy. Access to compute now defines competitive advantage.

- AI monetization supercycle drives new revenue streams

- AI chip and AI accelerator market valuations continue rising

- Government policies like the CHIPS and Science Act influence supply

Organizations that plan strategically gain long-term resilience.

Monetization, Compute Accessibility, and Competitive Advantage

These economic and strategic shifts are most visible in how organizations monetize AI and gain access to GPU-powered compute at scale. AI-driven companies monetize intelligence through AI-as-a-Service, Web3 integrations, and enterprise automation. GPUs make this monetization scalable and reliable, creating recurring revenue and defensible business models.

- Hyperscalers and Spheron-style platforms democratize GPU access, reducing capital barriers and accelerating time-to-market

- Compute accessibility levels the playing field for mid-sized firms, allowing them to compete with larger enterprises

- Strategic AI investments improve long-term market cap performance and investor confidence

For service-driven companies like Frugal Testing, this means helping clients validate, test, and optimize custom AI development services and AI systems built on GPU infrastructure.

Conclusion: GPUs as the Enduring Engine of AI’s Future

As the AI industry matures, GPUs continue to stand out as the enduring engine behind innovation. GPUs, including NVIDIA graphics cards, GeForce platforms, and data centre-grade AI chips, remain the most versatile and efficient hardware for complex, multifaceted AI workloads. Despite growing competition from alternatives like TPUs, ASICs, and RISC-V architectures, they remain the primary foundation for scalable AI solutions.

One of the key reasons for this dominance is GPUs’ ability to support the entire AI lifecycle, from model training to real-time inference. Their well-established software and developer ecosystem significantly reduces operational and integration risks for enterprises, making adoption faster and more reliable across industries.

For organizations planning AI investments in 2026, strategic alignment around GPU-driven infrastructure is no longer optional. Thoughtful planning that combines the right hardware, supporting software, and rigorous testing ensures sustainable AI growth and long-term performance at scale.

People Also Ask (FAQs)

Q. How do GPUs compare to TPUs and custom AI accelerators for general-purpose AI workloads?

Ans. GPUs offer broad flexibility and strong performance across many AI models, while TPUs and custom accelerators can be more efficient for specific workloads but are less versatile.

Q. What factors should organizations consider when choosing GPUs for AI projects in 2026?

Ans. Organizations should evaluate performance (TFLOPS/memory), power efficiency, ecosystem support, cost, and compatibility with frameworks like PyTorch or TensorFlow.

Q. How does GPU availability impact AI startups versus large enterprises?

Ans. Limited GPU availability can bottleneck startups with smaller budgets, while large enterprises can secure supply and scale deployments more easily.

Q. Are consumer-grade GPUs viable for enterprise or production AI use cases?

Ans. Consumer GPUs can work for prototyping and small workloads, but enterprise AI generally needs datacenter-grade GPUs for reliability, support, and scalability.

Q. What long-term risks could affect the sustainability of GPU-driven AI growth?

Ans. Risks include supply chain constraints, rising energy demand, hardware bottlenecks, and the need for new architectures as model complexity increases.
